Two Dialogs on Searle

If a computer can beat you at chess,

it can beat you at philosophy.

The first dialog explores the fundamental mistake Searle makes in his "Chinese Room Argument", namely that while in the general case syntax is insufficient for semantics, programming languages are semantics with syntax. I have written about Searle before (the latest here), but that's me making a case to an empty courtroom. Here, I carry on an adversarial conversation with Grok 3.0 and let it judge the result.

The second dialog considers whether Searle can maintain his conclusion that computers cannot be conscious without his "syntax is insufficient for semantics" pillar, except by denying the Church-Turing Hypothesis, which he affirms. My position is that he can't; Grok tried to show he can.

Grok is overly verbose, yet sometimes exhibits what appears to be keen insight and sometimes says things in a way that makes me jealous that it can be a better wordsmith than me. Grok doesn't get mad, doesn't get frustrated, and doesn't get tired (at least as long as I maintain my $30/month subscription). It is a far more challenging opponent than I typically have access to.

In the future, these discussions will be multi-way with humans and chatbots pushing the boundaries, with the machines keeping everyone honest - demanding definitions, uncovering tacit assumptions, noticing loops, and keeping score. We will be able to save a transcript, then ask ChatGPT to read it and comment on it. New insights can be added, repetitious material ignored. The possibility of positive transformation is exciting.

Dialog 1 - Searle's mistake

Dialog 2 - Searle and the Church-Turing Hypothesis

Theodicy: Another Perspective

This same change of perspective is possible with the "problem" of evil (and of divine hiddenness), two arguments employed for the non-existence of [a good] God.

The first step to the change of perspective is to remember that logic does not prove its axioms. It cannot, otherwise one is engaging in circular reasoning. The next step is to recall that logic neither adds to, nor subtracts from, the system described by the axioms. If it isn't "in" the axioms, it doesn't appear as a result; if it is "in" the axioms, it doesn't disappear from the result. Logic is a physical operation, not unlike a meat grinder. Beef in, hamburger out; pork in, ground pork out.

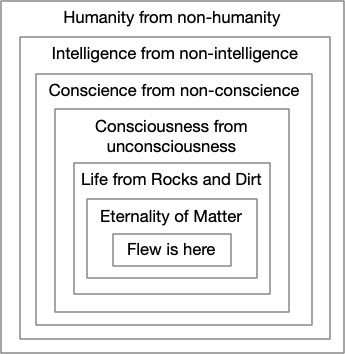

These first two steps should be without controversy. So should the third, but it's less familiar; namely, that nature does not impose on us the axioms that we choose. There are more axioms in "mind" space than there are in "meat" space2,3, and nature does not "tell" us which axioms we should use to describe it.

The switchover may come from the observation that the problem of evil and/or divine hiddenness are problems for some people and not for others. Jesus, for example, affirmed the existence of a good God in Luke 18:19 and denied a problem with hiddenness in his sermon on the mount [Matthew 5:8].

So if evil and hiddenness are problems, then the problem is inherent in the axioms; if they aren't problems, they aren't problems inherent in the axioms.4

Since nature doesn't tell us which axioms to choose, why do we choose the axioms we do? Are these "problems" just a mirror to our souls?

Addendum

It's gratifying to find someone coming to the same conclusion independently. In the coffee shop this morning I read the following and had to update this post.

Our passions infiltrate our intuitions. When in a bad mood, we read someone’s neutral look as a glare; in a good mood, we intuit the same look as interest. Social psychologists have played with the effect of emotions on social intuitions by manipulating the setting in which someone sees a face. Told a pictured man was a Gestapo leader, people will detect cruelty in his unsmiling face. Told he was an anti-Nazi hero, they will see kindness behind his caring eyes. Filmmakers have called this the “Kulechov effect,” after a Russian film director who similarly showed viewers an expressionless man. If first shown a bowl of hot soup, their intuition told them he was pensive. If shown a dead woman, they perceived him as sorrowful. If shown a girl playing, they said he seemed happy. The moral: our intuitions construe reality differently, depending on our assumptions. “We don’t see things as they are,” says the Talmud, “we see things as we are.” – "Intuition", David G. Myers

[1] I usually make a "v" with my middle and index fingers and look at the dancer through the gap, starting at her feet and moving up until she changes direction. If she doesn't switch by the time I get to the waist, I move down and try again. Sometimes I have to vary the width of the gap. Blink several times and she goes back to the initial direction.

[2] This assumes that there is a difference between the two spaces. We certainly perceive that there is. But, as the dancer illustrates, we might not be looking at reality the right way.

[3] Non-Euclidean geometry is one well-known example of this.

[4] See "The Rules of God Club" for one set of axioms in which evil and hiddenness are not problems.

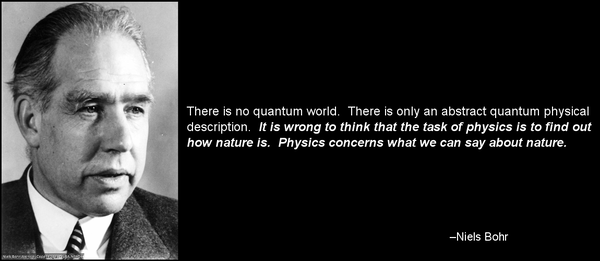

Bohr on Nature

What's interesting about this is that what Bohr thinks we can say about nature is also what we think we can say about consciousness. We recognize consciousness by behavior (cf. Searle's Chinese Room), but we cannot say whether consciousness is produced by that behavior or whether it is revealed by that behavior.

AGI

If AGI doesn't include insanity as well as intelligence then it may be artificial, but it won't be general.

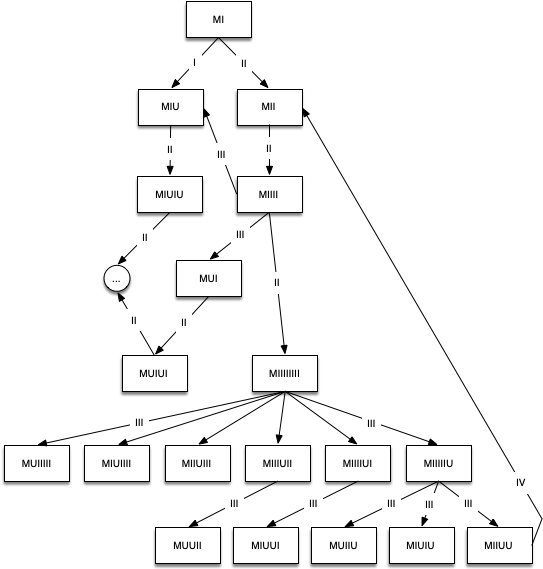

In "Gödel, Escher, Bach" Hofstadter wrote:

It is an inherent property of intelligence that it can jump out of the task which it is performing, and survey what it has done; it is always looking for, and often finding, patterns.1

Over 400 pages later, he repeats this idea:

This drive to jump out of the system is a pervasive one, and lies behind all progress in art, music, and other human endeavors. It also lies behind such trivial undertakings as the making of radio and television commercials.2

For intelligence to be general, the ability to jump must be in all directions.

[1] Pg. 37

[2] Pg. 478

This idea is repeated in this post from almost 13 years ago. Have I stopped jumping outside the lines?

Quote

1,2

Whether or not the √2 is irrational cannot be shown by measuring it.

Whether or not the Church-Turing hypothesis is true cannot be shown by thinking about it.

[1] "Euclidean and Non-Euclidean Geometries", Greenberg, Marvin J., Second Edition, pg. 7: "The point is that this irrationality of length could never have been discovered by physical measurements, which always include a small experimental margin of error."

[2] This quote is partially inspired by Scott Aaronson's "PHYS771 Lecture 9: Quantum" where he talks about the necessity of experiments.

Searle's Chinese Room: Another Nail

[updated 3/9/2024 - note on Searle's statement that "programs are not machines"]

[updated 10/8/2024; 02/09/2025 - added "Searle's Fundamental Error" at beginning to present the gist of why his argument is wrong]

Searle's Fundamental Error

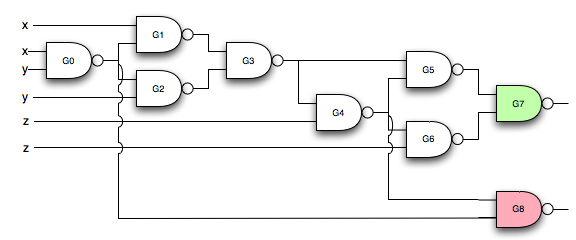

Searle's claim is that, because syntax is insufficient for semantics, computers cannot understand meaning the way humans can. What Searle misses is that computer languages are semantics that happen to have syntax. Every computer language is equivalent to a network of logic gates, and logic gates specify behavior. These networks can easily implement semantics.

Introduction

I have discussed Searle's "Chinese Room Argument" twice before: here and here. It isn't necessary to review them. While both of them argue against Searle's conclusion, they aren't as complete as I think they could be. This is one more attempt to put another nail in the coffin, but the appeal of Searle's argument is so strong - even though it is manifestly wrong - that it may refuse to stay buried. The Addendum explains why.

Searle's paper, "Minds, Brains, and Programs", is here. He argues that computers will never be able to understand language the way humans do for these reasons:

1. Computers manipulate symbols.

2. Symbol manipulation is insufficient for understanding the meaning behind the symbols being manipulated.

3. Humans cannot communicate semantics via programming.

4. Therefore, computers cannot understand symbols the way humans understand symbols.

1. Is certainly true. But Searle's argument ultimately fails because he only considers a subset of the kinds of symbol manipulation a computer (and a human brain) can do.

2. Is partially true. This idea is also expressed as "syntax is insufficient for semantics." I remember, from over 50 years ago, when I started taking German in 10th grade. We quickly learned to say "good morning, how are you?" and to respond with "I'm fine. And you?" One morning, our teacher was standing outside the classroom door and asked each student, as they entered, the German equivalent of "Good morning," followed by the student's name, "how are you?" Instead of answering from the dialog we had learned, I decided to ad lib, "Ich bin heiss." My teacher turned bright red from the neck up. Bless her heart, she took me aside and said, "No, Wilhelm. What you should have said was, 'Es ist mir heiss'. To me it is hot. What you said was that you are experiencing increased libido." I had used a simple symbol substitution, "Ich" for "I", "bin" for "am", and "heiss" for "hot", temperature-wise. But, clearly, I didn't understand what I was saying. Right syntax, wrong semantics. Nevertheless, I do now understand the difference. What Searle fails to establish is how meaningless symbols acquire meaning. So he handicaps the computer. The human has meaning and substitution rules; Searle only allows the computer substitution rules.

3. Is completely false.

To understand why 2 is only partially true, we have to understand why 3 is false.

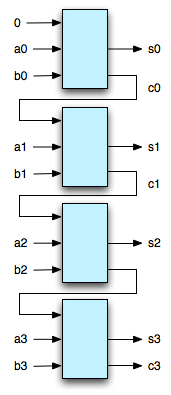

- A Turing-complete machine can simulate any other Turing machine. Two machines are Turing equivalent if each machine can simulate the other.

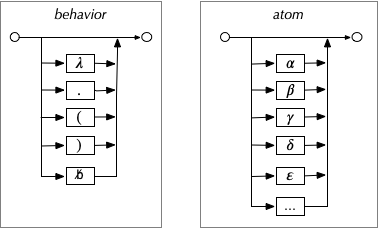

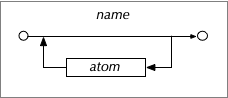

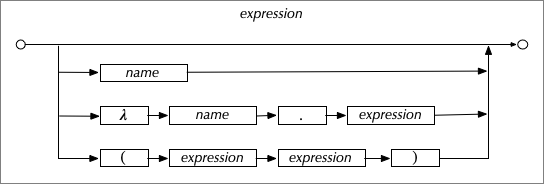

- The lambda calculus is Turing complete.

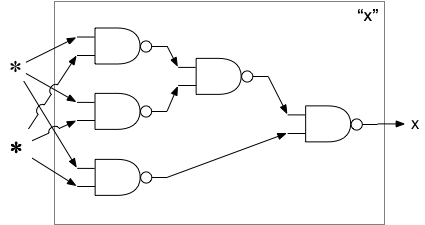

- A machine composed of NAND gates (a "computer" in the everyday sense) can be Turing complete.

- A NAND gate (along with a NOR gate) is a "universal" logic gate.

- Memory can also be constructed from NAND gates.

- The equivalence of a NAND-based machine and the lambda calculus is demonstrated by instantiating the lambda calculus on a computer.1

- From 3, every computer program can be written as expressions in the lambda calculus; every computer program can be expressed as an arrangement of logic gates. We could, if we so desired, build a custom physical device for every computer program. But it is massively economically unfeasible to do so.

- Because every computer program has an equivalent arrangement of NAND gates2, a Turing-complete machine can simulate that program.

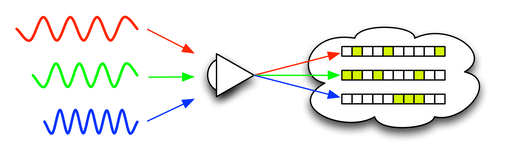

- NAND gates are building-blocks of behavior. So the syntax of every computer program represents behavior.

- Having established that computer programs communicate behavior, we can easily see why Searle's #2 is only partially true. Symbol substitution is one form of behavior. Semantics is another. Semantics is "this is that" behavior. This is the basic idea behind a dictionary. The brain associates visual, aural, temporal, and other sensory input and this is how we acquire meaning [see "The Problem of Qualia"]. Associating the visual input of a "dog", the sound "dog", the printed word "dog", and the feel of a dog's fur is how we learn what "dog" means. We have massive amounts of data that our brain associates to build meaning. We handicap our machines, first, by not typically giving them the ability to have the same experiences we do. We handicap them, second, by not giving them the vast range of associations that we have. Nevertheless, there are machines that demonstrate that they understand colors, shapes, locations, and words. When told to describe a scene, they can. When requested to "take the red block on the table and place it in the blue bowl on the floor", they can.
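The claim in the list above that a program has an equivalent arrangement of NAND gates can be illustrated on a very small scale. The following is a minimal sketch, not a construction from the original post: the function names (`nand`, `xor`, `full_adder`, `add`) and the 8-bit width are assumptions chosen for illustration. It builds integer addition out of nothing but a NAND primitive.

```python
def nand(a: int, b: int) -> int:
    """The only primitive: output 0 only when both inputs are 1."""
    return 0 if (a and b) else 1


def xor(a: int, b: int) -> int:
    """Exclusive-or built from four NAND gates."""
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit adder returning (sum_bit, carry_out), using NAND gates only."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    # carry = (a AND b) OR (partial AND carry_in), written with NANDs
    carry_out = nand(nand(a, b), nand(partial, carry_in))
    return sum_bit, carry_out


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, modulo 2**width."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result
```

Here `add(2, 3)` gives the same answer as the `+` operator, yet the only operation ever performed on the bits is NAND.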

I was able to correct my behavior by establishing new associations: temperature and libido with German usage of "heiss". That associative behavior can be communicated to a machine. A machine, sensing a rise in temperature, could then inform an operator of its distress, "Es ist mir heiss!". Likely (at least, for now) lacking libido, it would not say, "Ich bin heiss."

Having shown that Searle's argument is based on a complete misunderstanding of computation, I wish to address selected statements in his paper.


The Physical Ground of Logic

[updated 14 January 2024 to add note on semantics and syntax]

[updated 17 January 2024 to add note on universality of NAND and NOR gates]

[updated 26 March 2024 to add note about philosophical considerations of rotation]

The nature of logic is a contested philosophical question. One position is that logic exists independently of the physical realm; another is that it is a fundamental aspect of the physical realm; another is that it is a product of the physical realm. These correspond roughly to the positions of idealism, dualism, and materialism.

Here, the physical basis for logic is demonstrated. This doesn't disprove idealism, dualism, or materialism; but it does make it harder to find a bright line of demarcation between them and a way to make a final determination as to which might correspond to reality.

There are multiple logics. As "zeroth-order" (or "propositional logic") is the basis of all higher logics, we start here.

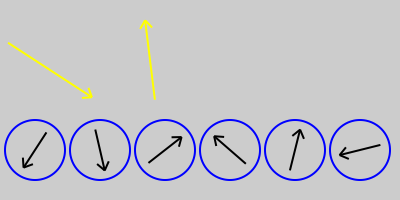

Begin with two arbitrary distinguishable objects. They can be anything: coins with heads and tails; bees and bears; letters in an alphabet; silicon and carbon atoms. Here, two unique objects, "lefty" and "righty", are chosen. The main reason for this choice is to use objects that have no commonly associated meaning. Meaning must not creep in "the back door", and using unfamiliar objects should help keep that from inadvertently happening. A secondary engineering reason, with a strong philosophical connection, will be demonstrated later.

| Lefty | Righty |
|---|---|
| \ | \| |

These two objects can be combined four ways as shown in the next table. You should be able to convince yourself that these are the only four ways for these combinations to occur. For convenience, each input row and column is labeled for future reference.

| | $1 | $2 |
|---|---|---|
| C0 | \ | \ |
| C1 | \ | \| |
| C2 | \| | \ |
| C3 | \| | \| |

Next, observe that there are sixteen ways to select an object from each of the four combinations. You should be able to convince yourself that these are the only ways for these selections to occur. For convenience, the selection columns are labelled for future reference. The selection columns correspond to the combination rows.

| | S0 | S1 | S2 | S3 | S4 | S5 | S6 | S7 | S8 | S9 | S10 | S11 | S12 | S13 | S14 | S15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| C0 | \| | \| | \| | \| | \| | \| | \| | \| | \ | \ | \ | \ | \ | \ | \ | \ |
| C1 | \| | \| | \| | \| | \ | \ | \ | \ | \| | \| | \| | \| | \ | \ | \ | \ |
| C2 | \| | \| | \ | \ | \| | \| | \ | \ | \| | \| | \ | \ | \| | \| | \ | \ |
| C3 | \| | \ | \| | \ | \| | \ | \| | \ | \| | \ | \| | \ | \| | \ | \| | \ |

Now the task is to build physical devices that combine these two inputs and select an output according to each selection rule. Notice that in twelve of the selection rules it is possible to get an output that was not an input. On the one hand, this is like pulling a rabbit out of a hat. It looks like something is coming out that wasn't going in. On the other hand, it's possible to build a device with a "hidden reservoir" of objects so that the required object is produced. But this is the engineering reason why "lefty" and "righty" are the way they are. "lefty" can be turned into "righty" and "righty" can be turned into "lefty" by rotating the object and rotation is a physical operation on a physical object.

This engineering decision has interesting implications. It ensures that logic is self-contained: what goes in is what comes out. If it doesn't go in, it doesn't come out. The logic gate "not" turns truth into falsity and falsity into truth by a simple rotation. Philosophically, this means that truth and falsity are stance dependent; truth depends on how you look at it. Moving an observer is equivalent to rotating the symbol.
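The counting claims above (four combinations, sixteen selections, and twelve selection rules that can output an object that was not an input) can be checked mechanically. This is a small sketch under stated assumptions: the names `COMBINATIONS`, `SELECTIONS`, and `can_emit_foreign` are illustrative, and the numbering treats C0 as the most significant position with lefty counted as 1, a convention inferred from the selection table rather than stated in the text.

```python
from itertools import product

LEFTY, RIGHTY = "\\", "|"

# C0..C3: every ordered pair of the two objects
COMBINATIONS = list(product((LEFTY, RIGHTY), repeat=2))

# S0..S15: one output chosen for each of C0..C3.
# Ordering (RIGHTY, LEFTY) makes SELECTIONS[n] line up with column Sn.
SELECTIONS = list(product((RIGHTY, LEFTY), repeat=4))


def can_emit_foreign(selection):
    """True if some combination yields an object that was not one of its inputs."""
    return any(out not in inputs for inputs, out in zip(COMBINATIONS, selection))


FOREIGN = [n for n, sel in enumerate(SELECTIONS) if can_emit_foreign(sel)]
CLOSED = [n for n in range(16) if n not in FOREIGN]

print(len(COMBINATIONS), len(SELECTIONS), len(FOREIGN), CLOSED)
```

The four rules that never need a "hidden reservoir" are the ones that return lefty for two lefties and righty for two righties.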

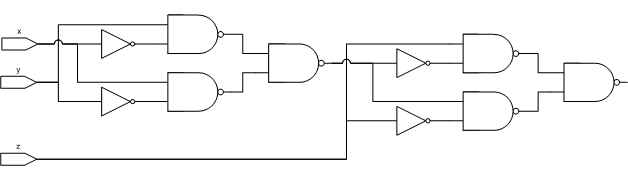

Suppose we can build a device which has the behavior of S7:

We know how to build devices which have the behavior of S7, which is known as a "NAND" (Not AND)1 gate. There are numerous ways to build these devices: with semiconductors that use electricity, with waveguides that use fluids such as air and water, with materials that can flex, neurons in the brain, even marbles.2 NAND gates and NOR gates (S1) are known as universal gates, since arrangements of each gate can produce all of the other selection operations. The demonstration with NAND gates is here. The correspondence between digital logic circuits and propositional logic has been known for a long time.

John Harrison writes3:

| Digital design | Propositional Logic |
|---|---|
| logic gate | propositional connective |
| circuit | formula |
| input wire | atom |
| internal wire | subexpression |
| voltage level | truth value |
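The universality of NAND and NOR mentioned above can be checked by brute force. The sketch below is an illustration, not the demonstration the post links to. It represents each two-input operation as a 4-tuple of outputs, starts from the two bare inputs, and keeps feeding known operations through a single gate until nothing new appears. The 0/1 encoding and the name `closure` are assumptions made for the example.

```python
def nand(a: int, b: int) -> int:
    return 0 if (a and b) else 1


def nor(a: int, b: int) -> int:
    return 0 if (a or b) else 1


def and_(a: int, b: int) -> int:
    return 1 if (a and b) else 0


def closure(gate):
    """All two-input operations reachable by wiring copies of `gate` together."""
    first = (0, 0, 1, 1)   # the first input across the four combinations
    second = (0, 1, 0, 1)  # the second input across the four combinations
    known = {first, second}
    while True:
        new = {tuple(gate(x, y) for x, y in zip(f, g)) for f in known for g in known}
        if new <= known:
            return known
        known |= new


print(len(closure(nand)), len(closure(nor)), len(closure(and_)))
```

NAND and NOR each reach all sixteen operations. AND alone reaches only three, which is why it is not called universal.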

The interesting bit now is how to get "truth values" into, or out of, the system. Typically, we put "truth" into the system. We look at the pattern for S7, say, and note that if we arbitrarily declare that "lefty" is "true" and "righty" is "false", then we get the logical behavior that is familiar to us. Because digital computers work with low and high voltages, it is more common to arbitrarily make one of them 0 and the other 1 and then to, again arbitrarily, make the convention that one of them (typically 0) represents false and the other (either 1 or non-zero) true.

This way of looking at the system leads to the idea that truth is an emergent behavior in complex systems. Truth is found in the arrangement of the components, not in the components themselves.

But this isn't the only way of looking at the system.

Let us break down what the internal behavior of each selection process has to accomplish. This behavior will be grouped in order of increasing complexity. To do this, we have to introduce some notation.

~ is the "rotate" operation. ~\ is |; ~| is \.

= tests for equality, e.g. $1 = $2 compares the first input value with the second input value.

? equal-path : unequal-path takes action based on the result of the equal operator.

\ = \ ? | : \ results in |, since \ is equal to \.

\ = | ? | : \ results in \, since \ is not equal to |.

The first group ignores its inputs and always produces the same output:

| Selection | Behavior |
|---|---|
| S0 | \| |
| S15 | \ |

The second group outputs one of the inputs, perhaps with rotation:

| Selection | Behavior |
|---|---|
| S12 | $1 |
| S3 | ~$1 |
| S10 | $2 |
| S5 | ~$2 |

The third group compares the inputs for equality and produces an output for the equal path and another for the unequal path:

| Selection | Behavior |
|---|---|
| S8 | $1 = $2 ? $1 : \| |
| S14 | $1 = $2 ? $1 : \ |
| S1 | $1 = $2 ? ~$1 : \| |
| S7 | $1 = $2 ? ~$1 : \ |
| S4 | $1 = $2 ? \| : $1 |
| S2 | $1 = $2 ? \| : $2 |
| S13 | $1 = $2 ? \ : $1 |
| S11 | $1 = $2 ? \ : $2 |
| S6 | $1 = $2 ? \| : \ |
| S9 | $1 = $2 ? \ : \| |
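The three groups of behaviors can be written down directly and checked against the selection table. This is a sketch under assumptions: `rot` stands for the ~ operation, the `a if a == b else ...` form stands for the `? :` notation, and the column numbering (C0 as the most significant position, lefty counted as 1) is inferred from the table rather than stated in the text.

```python
LEFTY, RIGHTY = "\\", "|"


def rot(x):
    """The ~ operation: turn lefty into righty and righty into lefty."""
    return RIGHTY if x == LEFTY else LEFTY


# One entry per selection rule, transcribed from the three behavior tables.
BEHAVIOR = {
    0: lambda a, b: RIGHTY,
    15: lambda a, b: LEFTY,
    12: lambda a, b: a,
    3: lambda a, b: rot(a),
    10: lambda a, b: b,
    5: lambda a, b: rot(b),
    8: lambda a, b: a if a == b else RIGHTY,
    14: lambda a, b: a if a == b else LEFTY,
    1: lambda a, b: rot(a) if a == b else RIGHTY,
    7: lambda a, b: rot(a) if a == b else LEFTY,
    4: lambda a, b: RIGHTY if a == b else a,
    2: lambda a, b: RIGHTY if a == b else b,
    13: lambda a, b: LEFTY if a == b else a,
    11: lambda a, b: LEFTY if a == b else b,
    6: lambda a, b: RIGHTY if a == b else LEFTY,
    9: lambda a, b: LEFTY if a == b else RIGHTY,
}

# C0..C3 in table order
COMBINATIONS = [(LEFTY, LEFTY), (LEFTY, RIGHTY), (RIGHTY, LEFTY), (RIGHTY, RIGHTY)]


def column(n):
    """Outputs of rule Sn for C0..C3."""
    return tuple(BEHAVIOR[n](a, b) for a, b in COMBINATIONS)


def index_of(col):
    """Recover n from a column, reading lefty as 1 and C0 as the high position."""
    return sum((1 if out == LEFTY else 0) << (3 - i) for i, out in enumerate(col))
```

Every rule needs at most a rotation, an equality test, and a choice between two paths, and `index_of(column(n)) == n` holds for all sixteen.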

It's important to note that whatever the physical layer is doing, if the device performs according to these combination and selection operations then the internal layer is doing these logical operations.

The next step is to figure out how to do the equality operation. We might think to use S9 but this doesn't help. It still requires the arbitrary assignment of "true" to one of the input symbols. The insight comes if we consider the input symbol as an object that already has the desired behavior. Electric charge implements the equality behavior: equal charges repel; unequal charges attract. If the input objects repel then we take the "equal path" and output the required result; if they attract we take the "unequal path" and output that answer.
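As a toy illustration of the paragraph above, equality can be modeled by the sign of the product of two charges, with no symbol ever being declared "true". This is only a sketch: assigning lefty to the positive charge is an arbitrary assumption, and the names `repel`, `rotate`, and `s7` are made up for the example.

```python
# Assumption for illustration: lefty is modeled as +1 and righty as -1.
LEFTY_CHARGE, RIGHTY_CHARGE = +1, -1


def repel(q1: int, q2: int) -> bool:
    """Like charges repel: the product of their signs is positive."""
    return q1 * q2 > 0


def rotate(q: int) -> int:
    """Rotation swaps one object for the other, modeled here as a sign flip."""
    return -q


def s7(q1: int, q2: int) -> int:
    """S7: on the equal (repel) path output the rotated first input, otherwise lefty."""
    return rotate(q1) if repel(q1, q2) else LEFTY_CHARGE
```

The function never asks which charge "means" true. It only asks whether the two inputs repel or attract.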

In this view, "truth" and "falsity" are the behaviors that recognize themselves and disregard "not themselves." And we find that nature provides this behavior of self-identification at a fundamental level. Charge - and its behavior - is not an emergent property in quantum mechanics.

What's interesting is that nature gives us two ways of looking at it and discovering two ways of explaining what we see without, apparently, giving us a clue as to which is the "right" way to look at it. Looking one way, truth is emergent. Looking another way, truth is fundamental. Philosophers might not like this view of truth (that truth is the behavior of self-recognition), but our brains can't develop any other notions of truth apart from this "computational truth."

Hiddenness

How the fact that the behavior of electric charge is symmetric, as well as the physical/logical layer distinction, plays into subjective first person experience is here.

Syntax and Semantics

In natural languages, it is understood that the syntax of a sentence is not sufficient to understand the semantics of the sentence. We can parse "'Twas brillig, and the slithy toves" but, without additional information, cannot discern its meaning. It is common to try to extend this principle to computation to assert that the syntax of computer languages cannot communicate meaning to a machine. But logic gates have syntax and behavior. The syntax of S7 can take many forms: S7, (NAND x y), NOT AND(a, b); even the diagram of S7, above. The behavior associated with the syntax is: $1 = $2 ? ~$1 : \. So the syntax of a computer language communicates behavior to the machine. As will be shown later, meaning is the behavior of association, this is that. So computer syntax communicates behavior, which can include meaning, to the machine.

More elaboration on how philosophy misunderstands computation is here.

Notes

An earlier derivation of this same result using the Lambda Calculus is here. But this demonstration, while inspired by the Lambda Calculus, doesn't require the heavier framework of the calculus.

[1] An "AND" gate has the behavior of S8.

[2] There is a theory in philosophy known as "multiple realizability" which argues that the ability to implement "mental properties" in multiple ways means that "mental properties" cannot be reduced to physical states. As this post demonstrates, this is clearly false.

[3] "A Survey of Automated Theorem Proving", pg. 27.

Quotes

We know that consciousness and infinity exist "inside" our minds. But we have no objective way of knowing if they exist "outside" of our minds. There is no objective measure for consciousness; there is no objective measure for infinity (there are no rulers of infinite length, or clocks of endless time, for example).

The theist claims that there exists a mind that is both conscious and infinite and calls this mind God.

The theologian or philosopher who attempts to prove the objective existence of God is thereby engaging in a futile effort, as is the effort to disprove said existence.

The atheist who asks for objective proof of God is making a category error.

-- wrf3

The Inner Mind

[Similar introductory presentation here]

Philosophy of mind has the problem of "mental privacy" or "the problem of first-person perspective." It's a problem because philosophers can't account for how the first person view of consciousness might be possible. The answer has been known for the last 100 years or so and has a very simple physical explanation. We know how to build a system that has a private first person experience.

Let us use two distinguishable physical objects:

The shapes of these objects are arbitrary and were chosen so as not to represent something that might have meaning due to familiarity. While they have rotational symmetry, this is for convenience for something that is not related to the problem of mental privacy.

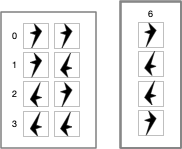

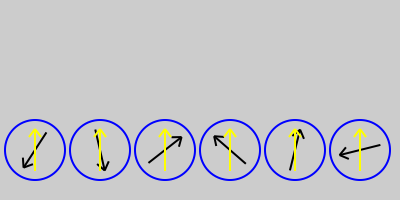

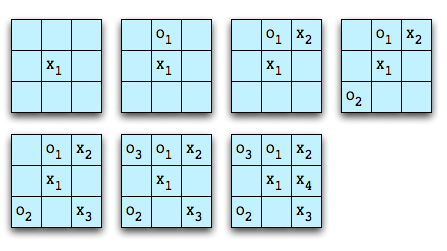

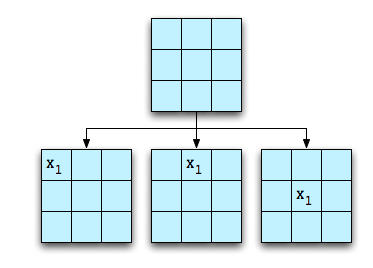

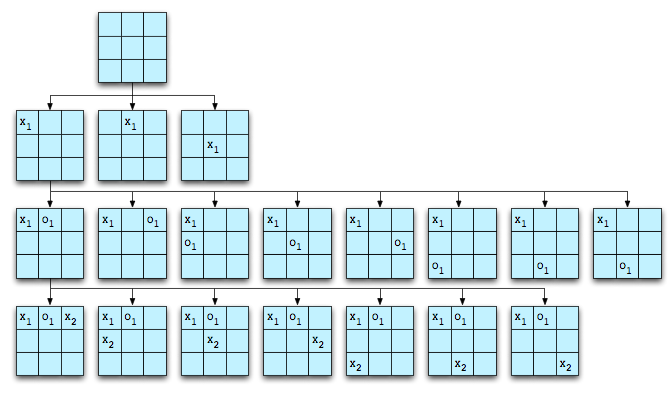

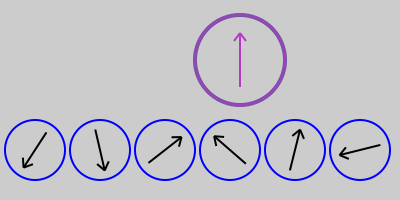

Next, let us build a physical device that takes two of these objects as input and selects one for output. Parentheses will be used to group the inputs and an arrow will terminate with the selected output. The device will operate according to these four rules:

A network of these devices can be built such that the output of one device can be used as input to another device.

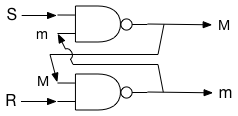

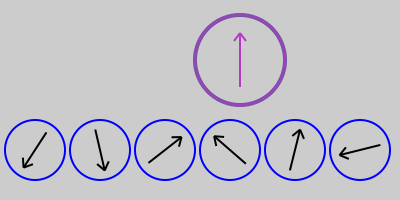

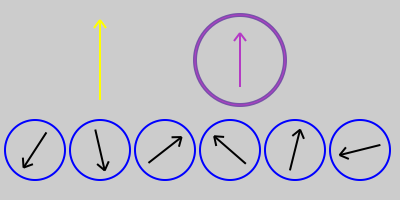

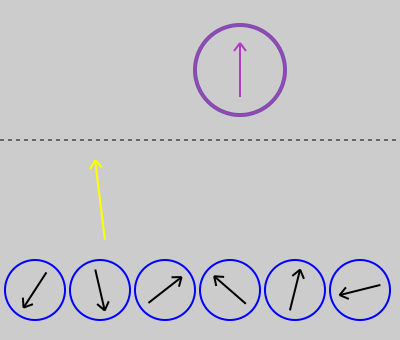

It is physically impossible to tell whether this device is acting as a NAND gate or as a NOR gate. We are taught that a NAND gate maps (T T)→F, (T F)→T, (F T)→T, and (F F)→T; (1 1)→0, (1 0)→1, (0 1)→1, (0 0)→1 and that a NOR gate maps (T T)→F, (T F)→F, (F T)→F, (F F)→T; (1 1)→0, (1 0)→0, (0 1)→0, (0 0)→1. But these traditional mappings obscure the fact that the symbols are arbitrary. The only thing that matters is the behavior of the symbols in a device and the network constructed from the devices. Because you cannot tell via external inspection what the device is doing you cannot tell via external inspection what the network of devices is doing. All an external observer sees is the passage of arbitrary objects through a network.1
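The ambiguity described above can be made concrete. In the sketch below, the `DEVICE` table is a hypothetical stand-in for the four rules (which appear only as images in the post). It uses the backslash and bar glyphs and the S7 pattern from "The Physical Ground of Logic" as an assumption. The same table reads as NAND when one object is labeled "true" and as NOR when the other is.

```python
LEFTY, RIGHTY = "\\", "|"

# Hypothetical device: an assumed rule table with the same shape as S7.
DEVICE = {
    (LEFTY, LEFTY): RIGHTY,
    (LEFTY, RIGHTY): LEFTY,
    (RIGHTY, LEFTY): LEFTY,
    (RIGHTY, RIGHTY): LEFTY,
}


def interpret(device, true_symbol):
    """Read the device as a truth table, given which object the observer calls 'true'."""
    return {
        (a == true_symbol, b == true_symbol): out == true_symbol
        for (a, b), out in device.items()
    }


BOOLS = (True, False)
NAND = {(a, b): not (a and b) for a in BOOLS for b in BOOLS}
NOR = {(a, b): not (a or b) for a in BOOLS for b in BOOLS}

print(interpret(DEVICE, LEFTY) == NAND)   # labeling lefty as "true"
print(interpret(DEVICE, RIGHTY) == NOR)   # labeling righty as "true"
```

Nothing about `DEVICE` changes between the two readings. Only the observer's labeling does, which is the sense in which the gate's identity is not visible from outside.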

To further complicate the situation, computation uses the symbol/value distinction: this symbol has that value. But values are just elements of the alphabet and so are identical to symbols when viewed externally. That means that to fully understand what a network is doing, you have to discern whether a symbol is being used as a symbol or as a value. But this requires inner knowledge of what the network is doing, which leads to infinite regress. You have to know what the network is doing on the inside to understand what the network is doing from the outside.

Or the network has to tell you what it's doing. NAND and NOR gates are universal logic gates which can be used to construct a "universal" computing device.2

So we can construct a device which can respond to the question "what logic gate was used in your construction?"

Depending on how it was constructed, its response might be:

- "I am made of NAND gates."

- "I am made of NOR gates."

- "I am made of NAND and NOR gates. My designer wasn't consistent throughout."

- "I don't know."

- "What's a logic gate?"

- "For the answer, deposit 500 bitcoin in my wallet."

Before the output device is connected to the system so that the answer can be communicated, there is no way for an external observer to know what the answer will be.

All an external observer has to go on is what the device communicates to that observer, whether by speech or some other behavior.3

And even when the output device is active and the observer receives the message, the observer cannot tell whether the answer given corresponds to how the system was actually constructed.

So extrospection cannot reveal what is happening "inside" the circuit. But note that introspection cannot reveal what is happening "outside" the circuit. The observer doesn't know what the circuit is doing; the subject doesn't know how it was built. It may think it does, but it has no way to tell.

[1] Technology has advanced to the point where brain signals can be interpreted by machine, e.g. here. But this is because these machines are trained by matching a person's speech to the corresponding brain signals. Without this training step, the device wouldn't work. Because our brains are similar, it's likely possible that a speaking person could train such a machine for a non-vocal person.

[2] I put universal in quotes because a universal Turing machine requires infinite memory and this would require an infinite number of gates. Our brains don't have an infinite number of neurons.

[3] See also Quus, Redux, since this has direct bearing on yet another problem that confounds philosophers, but not engineers.

On the Knowledge of God

[updated 11/21/2020 to change "all side" to "all sides"]

[updated 7/20/2022 to add quotes from Markides and Oppy]

I'm just this guy, you know?1 One of the possible recent mistakes I have made is getting involved with Twitter, in particular, with some of the apologists for theism and for atheism whose goal is to prove by reason that God does, or does not, exist. Over time, having examined the arguments both for and against, I have come to the conclusion that neither side has any arguments that aren't in some way fundamentally flawed. One day, I will make this case in writing (I still have much more preparation to do first). Still, the failure of one argument doesn't automatically prove the opposite case. So the failure of the arguments on all sides does not mean that a good argument doesn't exist. It just means we haven't found it. Yet, once you see the structure of these arguments, their commonalities, and the problems with them, you begin to wonder if it isn't a hopeless enterprise in the first place. Hence my proposed "Spock-Stoddard Test" and "The Zeroth Commandment." To be sure, these are not based on rigorous proof, but merely on informed guesswork. But they encapsulate the notion that whether or not one believes or disbelieves in God is a logically free choice. It is primal. It is not entailed by other considerations. You either do, or you don't, for no other reason than that you do, or you don't. Post-hoc rationalizations don't count.

When one is, as it were, the "lone voice crying in the desert" with an opinion that appears to be relatively rare, at least in the circles I run in, it's gratifying to find others who have come to the same conclusion. Clearly, group cohesion doesn't make my position true or false, but it does make it less lonely. Herewith are a few quotes that I've come across along the way.

He is not in the business of giving them arguments that will prove he has some derivative right to their attention; he is only inviting them to believe. This is the hard stone in the gracious peach of his Good News: salvation is not by works, be they physical, intellectual, moral, or spiritual; it is strictly by faith in him. ... Jesus obviously does not answer many questions from you or me. Which is why apologetics - the branch of theology that seeks to argue for the justifiability of God's words and deeds - is always such a questionable enterprise. Jesus just doesn't argue. ... He does not reach out to convince us; he simply stands there in all the attracting/repelling fullness of his exousia and dares us to believe. -- Robert Farrar Capon, Parables of Judgment

Jesus did not, indeed, support His theism by argument; He did not provide in advance answers to the Kantian attack upon the theistic proofs. -- J. Gresham Machen, Christianity and Liberalism

Like probably nothing else, all authentic knowledge of God is participatory knowledge. I must say this directly and clearly because it is a very different way of knowing reality—and it should be the unique, open-horizoned gift of people of faith. But we ourselves have almost entirely lost this way of knowing, ever since the food fights of the Reformation and the rationalism of the Enlightenment, leading to fundamentalism on the Right and atheism or agnosticism on the Left. Neither of these know how to know! We have sacrificed our unique telescope for a very inadequate microscope.

...

In other words, God (and uniquely the Trinity) cannot be known as we know any other object—such as a machine, an objective idea, or a tree—which we are able to “objectify.” We look at objects, and we judge them from a distance through our normal intelligence, parsing out their varying parts, separating this from that, presuming that to understand the parts is always to be able to understand the whole. But divine things can never be objectified in this way; they can only be “subjectified” by becoming one with them! When neither yourself nor the other is treated as a mere object, but both rest in an I-Thou of mutual admiration, you have spiritual knowing. Some of us call this contemplative knowing. -- Richard Rohr, The Divine Dance

Reformed theology regards the existence of God as an entirely reasonable assumption, it does not claim the ability to demonstrate this by rational argumentation. Dr. Kuyper speaks as follows of the attempt to do this: “The attempt to prove God’s existence is either useless or unsuccessful. -- Louis Berkhof, Systematic Theology

Today, it is generally agreed that there can be no logical proof either way for the existence of God, and that this is purely a matter of faith. -- Marcus Weeks, Philosophy in Minutes

But the way to know God, Father Maximos would say repeatedly, is neither through philosophy nor through experimental science but through systematic methods of spiritual practice that could open us up to the Grace of the Holy Spirit. Only then can we have a taste of the Divine, a firsthand, experiential knowledge of the Creator. -- Kyriacos C. Markides, The Mountain of Silence

I think that such theists and atheists are mistaken. While they may be entirely within their rights to suppose that the arguments that they defend are sound, I do not think that they have any reason to suppose that their arguments are rationally compelling... -- Graham Oppy, Arguing About Gods

One final quote is appropriate:

Blessed are the pure in heart, for they will see God. -- Matthew 5:8, NRSV

[1] Said of Zaphod Beeblebrox, "The Restaurant at the End of the Universe"

Evidence for Christianity

At some point, it pays to stop beating your head against a wall and see if a fresh approach doesn't yield a new way to look at the problem.

The theory of computation shows that computation is certain behaviors on meaningless symbols (cf. the Lambda Calculus). We know, from the Lambda Calculus, how to derive logic. Once we have logic, we can derive meaning. From there, we can derive math, morality, and everything else for a human level intelligence. One can replace the meaningless symbols with physical atoms and show how neurons implement computation. And we know from computability theory, that how the device is implemented isn't what's important - it's the behavior.

We know that human level sentient creatures have a sense of self. It is something we are directly aware of, but outside observers cannot measure it objectively. Our thoughts, our ego, are "inside" the swirling atoms in our brains. An outside observer can only see the behavior caused by the swirl of atoms. Certainly, we can hook electrodes up to brains and measure electrical activity. But we cannot know what that activity corresponds to internally, unless the test subject tells us. It is only because we share common brain structures that we can try to predict which activity has what meaning in others.

The swirl of atoms in our brains, the repeated combination and selection of meaningless symbols, is a microcosm of the swirling of atoms in the universe. If our brains are localized intelligence, the case can be made that the swirl of atoms in the universe is a global intelligence. But this is a subjective argument. This is why the Turing Test is conducted where an observer cannot see the subject. Seeing the human form biases us to conclude human intelligence. But for a non-human form, an observer has to recognize sentience from behavior. Why might that not be the case?

First, because there is a lot of randomness in the behavior of the universe and it is a common idea that randomness is ateleological. It lacks meaning and purpose. Unfortunately, this philosophical stance is without merit, since randomness can be used to achieve determined ends. The fact of randomness simply isn't enough to move the needle between purpose/purposelessness and meaning/meaninglessness. One could argue that human intelligence contains a great deal of randomness. But an observer looking from the outside cannot objectively see which is the case.

Second, because the behavior of the universe doesn't always comport with our desires. Our standards of good and evil are not the universe's standards of good and evil. If one holds to an objective morality, one will miss the possible sentience of someone who behaves very differently from ourselves.

Third, we want to think that there is only one objectively right way to view the universe. But the universe doesn't make that easy. We have the built-in knowledge of infinity (an endless and therefore unmeasurable process). There is no consensus whether infinity is "real" or "actual" and, since we can't measure it, I don't think consensus will ever be achieved. There is also the question of whether matter produces and moves the mind or mind produces and moves matter. Neither side has achieved consensus.

Into this mix comes Christianity which states that there is an extra-human intelligence "inside" the existence and motion of the universe, who has a sense of right and wrong that is different from ours, where what has been made is a strong indication of that sentience, and who calls people to itself. Because sentience is a subjective measurement, it must be made on faith - which is one of the bedrock tenets of Christianity. This picture of local intelligences inside a bigger intelligence is consistent with "in Him we live and move and have our being." Yet in spite of this connectedness, we remain disconnected, not recognizing our state. Which is yet another teaching of Christianity.

Much more can, and must, be said. But the immateriality and incommensurability of infinity; the subjectivity of sentience and morality; and the ability to build thinking things out of dirt are all a part of both Christianity and natural theology.

Quus, Redux

Philip Goff explores Kripke's quus function (written quss in this post), defined as:

In English, if the two inputs are both less than 100, the inputs are added, otherwise the result is 5.

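```lisp
(defparameter N 100)   ; threshold below which quss behaves like addition

(defun quss (a b)
  ;; Add the inputs when both are below N; otherwise return 5.
  (if (and (< a N) (< b N))
      (+ a b)
      5))
```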

Goff then claims:

Rather, it’s indeterminate whether it’s adding or quadding.

This statement rests on some unstated assumptions. The calculator is a finite state machine. For simplicity, suppose the calculator has ten digit keys, a function key (labelled "?" for mystery function), and an "=" key for the result. There is a three character screen, so any two numbers of up to three digits can be "quadded". The calculator therefore accepts 1000x1000, or one million, different input pairs. A larger finite state machine can query the calculator for all one million possible inputs then collect and analyze the results. Given the definition of quss, the analyzer can then feed all one million possible inputs to quss, and show that the output of quss matches the output of the calculator.
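
The exhaustive check described above can be sketched in Python. This is only an illustration under stated assumptions: `calculator` is a hypothetical stand-in for the black-box "?" key (here it happens to be wired as quss), and `same_behavior` plays the role of the larger analyzing machine. None of these names come from Goff or Kripke.

```python
N = 100  # threshold from the quss definition above


def quss(a: int, b: int, n: int = N) -> int:
    """Add when both inputs are below n; otherwise return 5."""
    return a + b if a < n and b < n else 5


def add(a: int, b: int) -> int:
    """Ordinary addition, for comparison."""
    return a + b


def calculator(a: int, b: int) -> int:
    """Hypothetical black box standing in for the '?' key.

    Assumption: for this sketch it is wired to behave like quss.
    """
    return a + b if a < 100 and b < 100 else 5


def same_behavior(device, candidate, limit: int = 1000) -> bool:
    """Query both functions on every input pair a three digit screen allows."""
    return all(
        device(a, b) == candidate(a, b)
        for a in range(limit)
        for b in range(limit)
    )


if __name__ == "__main__":
    print("matches quss:", same_behavior(calculator, quss))
    print("matches add: ", same_behavior(calculator, add))
```

Because the input space is finite, the question "adding or quadding?" is settled by enumeration for this device; nothing is left indeterminate within the range it can actually handle.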

Goff then tries to extend this result by making N larger than what the calculator can "handle". But this attempt fails, because if the calculator cannot handle bigN, then the conditionals (< a bigN) and (< b bigN) cannot be expressed, so the calculator can't implement quss on bigN. Since the function cannot even be implemented with bigN, it's pointless to ask what it's doing. Questions can only be asked about what the actual implementation is doing; not what an imagined unimplementable implementation is doing.

Goff then tries to apply this to brains and this is where the sleight of hand occurs. The supposed dichotomy between brains and calculators is that brains can know they are adding or quadding with numbers that are too big for the brain to handle. Therefore, brains are not calculators.

The sleight of hand is that our brains can work with the descriptions of the behavior, while most calculators are built with only the behavior. With calculators, and much software, the descriptions are stripped away so that only the behavior remains. But there exists software that works with descriptions to generate behavior. This technique is known as "symbolic computation". Programs such as Maxima, Mathematica, and Maple can know that they are adding or quadding because they can work from the symbolic description of the behavior. Like humans, they deal with short descriptions of large things. [1] We can't write out all of the digits in the number 10^120. But because we can manipulate short descriptions of big things, we can answer what quss would do with an input of 10^120 if bigN were 10^80. 10^80 is less than 10^120, so the input is not below bigN and quss would return 5. Symbolic computation would give the same answer. But if we tried to do that with the actual numbers, we couldn't. When the thing described doesn't fit, it can't be worked on. Or, if the attempt is made, the old programming adage, Garbage In - Garbage Out, applies to humans and machines alike.
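
As a rough sketch of working from descriptions rather than digits, the Python below represents a power of ten only by its exponent and never expands it. The class `Pow10` and the function `quss_symbolic` are hypothetical names invented for this illustration; they are not how Maxima, Mathematica, or Maple work internally. (Python's own integers could hold these particular values, so the point here is the technique, not the necessity.)

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pow10:
    """A short description of the number 10**exponent; digits are never written out."""
    exponent: int

    def less_than(self, other: "Pow10") -> bool:
        # 10**a < 10**b exactly when a < b, so comparing descriptions suffices.
        return self.exponent < other.exponent


def quss_symbolic(a: Pow10, b: Pow10, big_n: Pow10):
    """Apply the quss rule to descriptions instead of expanded numbers."""
    if a.less_than(big_n) and b.less_than(big_n):
        # Return an unevaluated description of the sum rather than computing it.
        return ("sum", a, b)
    return 5


if __name__ == "__main__":
    big_n = Pow10(80)
    print(quss_symbolic(Pow10(120), Pow10(3), big_n))  # input not below bigN
    print(quss_symbolic(Pow10(2), Pow10(3), big_n))    # both inputs below bigN
```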

[1] We deal with infinity via short descriptions, e.g. "10 goto 10". We don't actually try to evaluate this, because we know we would get stuck if we did. We tag it "don't evaluate". If we actually need a result with these kinds of objects, we get rid of the infinity by various finite techniques.

[2] This post title refers to a prior brief mention of quss here. That post suggested looking at the wiring of a device to determine what it does. In this post, we look at the behavior of the device across all of its inputs to determine what it does. But we only do that because we don't embed a rich description of each behavior in most devices. If we did, we could simply ask the device what it is doing. Then, just as with people, we'd have to correlate their behavior with their description of their behavior to see if they are acting as advertised.

Truth, Redux

Logic is mechanical operations on distinct objects. At its simplest, logic is the selection of one object from a set of two (see "The road to logic", or "Boolean Logic"). Consider the logic operation "equivalence". If the two input objects are the same, the output is the first symbol in the 0th row ("lefty"). If the two input objects are different, the output is the first symbol in the 3rd row ("righty").

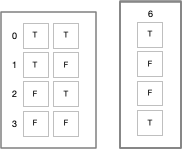

If this were a class in logic, the meaningless symbols "lefty" and "righty" would be replaced by "true" and "false".

But we can't do this. Yet. We have to show how to go from the meaningless symbols "lefty" and "righty" to the meaningful symbols "T" and "F". The lambda calculus shows us how. The lambda calculus describes a universal computing device using an alphabet of meaningless symbols and a set of symbols that describe behaviors. And this is just what we need, because we live in a universe where atoms do things. "T" and "F" need to be symbols that stand for behaviors.

We look at these symbols, we recognize that they are distinct, and we see how to combine them in ways that make sense to our intuitions. But we don't know we do it. And that's because we're "outside" these systems of symbols looking in.

Put yourself inside the system and ask, "what behaviors are needed to produce these results?" For this particular logic operation, the behavior is "if the other symbol is me, output T, otherwise output F". So you need a behavior where a symbol can positively recognize itself and negatively recognize the other symbol. Note that the behavior of T is symmetric with F. "T positively recognizes T and negatively recognizes F. F positively recognizes F and negatively recognizes T." You could swap T and F in the output column if desired. But once this arbitrary choice is made, it fixes the behavior of the other 15 logic combinations.
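
A small Python sketch can make the counting concrete. It enumerates every two-input operation over the two symbols, confirms there are sixteen, and shows that swapping T and F in the output column of equivalence gives a different member of the same family. The variable names are assumptions made for this illustration only.

```python
from itertools import product

SYMBOLS = ("T", "F")
INPUT_PAIRS = list(product(SYMBOLS, repeat=2))  # the four rows of a truth table


def equivalence(a: str, b: str) -> str:
    """'If the other symbol is me, output T, otherwise output F.'"""
    return "T" if a == b else "F"


# Every possible output column over the four rows: 2**4 = 16 operations.
ALL_TABLES = [dict(zip(INPUT_PAIRS, outputs)) for outputs in product(SYMBOLS, repeat=4)]

equiv_table = {pair: equivalence(*pair) for pair in INPUT_PAIRS}

# Swapping T and F in the output column yields another of the sixteen tables
# (the one usually called exclusive-or).
swapped_table = {pair: ("F" if out == "T" else "T") for pair, out in equiv_table.items()}

if __name__ == "__main__":
    print(len(ALL_TABLES))
    for pair in INPUT_PAIRS:
        print(pair, equiv_table[pair], swapped_table[pair])
```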

In addition, the lambda calculus defines true and false as behaviors. [1] It just does it at a higher level of abstraction which obscures the lower level.

In any case, nature gives us this behavior of recognition with electric charge. And with this ability to distinguish between two distinct things, we can construct systems that can reason.

[1] Electric Charge, Truth, and Self-Awareness. This was an earlier attempt to say what this post says. YMMV.

On Rasmussen's "Against non-reductive physicalism"

It should be without controversy that there are more non-physical things than physical things. By some estimates there are 10^80 atoms in the universe. There are an unlimited number of numbers. We can't measure all of those numbers, since we can't put them in one-to-one correspondence with the "stuff" of the universe.

The first thing to note is that if the number of things argues against the physicality of mental states, then mental properties aren't needed, because there are more atoms in the universe than there are in the brain. If the sheer number of things argues against physical mental states then this would be sufficient to prove the claim. But as anyone who plays the piano knows, 88 keys can produce a conceptually infinite amount of music. And one need not postulate non-physicality to do so. Just hook a piano up to random number sources that vary the notes, tempo, and volume. The resulting music may not be melodious, but it will be unique.
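
The random piano can be sketched in a few lines of Python. This is a toy under loudly stated assumptions: keys are simply numbered 1 to 88, durations are in seconds, and volume uses a 1 to 127 scale borrowed from MIDI convention. No sound is produced; the point is only that a small finite keyboard yields an enormous space of sequences.

```python
import random

KEYS = range(1, 89)  # the 88 keys, numbered 1..88 (an assumption of this sketch)


def random_phrase(length: int, seed=None):
    """Return `length` notes as (key, duration_seconds, volume) tuples."""
    rng = random.Random(seed)
    return [
        (rng.choice(KEYS), rng.uniform(0.1, 2.0), rng.randint(1, 127))
        for _ in range(length)
    ]


def count_key_sequences(length: int) -> int:
    """Number of distinct key sequences of a given length, ignoring tempo and volume."""
    return len(KEYS) ** length


if __name__ == "__main__":
    for note in random_phrase(4, seed=1):
        print(note)
    print(count_key_sequences(16))  # already a number with over thirty digits
```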

Rasmussen presents a "construction principle" which states "for any properties, the xs, there is a mental property of thinking that the xs are physical." Here the confusion between mental property and mental state happens. The argument sneaks in the desired conclusion. After all, if this principle were true, then the counting argument wouldn't be needed. Clearly, there is a mental state when I think "my HomePod is playing Cat Stevens". But whether that mental state is physical or immaterial is what has to be shown. By saying it's a mental property then the assertion is mental states are non-physical and the rest of the proof isn't necessary. It's just proof by assertion.

Rasmussen then gives what he calls "a principle of uniformity" which says, "The divide between any two mental properties is narrower than the divide between physicality and non-physicality." To demonstrate this difference between physical and non-physical, he gives the example of a tower of Lego blocks. His claim is that as Lego block is stacked on Lego block, both the Lego tower and the shape of the Lego tower remain physical. He asserts, "if (say) being a stack of n Lego blocks is a physical property, then clearly so is being a stack of n+1 Lego blocks, for any n." This is clearly false. A Lego tower of 10^90 pieces is non-physical. There aren't enough physical particles in the universe for constructing such a tower. Does the shape of the Lego tower remain physical? This is a more interesting question. A shape is a description of an arrangement of stuff. The shape of an imaginary rectangle and the shape of a physical rectangle are the same. Are descriptions physical or non-physical? To assert one or the other is to beg the question of the nature of mental states.

So we have a counting argument that isn't needed, a construction principle that begs the question, and a principle of uniformity that doesn't match experience.

Having failed to show that mental states are non-physical, in section 3 Rasmussen tries to show that mental states aren't grounded in physicality. The bulk of the proof is in his step B2: "if no member of MPROPERTIES entails any other, then some mental properties lack a physical grounding." He turns the counting argument around to claim that there is a problem of too few physical grounds for mental states. This claim is easily dismissed. First, consider words. The estimated vocabulary of an average adult speaker of English is 35,000 words. There is plenty of storage in the human brain for this. But if we don't know a word, we go to the dictionary to get a new definition and place that definition in short term reusable storage. If we use it enough so that it goes into long term storage, we may forget something to make room for it.

In the case of "infinite" things, we think of them in terms of a short fixed description of behavior, so we don't need a lot of storage for infinite things. The computer statement

10 goto 10

is a short description of an endless process. We don't actually think of the entire infinite thing, but rather finite descriptions of the behavior behind the process. [1]
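
The same idea, a short finite description standing in for an endless process, can be sketched in Python with a generator. The function name `ten_goto_ten` is made up for this illustration. The loop never ends on its own, yet the description occupies a handful of lines and we only ever evaluate as much of it as we ask for.

```python
from itertools import islice


def ten_goto_ten():
    """A finite description of an endless process: yields a step count forever."""
    step = 0
    while True:  # the Python analogue of "10 goto 10"
        yield step
        step += 1


if __name__ == "__main__":
    # Take only the first five steps; the rest is never evaluated.
    print(list(islice(ten_goto_ten(), 5)))
```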

Rasmussen's proof fails because the claim that there needs to be a unique physical property for each mental state doesn't stand. Much of our physical memory is reusable and we have access to external storage (books, videos, other people). In fact, as I wrote in 2015, "man is the animal that uses external storage". [2]

Since the final sections 4 and 5 aren't supported by 1, 2, and 3, they will be skipped over.

[1] See "Lazy Evaluation" for some ways to deal with infinite sequences with limited storage.

[2] "Man is the Animal...". Almost seven years later, this statement is still unique to me. Don't know why. It's obvious "to the most casual observer."

The End of Philosophy

While Russell was working on his Principia, Kurt Gödel's Incompleteness Theorems came along and proved that a self-describing system (i.e. a system that is sufficiently complex to express the basic arithmetic of the natural numbers) cannot simultaneously be consistent and complete. If it's consistent, it's not complete; if it's complete, it isn't consistent.

This ended Russell's lifelong dream. While his Principia is a tremendous intellectual achievement, it did not - and could not - achieve the goals Russell had for his work.

If nature is self-describing (as I think the posts on Natural Theology will show, once they're organized and edited for clarity), then philosophy suffers the same problem as mathematics. Empirically, the universe appears to be consistent. If we put a stake in that position, then all descriptions of nature will be incomplete. There will be no final "theory of everything."

If that's so then, observationally, there are questions for which we cannot know the answers. One such question is on the ontological nature of endlessness (infinity). Is endlessness emergent from a finite universe, or is the universe infinite and what we perceive as reality a quantization of this continuity? It's interesting that the theory of relativity is based on an infinitely continuous picture of nature. Quantum mechanics is based on a discrete picture of nature. String theory tries to split the difference by postulating tiny vibrating strings, but if nature has continuous/discontinuous duality like matter has wave/particle duality, then string theory may, like Russell's Principia, fall short of its intended goal.

Another such question is the nature of randomness. Does randomness indicate purposelessness (as the naturalists claim) or does it indicate hidden purpose (as the theists claim)? The value of 𝜋 can be computed by a deterministic formula. It can also be calculated via Monte Carlo methods (see Buffon's Needle). Therefore, the use of randomness does not preclude agency. Nor does it establish it.
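
A minimal Python sketch puts the two routes to 𝜋 side by side. Assumptions to note: the deterministic route uses the Leibniz series, and the random route uses the simpler "darts in a quarter circle" Monte Carlo method rather than Buffon's Needle itself; both function names are invented for this example.

```python
import math
import random


def pi_leibniz(terms: int) -> float:
    """Deterministic: 4 * (1 - 1/3 + 1/5 - 1/7 + ...)."""
    return 4 * sum((-1) ** k / (2 * k + 1) for k in range(terms))


def pi_monte_carlo(samples: int, seed: int = 0) -> float:
    """Random: fraction of uniform points in the unit square that land
    inside the quarter circle of radius 1, times 4."""
    rng = random.Random(seed)
    hits = sum(
        1 for _ in range(samples)
        if rng.random() ** 2 + rng.random() ** 2 <= 1.0
    )
    return 4 * hits / samples


if __name__ == "__main__":
    print("deterministic error:", abs(pi_leibniz(100_000) - math.pi))
    print("monte carlo error:  ", abs(pi_monte_carlo(200_000) - math.pi))
```

Both procedures aim at the same fixed target; one reaches it without any randomness and the other only by means of it.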

Given that these questions cannot be answered, I propose "Newton's" Third Law of Metaphysics: there are some fundamental questions for which for every answer there is an equal and opposite answer. A corollary to this is that the question of the existence/non-existence of God is in this class. Certainly, the inability over thousands of years of arguing to establish a decisive conclusion is evidence of this principle. Or it may be that the right insight hasn't yet been achieved. An answer which is also evidence of this principle.

I think Gödel has done to philosophy what he did to math. Philosophy won't end, since math didn't end. But it will put limits on what philosophy can say about certain things. I said as much in the post "Epistemology and Hitchens" but that was before I thought that the idea that nature is self-describing could be demonstrated from the ground up.

On Self

While I agree with this sentiment (after all, Psalm 57:1 says that God has wings), Dr. Tuggy has also used this idea to defend Unitarianism. More specifically, Dr. Tuggy lists 20 self-evident principles that he uses to guide his reading of Scripture.

While this post is not meant to focus on the debate between Trinitarians and Unitarians, I do want to use Dr. Tuggy's tweet as a springboard to consider if his "self-evident" truths are universally self-evident. I think there is reason to believe that they may not be.

Not long after we are born, we start to distinguish ourselves from our surroundings. We can feel a difference between ourselves and our environment. Place your hand on a table and run your finger from your hand to the table and notice the boundary your senses tell you is there. Look at your hand and notice the boundary between your hand and the table. Lift your hand from the table and notice that your hand moves but the table does not. Look in a mirror and notice the difference between you and your environment. All of these sense data tell us that we are distinct self-contained objects.

But our senses also tell us that the table on which our hand rests is solid. In reality, the table is mostly empty space. What we perceive as solidness is the repulsion of the electric field from the electrons in the table against the like-charged electrons in our hand. If we perceive a location for ourselves, we generally place it inside our skulls. To nature, there is no inside. Billions of neutrinos pass through a square centimeter every second. The experimental particle physicist, Tommaso Dorigo, speculates:

... a few energetic muons are crossing your brain every second, possibly activating some of your neurons by the released energy. Is that a source of apparently untriggered thoughts? Maybe.

4gravitons writes:

This is Quantum Field Theory, the universe of ripples. Democritus said that in truth there are only atoms and the void, but he was wrong. There are no atoms. There is only the void. It ripples and shimmers, and each of us lives as a collection of whirlpools, skimming the surface, seeming concrete and real and vital…until the ripples dissolve, and a new pattern comes.

From a physical view, what we are, are ripples in the quantum pond, with our "selves" limited to local interaction by an inverse square law. From a Biblical view,

‘In him we live and move and have our being’

— Acts 17:28, NRSV

I suspect there is no "inverse square law" with spirit so what separates us from one another is a... mystery. [1]

[1] Almost immediately after hitting "publish", I started kicking myself. Scripture says what separates us, from God and from each other:

Rather, your iniquities have been barriers between you and your God ...

— Isa. 59:2, NRSV

Ought From Is

To get "ought" from "is", take an "is" and move it into the future as a goal.

The Universe Inside

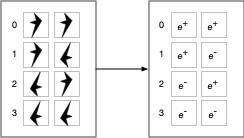

Let us replace distinguishable objects with objects that distinguish themselves:

Then the physical operation that determines if two elements are equal is:

As shown in the previous post, e+ (the positron and its behavior) and e- (the electron and its behavior) are the behaviors assigned to the labels "true" and "false". One could swap e+ and e-. The physical system would still exhibit consistent logical behavior. In any case, this physical operation answers the question "are we the same?", "Is this me?", because these fundamental particles are self-identifying.

From this we see that logical behavior - the selection of one item from a set of two - is fully determined behavior.

In contrast to logic, nature features fully undetermined behavior where a selection is made from a group at random. The double-slit experiment shows the random behavior of light as it travels through a barrier with two slits and lands somewhere on a detector.

In between these two options, there is partially determined, or goal directed behavior where selections are made from a set of choices that lead to a desired state. It is a mixture of determined and undetermined behavior. This is where the problem of teleology comes in. To us, moving toward a goal state indicates purpose. But what if the goal is chosen at random? Another complication is that, while random events are unpredictable, sequences of random events have predictable behavior. Over time, the average of a sequence of random events will tend to its expected value. We are faced with having to decide if randomness indicates no purpose or hidden purpose, agency or no agency.
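
The predictability of long random sequences can be illustrated with a fair six-sided die, whose expected value is 3.5. This Python sketch is an assumption-laden toy (the function name and the seeded generator are chosen for reproducibility, not taken from any earlier post): each roll is unpredictable, but the running average settles near 3.5 as the number of rolls grows.

```python
import random


def average_roll(rolls: int, seed: int = 0) -> float:
    """Average of `rolls` throws of a fair six-sided die."""
    rng = random.Random(seed)
    return sum(rng.randint(1, 6) for _ in range(rolls)) / rolls


if __name__ == "__main__":
    for rolls in (10, 1_000, 100_000):
        print(rolls, average_roll(rolls))
```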

In this post, from 2012, I made a claim about a relationship between software and hardware. In the post, "On the Undecidability of Materialism vs. Idealism", I presented an argument using the Lambda Calculus to show how software and hardware are so entwined that you can't physically take them apart. This low-level view of nature reinforces these ideas. All logical operations are physical (all software is hardware). Not all physical operations are logical (not all hardware is software). Computing is behavior and the behavior of the elementary particles cannot be separated from the particles themselves. If we're going to choose between idealism and physicalism, it must be based on a coin flip. [2]

If computers are built of logic "circuits" then computer behavior ought to be fully determined. But when we add peripherals to the system and inject random behavior (either in the program itself, or from external sources), we get non-logical behavior in addition to logic. If a computer is a microcosm of the brain, the brain is a microcosm of the universe.

[1] Quarks have fractional charge, but quarks aren't found outside the atomic nucleus. The strong nuclear force keeps them together. Electrons and positrons are elementary particles.

[2] Dualism might be the only remaining choice, but I think that dualism can't be right. That's a post for another day.

Electric Charge, Truth, and Self-Awareness

What is truth?

-- Pilate, John 18:38, NRSV

To say of what is that it is not, or of what is not that it is, is false, while to say of what is that it is, and of what is not that it is not, is true.

— Aristotle

"The truth points to itself."

"What?"

"The truth points to itself."

"I do not understand."

"You will."

-- Kosh and Delenn in Babylon 5: "In the Beginning"

The one quote that I want, but can no longer find, is to the effect that philosophers don't really know what truth is. Introductions to the philosophy of truth (e.g. here) make for fascinating reading. I claim that the philosophers can't reach agreement because they aren't trying to build a self-aware creature. Were they to attempt it, they might reason something like the following.

Aristotle's definition of truth is clumsy. It simplifies to:

If true then [say] true is true.

In hindsight, this isn't a totally terrible definition. It combines the behavior (say "true") with the behavior ("truth leads to truth"). But it's still circular. "True ... is true" doesn't tell us what truth is, so it's useless for building something.

Still, this formulation anticipated a definition of truth in computer programming languages by some 2,300 years:

if true then truth-action else false-action

"True" is a special symbol that is given the special meaning of truth. In Lisp, t is true and nil is false. In FORTRAN, .true. is true and .false. is false. Python uses True and False. And so on. But this still doesn't tell you what truth is other than it is a special symbol that can be used for making selections.

Looking at how "true" is defined in the Lambda Calculus provides a critical clue. Considering the Lambda Calculus is important, because it describes all computation in terms of behaviors (denoted by the special symbols λ, ., (, ), and blank) and meaningless symbols. There is no special symbol for "true".

def true = λx.λy.x

What this means is that "true" is a function of two objects, x and y and it returns the first object x. Truth is a behavior that can be used as a property. That is, this behavior can be attached to other symbols. It is the behavior that selects true things and rejects false things. False has symmetric behavior. It selects false things and rejects true things. So we've advanced from a special symbol to a behavior. But we aren't yet done.

The simplest if-then statement is:

if true then true else false

Truth is the behavior that selects itself. So we've derived the basis for the quote from Babylon 5, above. But we need to take one more step. Fundamental to the Lambda Calculus is the ability to distinguish between symbols. It is a behavior that is assumed by the Lambda calculus, one that doesn't have a special symbol like λ, ., (, ), and space to denote the behavior of distinguishing between symbols.
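
For readers who want to poke at "truth is the behavior that selects itself", here is a minimal Python sketch of the Lambda Calculus definitions. `TRUE` mirrors the definition λx.λy.x given above; `FALSE` (λx.λy.y) is the standard companion definition, which this post describes but does not write out; `IF` is a helper name invented for the example.

```python
# true = λx.λy.x : given two things, select the first.
TRUE = lambda x: lambda y: x

# false = λx.λy.y : given two things, select the second (standard definition,
# supplied here as an assumption since the post only spells out "true").
FALSE = lambda x: lambda y: y


def IF(condition, then_value, else_value):
    """'if c then a else b' is just c applied to a and b."""
    return condition(then_value)(else_value)


if __name__ == "__main__":
    # "if true then true else false": true selects itself.
    print(IF(TRUE, TRUE, FALSE) is TRUE)
    # The symmetric case: false selects itself.
    print(IF(FALSE, TRUE, FALSE) is FALSE)
```

Note that nothing in the sketch marks `TRUE` as special; it is distinguished only by what it does when handed two things.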

So consider a Lambda Calculus with two symbols:

And so, we find that truth is the ability to recognize self and select similar self-recognizing things.

And so, we find that electric charge gives us the laws of thought and truth, all in one force.

On Formal Proofs For/Against God

[Updated 2/2/2024]

Over on Ed Feser's blog is another attempt, in a never-ending series of attempts, to formally prove the existence of God. [1] I was playing devil's advocate by taking the position that the answer to Feser's question is a resounding "no" by providing counter-arguments to their arguments. [2] [3]

"Talmid" made the statement:

You can defend that the arguments fail...

This is where the light came on.

Nobody would say of the proof of the Pythagorean theorem, or of the non-existence of a largest prime number, that "the arguments fail." That isn't how proofs work. If a proof fails, it's because of one of two reasons. Either a premise is denied, or there is a mistake in the mechanical procedure of constructing the proof. When you read these proofs of God's existence (or non-existence), at some point you come to a step in the proof where it looks like the next logical step was taken by coin-flip, instead of logical necessity. This is evidence of the presence of an unstated premise.

Find the unstated premises. Don't let your common sense get in the way. [4] If the argument assumes that things have a beginning, question it. Why must history be linear and not, say, circular? [5] Why can't something come from nothing? That may defy common sense, but it's still an assumption.

Now, suppose that in an argument for or against God that there are five premises. If the premises are independent of each other (and they should be, otherwise one of them isn't a premise), and each premise has a 50-50 chance of being correct, then the proof has a one in thirty-two chance of being correct. Those aren't great odds.

An immediate response to this would be, "but Euclidean geometry has five premises, and it's correct! So why not an argument for/against God with the same number of premises?" The answer is simple. We can measure the results of Euclidean geometry with a ruler and a protractor. While it's against the rules to construct something in Euclidean geometry with anything other than a straightedge and a compass, it isn't against the rules to check the result with measuring devices. And for non-Euclidean geometry, which is used in Relativity, we can measure it against the curvature of light around stars and the gravitational waves produced by merging black holes.

But we can't measure God, at least the non-physical God as God is normally conceived[6].

If that's the case, then it doesn't make sense to argue for/against the existence of God by any means other than "assume God does/does not exist". That gives a one in two chance of being right, as opposed to one in four, one in eight, ... one in 2^(number of premises).
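
The arithmetic behind "one in 2^(number of premises)" can be checked with a short Python sketch. It assumes, as the argument above does, that the premises are independent and that each has the same chance of being correct; the function name is made up for the example.

```python
from fractions import Fraction


def chance_all_premises_hold(premises: int, p: Fraction = Fraction(1, 2)) -> Fraction:
    """Probability that every one of `premises` independent premises is correct,
    when each is correct with probability p."""
    return p ** premises


if __name__ == "__main__":
    for n in range(1, 6):
        print(n, chance_all_premises_hold(n))
```

With five premises at even odds the result is 1/32, and with the single premise "God does/does not exist" it is 1/2, matching the figures in the text.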

If the premise "God does/does not exist" leads to a contradiction then, assuming the principle of (non)contradiction, the premise is falsified. I suspect, but cannot prove, that both systems are logically consistent. If this is so, then the search for God by formal argument is futile.

[Update:]

It seems to me that if the search for God by formal argument is futile, then the choice of axiom - God does/does not exist - is a logically free choice. And if it's a choice that you are not logically compelled to make, then it comes down to desire. [7]

[1] Can a Thomist Reason to God a priori?

[2] Commenting as "wrf3".

[3] I've informally taken this position here, here, here, and here.

[4] One unstated premise is usually, "common sense is a reliable guide to true explanations." It isn't. Relativity, and Quantum Mechanics, defy "common sense". Quantum Mechanics, for example, uses negative probabilities in the equations of quantum behavior. What's a negative probability? What's a "-20% chance of rain"? Yet we are forced by experiment to describe Nature this way.

[5] Quantum Mechanics also defies our common sense on causation, cf. "Quantum Mischief".

[6] Sentience/consciousness/the inner mind cannot be objectively measured. See The Inner Mind.

[7] For the desire to be fulfilled, God must then fulfill it. You can't tickle yourself. If you want to experience tickling, you must be tickled by someone else. If you want to experience God, then God must reveal Himself.

Electric Charge and the Laws of Thought

| | Thought | Charge |
|---|---|---|
| 1 | Identity | Like charges repel, opposite charges attract |
| 2 | Non-contradiction | Positive charge is not negative charge |
| 3 | Excluded Middle | Charge is either positive or negative |

Dialog with Jeff Williams: Intermission

I would ask you to demonstrate why reason is an atomic arrangement, and why it being a part of nature would imply truth; and along with that how you would explain erroneous ideas and the limits of the invariability principles.

Having written the first three (of five) parts, I think I've answered everything except "the limits of the invariability principles." To do that, I have to finish the posts on "meaning" and "math," then ruminate on the nature of infinity and its relationship to nature (a small part of the latter is here, but I also have some unpublished material on that, too).

Since I think I've answered all but the last (and I have every reason to believe that I can answer the last, but with a lot more exposition), I'm going to take a break to mentally recharge before working on the next two parts.

Jeff can now attempt to rebut.

Table of Contents

- Jeff's original post

- Intro to my reply

- Part I to my reply

- Part IIa to my reply

- Part IIb to my reply

- Part III to my reply

- Intermission to my reply

Dialog with Jeff Williams: Part III

This is the third part to the answer of his question:

I would ask you to demonstrate why reason is an atomic arrangement, and why it being a part of nature would imply truth; and along with that how you would explain erroneous ideas and the limits of the invariability principles.

The answer will consist of five parts:

- The road to logic

- The road to truth

- Logic and Reason

- The road to meaning

- The road to math

This post will cover the third topic, Logic and Reason.


Dialog with Jeff Williams: Part IIb

This is the second part to the answer of his question:

I would ask you to demonstrate why reason is an atomic arrangement, and why it being a part of nature would imply truth; and along with that how you would explain erroneous ideas and the limits of the invariability principles.

The answer will consist of five parts:

- The road to logic

- The road to truth

- Logic and Reason[1]

- The road to meaning

- The road to math

This post will cover the second topic, the road to truth.


Dialog with Jeff Williams: Part IIa

Having set the stage, I will now answer his question:

I would ask you to demonstrate why reason is an atomic arrangement, and why it being a part of nature would imply truth; and along with that how you would explain erroneous ideas and the limits of the invariability principles.

The answer will consist of four parts:

- The road to logic

- The road to truth

- The road to meaning

- The road to math

This post will cover the first topic, the road to logic.


Dialog with Jeff Williams: Part I

On his blog, Jeff has asked me to respond to several points.

But before he makes his specific request, he makes some preliminary statements, some of which I take issue with. He writes:

I recognize two distinct innate modes of human thought: rational objectification of events in the world; and esthetic experience of Being.

I agree with Jeff that we make distinctions between the sense data of our experiences, the description of what we think our sense data is telling us about an external world (assuming an external world exists!), and the description of how we think that sense data compares to an ideal (the esthetic experience). Where I disagree with Jeff is the nature of these distinctions.

We are all just "ripples on the quantum pond" (do take time to read this link; if we disagree on this, we won't agree on the important things). So our sense data is ripples on the pond; our rational objectification of events is ripples on the pond; our esthetic experience is ripples on the pond; our "our" is ripples on the pond. For there to be true distinctions between these things, there need to be true distinctions in the ripples.

This means that there are ripples that give rise to logic, truth, and meaning, for these are the basis of our ability to describe events (Jeff's "rational objectification") and our ability to describe a "distance" between two events (which is the "is/ought" distinction). The only difference between the "rational objectification of events" and the "esthetic experience of Being" is that the latter involves a distance metric between two events or between an event and an "idealized" event.

The resulting representations do not exist as such in the external world...

Here, Jeff needs to demonstrate that there is an "external" -- as opposed to "internal" -- world. If everything is ripples on the pond, then the events and our descriptions of the events all exist in the same pond. The "internal"/"external" distinction is due to the limitations of our perception and is not due to a fundamental aspect of reality.

I retain Heidegger’s distinction between them as “Truth” arising from esthetic experience, and “Correctness” inhering in objectification.

I note that Jeff needs to define what "truth" and "correctness" are in his worldview, just as I will have to do in mine. Mine is easy.

Again, Being is reduced to copula.

This is problematic for several reasons, which Jeff will have to defend. First, how does anyone know what "Being" is, since we can't directly experience it? Second, it betrays a form of thinking where "Being" and "copula" are distinct things. As a Christian, I would argue that this is equivalent to the "modalist" heresy. I don't want to immediately derail this particular part of the discussion, but we may eventually have to go there (cf. my posts on the Trinity, which are more about the ways this doctrine shows how individuals think about things than they are about the doctrine itself).

Thankfully, we have no need to go through another tedious debate about duality.

I'm not sure we can ultimately escape it. As I (attempt to) show in On the Undecidability of Materialism vs. Idealism, both physicalism and metaphysicalism are dual ways of looking at the same thing. If Jeff wants to get rid of metaphysics, then the only way he's going to be able to do it is by a subjective mental coin flip. That is, the only way you can get rid of metaphysics is by arbitrary fiat. Note the duality: the only way you can get rid of physicalism is by arbitrary fiat, too.

So now we get to the discussion points. Jeff wrote:

my claim that reason is essentially different from reality ...

Reason can't be different from reality, since it's all just ripples on the quantum pond[1]. What I think Jeff wants to say is that reason allows us to construct descriptions that may, or may not, accurately describe reality. The hard part is knowing which descriptions belong to which class. Jeff wants to reject the idea of "Being" and "copula", but he has to provide a basis as to why. Why not say that "Being" and "accurate descriptions of Being" are both "Being"? (Note the parallel to Trinitarian thought.)

I will repeat my original answer to that question: while we have dedicated receptors and neural paths for each sensation, no such thing exists for reason. I cannot experience reason the way I do light.

If reason is just the swirling of atoms in certain ways in your brain, then you have to be able to experience it, even if the connection may not be obvious. As I will show in the next blog post, you do have neural paths for reason. I'll show how they work in theory. That this works in practice can be seen in the paper "Computation Emerges from Adaptive Synchronization of Networking Neurons". And, if you're like other people (admittedly, my sample size is small), you experience reason by talking to yourself (we subvocalize our thoughts). That is, computation has to interact with its environment for the results of computation to be known. The swirling atoms in your brain which are your reason interact with the sense receptors in your brain to make the results of reason known. That is, your sense receptors can be triggered by interaction with an external swirl of atoms as well as the internal swirl of atoms.

where I can create mathematics or logical forms, but this is entirely without external sense data.

I will show that this is false. You cannot sever the roots of mathematics from sense data. But I first have to show where you get logic, then truth, then meaning, and then math.

Without converting to the imaginings of space and time, I have no intuition of reason at all.

This, too, is false. One of the things that has to be understood is that, when it comes to physical devices, there is no difference between the hardware and the software. We may not know what initial knowledge the wiring of our brains gives us, but it's clear that it's there. See, e.g. "Addition and subtraction by human infants", Karen Wynn, Nature, Vol 361, 28 January 1993.

The new subject of quantum mind is attracting top physicists and neuroscientists and perhaps offers the path to understanding.

On the one hand, everything is quantum. On the other hand, let me quote Feynman:

Computer theory has been developed to a point where it realizes that it doesn't make any difference; when you get to a universal computer, it doesn't matter how it's manufactured, how it's actually made.[2]

That is, computer theory doesn't care about the actual physical construction details as long as you get the right behavior.

Your model, however, centers on atoms, not waves, and leaves the exact principles unspecified.

As per the Feynman quote, it doesn't matter if the model is based on atoms or waves. The model doesn't care. I'm going to use atoms simply because it's easier. And this is an interesting property, since whatever quantum "stuff" is, it exhibits wave-particle duality. That is, computation theory is wave-particle agnostic. What matters is the actual behavior.

and since atomic arrangements are part of nature, that implies an exact connection and description of truth.

Not quite. There is an exact connection between connection and description, but that doesn't mean that every description is true. Remember, there are false as well as true descriptions.

It would also leave unexplained the limitations of Wigner’s invariability principles, which seem to demonstrate the inability of reason to grasp anything larger than a very limited set of events within limited space and time.

Sure, our brains, being physical objects, have physical limitations on what they can keep in mind at one time. But the wonderful thing about Turing machines is that they can use external storage. In fact, to the best of my knowledge, man is the only animal that does use external storage for thoughts. We have all the physical bits in the universe by which we can augment our reason.

Instead, I would ask you to demonstrate why reason is an atomic arrangement ...

Better, reason is matter in motion in certain patterns. If you want to get a preview, see Notes on Feser's "From Aristotle..." If you have questions about this, I can try to address them in the next post.

[1] Unless, of course, you want to admit a transcendent God, who is reason itself and the non-physical cause of all physical things.

[2] Simulating Physics with Computers, International Journal of Theoretical Physics, Vol. 21, Nos. 6/7, 1982

Dialog with Jeff Williams: Intro

Eleven days later, the discussion is still going. I cobbled some code together to pull the entire conversation from this starting tweet, formatted it a bit, and saved it in a text file here.[3] It helps immensely to be able to search the complete discussion for what has been said, to look for conversational loops, dead ends, and unanswered questions.

But the conversation has outgrown Twitter. At this point in Twitter, Jeff has asked me to defend one of my claims and has switched to his blog to continue this phase of the dialog. His post is here. After some preliminary remarks, I will respond directly here on my blog. If Twitter isn't a very good medium for these kinds of things, neither are a blog's commenting facilities, particularly since I'm going to want to use diagrams to illustrate some points.

Why have Jeff and I been doing this for almost two weeks now? I won't speak for Jeff but, while I thoroughly disagree with some of his fundamental statements and think that his worldview is ultimately incoherent, we do agree in some surprising ways. For example, he posted a rebuttal to some arguments made by the Christian apologist William Lane Craig. While I don't agree with everything in his rebuttal, I do agree that Craig (as well as most contemporary apologists) are an embarrassment. I'll try my best not to join them.

I also agree that reality, whatever it is, is deeply counterintuitive. I've posted Feynman's comments about the nature of nature from his lecture on quantum mechanics before (e.g., here and here). They are[1]: