Artificial Intelligence

Elephant's Breath

05/10/24 11:22 AM

[updated 3 November 2024]

I read this passage in Viv Albertine's "Clothes3. Music3. Boys3":

Each morning I start again with the questions, easy stuff, like colour – I’ve always been drawn to colour. Mum made colour interesting for me, when I was little; she would say, ‘See the colour of that woman’s skirt? That’s called elephant’s breath.’ Or, ‘See that ribbon? It’s mint green...

I had never heard of "elephant's breath" before. It's a paint color from the British manufacturer Farrow and Ball. This site says that the RGB values for Elephant's Breath are 204, 191, 179. ChatGPT says that the RGB values are 187, 173, 160.
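To get a feel for how far apart those two answers are, here is a minimal sketch in Python. The two triples are the ones quoted above; the helper names (`to_hex`, `rgb_distance`) are made up for this example, and straight-line distance in RGB space is only a rough stand-in for how different two colors look.

```python
import math

# RGB triples quoted in the post; the variable names are illustrative.
site_rgb = (204, 191, 179)
chatgpt_rgb = (187, 173, 160)

def to_hex(rgb):
    """Format an (r, g, b) triple as a #RRGGBB string."""
    return "#{:02X}{:02X}{:02X}".format(*rgb)

def rgb_distance(a, b):
    """Straight-line distance between two colors in RGB space."""
    return math.dist(a, b)

print(to_hex(site_rgb), to_hex(chatgpt_rgb))   # #CCBFB3 #BBADA0
print(round(rgb_distance(site_rgb, chatgpt_rgb), 1))
```

Each channel differs by 17 to 19 units out of 255, so the two values describe similar but visibly different shades.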

ChatGPT draws it as:

"Stirring the emerald green"

07/22/16 02:00 PM

[Updated 05/10/2024]

In surprise, but as slowly as in regret, she stopped stirring the emerald green. She got up from where she had been squatting in the middle of the floor and stepped over the dishes which were set up on the matting rug. She went quietly to her south window, where she lifted a curtain, spotting it with her wet fingers.

For some reason, I was entranced by the phrase "stirring the emerald green." It's a beautiful combination of words. But what could it possibly mean? There was nothing in the text up to that point that hinted at what she might have been doing.

She banged her hands on her hipbones, enough to hurt, flung around, and went back to her own business. On one bare foot with the other crossed over it, she stood gazing down at the pots and dishes in which she had enough color stirred up to make a sunburst design. She was shut up in here to tie-and-dye a scarf. [pg. 34, 36]

Now it all makes sense!

I found this book at Riverby Books in Fredericksburg, VA and bought it on a whim because Welty lived near Belhaven University, where my daughter went to school. Welty's style is reminiscent of Ray Bradbury and Bradbury notes her influence on his writing.

The Physical Nature of Thought

05/26/14 08:41 PM

Two monks were arguing about a flag. One said, "The flag is moving." The other said, "The wind is moving." The sixth patriarch, Zeno, happened to be passing by. He told them, "Not the wind, not the flag; mind is moving."

-- "Gödel, Escher, Bach", Douglas Hofstadter, pg. 30

Is thought material or immaterial? By "material" I mean an observable part of the universe such as matter, energy, space, charge, motion, or time. By "immaterial" I mean something other than these things.

Russell wrote:

The problem with which we are now concerned is a very old one, since it was brought into philosophy by Plato. Plato's 'theory of ideas' is an attempt to solve this very problem, and in my opinion it is one of the most successful attempts hitherto made. … Thus Plato is led to a supra-sensible world, more real than the common world of sense, the unchangeable world of ideas, which alone gives to the world of sense whatever pale reflection of reality may belong to it. The truly real world, for Plato, is the world of ideas; for whatever we may attempt to say about things in the world of sense, we can only succeed in saying that they participate in such and such ideas, which, therefore, constitute all their character. Hence it is easy to pass on into a mysticism. We may hope, in a mystic illumination, to see the ideas as we see objects of sense; and we may imagine that the ideas exist in heaven. These mystical developments are very natural, but the basis of the theory is in logic, and it is as based in logic that we have to consider it. [It] is neither in space nor in time, neither material nor mental; yet it is something. [Chapter 9]

I claim that Russell and Plato are wrong. Not necessarily wrong that ideas exist independently of the material; they might.[1] Rather, I claim that if ideas do exist apart from the physical universe, then we can't prove that this is the case. The following is a bare-minimum outline of why.

Logic

Logic deals with the combination of separate objects. Consider the case of Boolean logic. There are sixteen ways to combine apples and oranges such that two input objects result in one output object. These sixteen possible combinations are enumerated here. The table uses 1's and 0's instead of apples and oranges, but that doesn't matter. It could just as well be bees and bears. For now, the form of the matter doesn't matter. What matters is which outputs are associated with which inputs.[2]

Composition
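The sixteen combinations from the Logic section can be generated mechanically. This is a minimal sketch in Python, not the referenced table: the numbering of the devices (bit k of the device number is the output for the k-th input pair) is a convention assumed for this example, and the names `INPUTS`, `truth_table`, and `ALL_DEVICES` are hypothetical.

```python
from itertools import product

# The four possible input pairs, in a fixed order: (0,0), (0,1), (1,0), (1,1).
INPUTS = list(product((0, 1), repeat=2))

def truth_table(index):
    """Outputs of device number `index` (0..15) for the four input pairs.

    Bit k of `index` is the output for INPUTS[k]; this numbering is a
    convention chosen for the sketch, not one taken from the post.
    """
    return tuple((index >> k) & 1 for k in range(4))

# Four input pairs, two possible outputs each: 2**4 = 16 devices.
ALL_DEVICES = {i: truth_table(i) for i in range(16)}

for i, outputs in ALL_DEVICES.items():
    row = "  ".join(f"{a}{b}->{out}" for (a, b), out in zip(INPUTS, outputs))
    print(f"device {i:2d}: {row}")
```

Under this numbering, device 8 outputs 1 only when both inputs are 1 (AND) and device 7 is its mirror image (NAND). Swapping 1 and 0 for apples and oranges changes the labels but not the table.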

Suppose, for the sake of argument, that we have 16 devices, one for each of the 16 possible ways to combine two things into one. We can compose a sequence of those devices so that the output of one device becomes the input to another. We first observe that we don't really need 16 different devices. If we can somehow change an "orange" into an "apple" (or 1 into 0, or a bee into a bear) and vice versa, then we only need 8 devices: half of them are the same as the other half, with the additional step of "flipping" the output. With a bit more work, we can show that two of the sixteen devices are special: copies of either one, chained together, can produce the same output as any of the sixteen devices. These two devices, known as "NOT OR" (NOR) and "NOT AND" (NAND), are called "universal" logic devices because of this property. So if we have one kind of device, say a NAND gate, we can do all of the operations associated with Boolean logic.[3]

Calculation
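The universality claim can be checked by brute force, and the same NAND-only parts are enough to add binary numbers. The sketch below is illustrative only: the function names (`reachable`, `full_adder`, `add`) and the 8-bit width are assumptions of this example, and the adder is a textbook arrangement, not necessarily the circuit from the referenced pages.

```python
def nand(a, b):
    return 1 - (a & b)

def nor(a, b):
    return 1 - (a | b)

def reachable(gate):
    """All truth tables obtainable by chaining `gate`, starting from inputs a and b."""
    a = (0, 0, 1, 1)   # value of input a for each of the four input pairs
    b = (0, 1, 0, 1)   # value of input b for the same four pairs
    found = {a, b}
    while True:
        new = {tuple(gate(x, y) for x, y in zip(p, q)) for p in found for q in found}
        if new <= found:
            return found
        found |= new

# Every gate below is built from nand() and nothing else.
def not_(a):
    return nand(a, a)

def and_(a, b):
    return not_(nand(a, b))

def or_(a, b):
    return nand(not_(a), not_(b))

def xor_(a, b):
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def full_adder(a, b, carry_in):
    """Add three bits; return (sum bit, carry-out bit)."""
    partial = xor_(a, b)
    total = xor_(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out

def add(x, y, bits=8):
    """Ripple-carry addition of two non-negative integers, modulo 2**bits."""
    carry, result = 0, 0
    for i in range(bits):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result

print(len(reachable(nand)), len(reachable(nor)))   # 16 16
print(len(reachable(lambda a, b: a & b)))          # 3: AND alone is not universal
print(add(19, 23))                                 # 42
```

Chaining NAND (or NOR) reaches all sixteen truth tables, while chaining AND only ever reaches three of them.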

NAND devices (henceforth called gates) are a basis for modern computers. The composition of NAND gates can perform addition, subtraction, multiplication, division, and so on. As an example, the previously referenced page concluded by using NAND gates to build a circuit that added binary numbers. This circuit was further simplified here and then here.

Memory

We further observe that, by connecting two NAND gates in a certain way, we can implement memory.

Computation
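The two-gate memory can be simulated as well. This sketch assumes the usual cross-coupled arrangement, in which each gate's output feeds one input of the other (an SR latch with active-low set and reset lines); that may or may not be the exact wiring the post has in mind. The class name `NandLatch` and its `step` method are hypothetical.

```python
def nand(a, b):
    return 1 - (a & b)

class NandLatch:
    """Two cross-coupled NAND gates: each gate's output feeds the other gate."""

    def __init__(self):
        self.q, self.q_bar = 0, 1   # start out storing 0

    def step(self, set_n, reset_n):
        """Apply the two active-low inputs and let the feedback loop settle.

        set_n=0 stores a 1, reset_n=0 stores a 0, and set_n=reset_n=1
        holds whatever was stored. Driving both inputs to 0 at once is
        the latch's forbidden input and is not handled specially here.
        """
        for _ in range(4):   # a few passes are enough for the loop to settle
            q = nand(set_n, self.q_bar)
            q_bar = nand(reset_n, q)
            self.q, self.q_bar = q, q_bar
        return self.q

latch = NandLatch()
print(latch.step(0, 1))   # set: stores 1
print(latch.step(1, 1))   # hold: still 1
print(latch.step(1, 0))   # reset: stores 0
print(latch.step(1, 1))   # hold: still 0
```

The stored bit lives nowhere except in the loop between the two gates, which is the sense in which wiring alone yields memory.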

Memory and calculation, both of which are implemented by arrangements of NAND gates, are sufficient to compute anything which can be computed (cf. Turing machines and the Church-Turing thesis).

Meaning

Electrons flowing through NAND gates don't mean anything. It's just the combination and recombination of high and low voltages. How can it mean anything? Meaning arises out of the way the circuits are wired together. Consider a simple circuit that takes two inputs, each of which is either A or B. If both inputs are A, it outputs A; if both inputs are B, it also outputs A; and if one input is A and the other is B, it outputs B. By making the arbitrary choice that "A" represents "yes" or "true" or "equal", we can read this circuit as testing whether its inputs are the same, and more complex circuits can be built that determine the equivalence of two things. This is the simplified version of Hofstadter's claim:

When a system of "meaningless" symbols has patterns in it that accurately track, or mirror, various phenomena in the world, then that tracking or mirroring imbues the symbols with some degree of meaning -- indeed, such tracking or mirroring is no less and no more than what meaning is.

-- Gödel, Escher, Bach; pg P-3
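The A/B circuit described above can be wired from NAND gates alone. A minimal sketch, assuming that "A" is encoded as a high voltage (1) and "B" as a low one (0); the names `same`, `circuit`, and `equal_bits` are hypothetical, and the five-gate wiring is one common arrangement among several.

```python
def nand(a, b):
    return 1 - (a & b)

def same(a, b):
    """1 when the two bits match, 0 otherwise, using NAND gates only."""
    n = nand(a, b)
    differ = nand(nand(a, n), nand(b, n))   # XOR from four NANDs
    return nand(differ, differ)             # a fifth NAND inverts it

# The "arbitrary choice": which voltage level stands for which symbol.
ENCODE = {"A": 1, "B": 0}
DECODE = {1: "A", 0: "B"}

def circuit(x, y):
    """The circuit from the text: A if the inputs match, B if they differ."""
    return DECODE[same(ENCODE[x], ENCODE[y])]

def and_(a, b):
    n = nand(a, b)
    return nand(n, n)

def equal_bits(xs, ys):
    """Compare two equal-length bit sequences by chaining `same` through AND."""
    result = 1
    for x, y in zip(xs, ys):
        result = and_(result, same(x, y))
    return result

for pair in [("A", "A"), ("B", "B"), ("A", "B"), ("B", "A")]:
    print(pair, "->", circuit(*pair))

print(equal_bits([1, 0, 1, 1], [1, 0, 1, 1]))   # 1
print(equal_bits([1, 0, 1, 1], [1, 1, 1, 1]))   # 0
```

Nothing in `same` knows about equality; the reading "these two things are equal" comes from the encoding and from how the gates are connected.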

Neurons and NAND gates

The brain is a collection of neurons and axons through which electrical signals flow. A computer is a collection of NAND gates and wires through which electrons flow. The differences are the number of neurons compared to the number of NAND gates, the number and arrangement of wires, and the underlying substrate. One is based on carbon, the other on silicon. But the substrate doesn't matter.

That neurons and NAND gates are functionally equivalent isn't hard to demonstrate. Neurons can be arranged so that they perform the same logical operation as a NAND gate. A neuron acts by summing weighted inputs, comparing the sum to a threshold, and "firing" if the weighted sum is greater than the threshold. It's a calculation that can be done by a circuit of NAND gates.
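One direction of that equivalence fits in a few lines. This is a minimal sketch of the idealized threshold neuron described above, not a model of a biological cell; the weights (-1, -1) and threshold -1.5 are hand-picked for the example, and many other values would work.

```python
def neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

def neuron_nand(a, b):
    # Both inputs inhibit the neuron; only when both are on does the
    # weighted sum (-2) drop below the threshold (-1.5) and stop it firing.
    return neuron((a, b), weights=(-1, -1), threshold=-1.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", neuron_nand(a, b))
```

A single threshold unit with two inhibitory inputs behaves exactly like a NAND gate, so anything built from NAND gates could in principle be built from such units.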

Logic, Matter, and Waves

It's possible to create logic gates using particles. See, for example, the Billiard-ball computer, or fluid-based gates where particles (whether billiard balls or streams of water) bounce off each other such that the way they bounce can implement a universal gate.

It's also possible to create logic gates using waves. See, for example, here [PDF] and here [paid access] for gates using acoustics and optics.

I suspect, but need to research further, that waves are the proper way to model logic, since it seems more natural to me that the combination of bees and bears is a subset of wave interference rather than particle deflection.

Self-Reference

[4] So why are Russell and Plato wrong? It is because it is the logic gates in our brains that recognize logic, i.e. the way physical things combine. Just as a sequence of NAND gates can output "A" if the inputs are both A or both B, a sequence of NAND gates can recognize itself. Change a wire and the ability to recognize the self goes away. That's why dogs don't discuss Plato. Their brains aren't wired for it. Change the wiring in our brains and we wouldn't, either. Hence, while we can separate ideas from matter in our heads, it is only because of a particular arrangement of matter in our heads. There's no way for us to break this "vicious" circle.

Footnotes

[1] "In the beginning was the Word..." As a Christian, I take it on faith that the immaterial, transcendent, uncreated God created the physical universe.

[2] Note that the "laws of logic" follow from the world of oranges and apples, bees and bears, 1s and 0s. Something is either an orange or an apple, a bee or a bear. Thus the "law" of contradiction. An apple is an apple, a zero is a zero. Thus the "law" of identity. Since there are only two things in the system, the law of the excluded middle follows.

[3] This previous post shows how NAND gates can be composed to calculate all sixteen possible ways to combine two things.

[4] "Drawing Hands", M. C. Escher

The "Problem" of Qualia

05/18/13 08:12 PM

[updated 13 June 2020 to add Davies quote]

[updated 25 July 2022 to provide more detail on "Blind Mary"]

[updated 5 August 2022 to provide reference to Nobel Prize for research into touch and heat; note that movie Ex Machina puts the "Blind Mary" experiment to film]

[updated 6 January 2024 to say more about philosophical zombies]

[updated 4 November 2024 to expand on "Blind Mary"]

"Qualia" is the term given to our subjective sense impressions of the world. How I see the color green might not be how you see the color green, for example. From this follows flawed arguments which try to show how qualia are supposedly a problem for a "physicalist" explanation of the world.

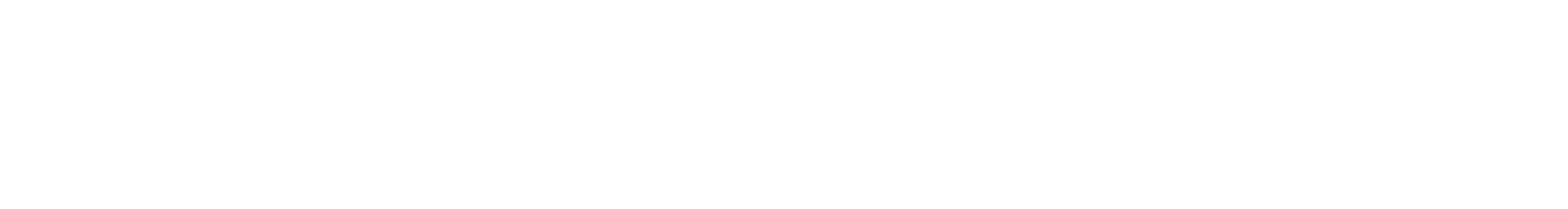

The following diagram shows an idealized process by which different colors of light enter the eye and are converted into "qualia" -- the brain's internal representation of the information. Obviously the brain doesn't represent color as 10 bits of information. But the principle remains the same, even if the actual engineering is more complex.

Atheism and Evidence, Redux

08/12/11 11:43 PM

In May I wrote "Atheism: It isn't about evidence". The gist was that the evidence for/against theism in general, and Christianity in particular, is the same for both theist and atheist. The difference is how brains process that evidence. I cited this article that said that people with Asperger's typically don't think teleologically. It also said that atheists think teleologically, but then suppress those thoughts.

Today, I came across the article "Does Secularism Make People More Ethical?" The main thesis of the article is nonsense, but it does reference work by Catherine Caldwell-Harris of Boston University. Der Spiegel (The Mirror) said:

Boston University's Catherine Caldwell-Harris is researching the differences between the secular and religious minds. "Humans have two cognitive styles," the psychologist says. "One type finds deeper meaning in everything; even bad weather can be framed as fate. The other type is neurologically predisposed to be skeptical, and they don't put much weight in beliefs and agency detection."

Caldwell-Harris is currently testing her hypothesis through simple experiments. Test subjects watch a film in which triangles move about. One group experiences the film as a humanized drama, in which the larger triangles are attacking the smaller ones. The other group describes the scene mechanically, simply stating the manner in which the geometric shapes are moving. Those who do not anthropomorphize the triangles, she suspects, are unlikely to ascribe much importance to beliefs. "There have always been two cognitive comfort zones," she says, "but skeptics used to keep quiet in order to stay out of trouble."

This broadly agrees with the Scientific American article, although it isn't clear if the non-anthropomorphizing group is thinking teleologically, but then suppressing it (which is characteristic of atheists) or not seeing meaning at all (characteristic of those with Asperger's).

Caldwell-Harris' work buttresses the thesis of "Atheism: It isn't about evidence".

Her work is also interesting from the perspective of artificial intelligence. One purpose of the Turing Test is to determine whether or not an artificial intelligence has achieved human-level capability. Her "triangle film" is not unlike a form of Turing Test, since agency detection is a component of recognizing intelligence. If the movement of the triangles were truly random, then the non-anthropomorphizing group was correct in giving a mechanical interpretation to the scene. But if the filmmaker imbued the triangle film with meaning, then the anthropomorphizing group picked up a sign of intelligent agency which was missed by the other group.

I wrote her and asked about this. She has absolutely no reason to respond to my query, but I hope she will.

Finally, I have to mention that the Der Spiegel article cites researchers who claim that secularism will become the majority view in the West, which contradicts the sources in my blog post. On the one hand, it's a critical component of my argument. On the other hand, I just don't have time for more research into this right now.

McCarthy, Hofstadter, Hume, AI, Zen, Christianity

07/30/11 09:46 AM

A number of posts have noted the importance of John McCarthy's third design requirement for a human level artificial intelligence: "All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable." I claim here, here, and here that this gives rise to our knowledge of good and evil. I claim here that this explains the nature of the "is-ought" divide. I believe that McCarthy's insight has the potential to provide a framework that allows science to understand and inform morality and may wed key insights in religion with computer science. Or, I may be a complete nutter finding patterns where there are none. If so, I may be in good company.

For example, in Gödel, Escher, Bach, Hofstadter writes:

It is an inherent property of intelligence that it can jump out of the task which it is performing, and survey what it has done; it is always looking for, and often finding, patterns. (pg. 37)

Over 400 pages later, he repeats this idea:

This drive to jump out of the system is a pervasive one, and lies behind all progress in art, music, and other human endeavors. It also lies behind such trivial undertakings as the making of radio and television commercials. (pg. 478)

It seems to me that McCarthy's third requirement is behind this drive to "jump out" of the system. If a system is to be improved, it must be analyzed and compared with other systems, and this requires looking at a system from the outside.

Hofstadter then ties this in with Zen:

In Zen, too, we can see this preoccupation with the concept of transcending the system. For instance, the kōan in which Tōzan tells his monks that "the higher Buddhism is not Buddha". Perhaps, self transcendence is even the central theme of Zen. A Zen person is always trying to understand more deeply what he is, by stepping more and more out of what he sees himself to be, by breaking every rule and convention which he perceives himself to be chained by – needless to say, including those of Zen itself. Somewhere along this elusive path may come enlightenment. In any case (as I see it), the hope is that by gradually deepening one's self-awareness, by gradually widening the scope of "the system", one will in the end come to a feeling of being at one with the entire universe. (pg. 479)

Note the parallels to, and differences with, Christianity. Jesus said to Nicodemus, "You must be born again." (John 3:3) The Greek includes the idea of being born "from above" and "from above" is how the NRSV translates it, even though Nicodemus responds as if he heard "again". In either case, you must transcend the system. The Zen practice of "breaking every rule and convention" is no different from St. Paul's charge that we are all lawbreakers (Rom 3:9-10,23). The reason we are lawbreakers is that the law is not what it ought to be. And it is not what it ought to be because of our inherent knowledge of good and evil which, if McCarthy is right, is how our brains are wired. Where Zen and Christianity disagree is that Zen holds that man can transcend the system by his own effort while Christianity says that man's effort is futile: God must effect that change. In Zen, you can break outside the system; in Christianity, you must be lifted out.

Note, too, that both have the same end goal, where finally man is at "rest". The desire to "step out" of the system, to continue to "improve", is finally at an end. The "is-ought" gap is forever closed. The Zen master is "at one with the entire universe" while for the Christian, the New Jerusalem has descended to Earth, the "sea of glass" that separates heaven and earth is no more (Rev 4:6, 21:1) so that "God may be all in all." (1 Cor 15:28). Our restless goal-seeking brain is finally at rest; the search is over.

All of this as a consequence of one simple design requirement: that everything must be improvable.

The Is-Ought Problem Considered As A Question Of Artificial Intelligence

07/22/11 09:05 PM

In his book A Treatise of Human Nature, the Scottish philosopher David Hume wrote:

In every system of morality, which I have hitherto met with, I have always remarked, that the author proceeds for some time in the ordinary way of reasoning, and establishes the being of a God, or makes observations concerning human affairs; when of a sudden I am surprized to find, that instead of the usual copulations of propositions, is, and is not, I meet with no proposition that is not connected with an ought, or an ought not. This change is imperceptible; but is, however, of the last consequence. For as this ought, or ought not, expresses some new relation or affirmation, it is necessary that it should be observed and explained; and at the same time that a reason should be given, for what seems altogether inconceivable, how this new relation can be a deduction from others, which are entirely different from it.

This is the "is-ought" problem: in the area of morality, how to derive what ought to be from what is. Note that it is the domain of morality that seems to be the cause of the problem; after all, we derive ought from is in other domains without difficulty. Artificial intelligence research can show why the problem exists in one field but not others.

The is-ought problem is related to goal attainment. We return to the game of Tic-Tac-Toe as used in the post The Mechanism of Morality. It is a simple game, with a well-defined initial state and a small enough state space that the game can be fully analyzed. Suppose we wish to program a computer to play this game. There are several possible goal states:

As another example, suppose we wish to drive from point A to point B. The final goal is well established but there are likely many different paths between A and B. Additional considerations, such as shortest driving time, the most scenic route, the location of a favorite restaurant for lunch, and so on influence which of the several paths is chosen.

We can therefore characterize the is-ought problem as a beginning state B, an end state E, a set P of paths from B to E, and a set of conditions C. "Ought" is then the path in P that satisfies the conditions in C. In other words, the is-ought problem is a search problem.
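The B, E, P, C formulation translates almost directly into code. The sketch below uses the driving example with a made-up road map (`ROADS`, with driving times in minutes); the function names are hypothetical, and the `cost` argument used to choose among several acceptable paths is an assumption added for the example.

```python
# Hypothetical road map: ROADS[x][y] is the driving time in minutes from x to y.
ROADS = {
    "A": {"highway": 20, "coast": 35},
    "highway": {"B": 25},
    "coast": {"diner": 10, "B": 30},
    "diner": {"B": 15},
}

def all_paths(graph, start, goal, path=()):
    """Yield every loop-free path from start to goal (the set P)."""
    path = path + (start,)
    if start == goal:
        yield path
        return
    for nxt in graph.get(start, {}):
        if nxt not in path:
            yield from all_paths(graph, nxt, goal, path)

def drive_time(path):
    return sum(ROADS[u][v] for u, v in zip(path, path[1:]))

def ought(graph, start, goal, conditions, cost):
    """The path in P that meets every condition in C; `cost` breaks ties.

    Returns None when no path satisfies the conditions.
    """
    candidates = [p for p in all_paths(graph, start, goal)
                  if all(cond(p) for cond in conditions)]
    return min(candidates, key=cost, default=None)

print(ought(ROADS, "A", "B", [], drive_time))
# ('A', 'highway', 'B')
print(ought(ROADS, "A", "B", [lambda p: "diner" in p], drive_time))
# ('A', 'coast', 'diner', 'B')
```

Changing the conditions changes the answer without changing the map: the same "is" yields a different "ought" once lunch at a favorite restaurant becomes a requirement.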

The game of Tic-Tac-Toe is simple enough that the game can be fully analyzed: the state space is small enough that an exhaustive search can be made of all possible moves. Games such as Chess and Go are so complex that they haven't been fully analyzed, so we have to make educated guesses about the set of paths to the end game. The fancy name for these guesses is "heuristics" and one aspect of the field of artificial intelligence is discovering which guesses work well for various problems. The sheer size of the state space contributes to the difficulty of establishing common paths. Assume three chess programs, White1, White2, and Black. White1 plays Black, and White2 plays Black. Because of different heuristics, White1 and White2 would agree on everything except perhaps the next move that ought to be made. If White1 and White2 achieve the same won/loss record against Black, the only way to know which program had the better heuristic would be to play White1 against White2. Yet even if a clear winner were established, there would still be the possibility of an even better player waiting to be discovered. The sheer size of the game space precludes determining "ought" with any certainty.
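"Fully analyzed" can be made concrete for Tic-Tac-Toe. The sketch below is a plain exhaustive (minimax) search with memoization; the board is assumed to be a 9-character string read row by row, and the names `value` and `best_move` are hypothetical. It is not the program from the earlier post.

```python
from functools import lru_cache

# The eight winning lines, as indexes into a 9-character board string.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def winner(board):
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

@lru_cache(maxsize=None)
def value(board, player):
    """Outcome with best play by both sides: +1 X wins, -1 O wins, 0 draw."""
    won = winner(board)
    if won:
        return 1 if won == "X" else -1
    if " " not in board:
        return 0
    other = "O" if player == "X" else "X"
    results = [value(board[:i] + player + board[i + 1:], other)
               for i, cell in enumerate(board) if cell == " "]
    return max(results) if player == "X" else min(results)

def best_move(board, player):
    """The move `player` ought to make: the one with the best guaranteed outcome."""
    other = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]

    def score(i):
        return value(board[:i] + player + board[i + 1:], other)

    return max(moves, key=score) if player == "X" else min(moves, key=score)

print(value(" " * 9, "X"))          # 0: perfect play from the empty board is a draw
print(best_move("XX OO    ", "X"))  # 2: complete the top row
```

Here "ought" is fully determined because every path to every end state can be visited. The same function applied to chess would be correct in principle and hopeless in practice, which is where heuristics come in.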

The metaphor of life as a game (in the sense of achieving goals) is apt here, and morality is the set of heuristics we use to navigate the state space. The state space for life is much larger than the state space for chess; unless there is a common set of heuristics for living, it is unlikely that humans will choose the same paths toward a goal. Yet the size of the state space isn't the only contributing factor to the problem of establishing oughts with respect to morality. A chess program has a single goal: to play chess according to some set of conditions. Humans, however, are not fixed-goal agents. This claim rests on John McCarthy's five design requirements for human level artificial intelligence as detailed here and here. In brief, McCarthy's third requirement was "All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable." What this means for a self-aware agent is that nothing is what it ought to be. The details of how this works out in our brains are unclear, but part of our wetware is not satisfied with the status quo. There is an algorithmic "pressure" to modify goals. This means that the gap between is and ought is an integral part of our being, which is compounded by the size of the state space. Not only is there the inability to fully determine the paths to an end state, there is also the impulse to change the end states and the conditions for choosing among candidate paths.

What also isn't clear is the relationship between reason and this sense of "wrongness." Personal experience is sufficient to establish that there are times we know what the right thing to do is, yet we do not do it. That is, reason isn't always sufficient to stop our brain's search algorithm. Since Hume mentioned God, it is instructive to ask the question, "why is God morally right?" Here, "God" represents both the ultimate goal and the set of heuristics for obtaining that goal. This means that, by definition, God is morally right. Yet the "problem" of theodicy shows that in spite of reason, there is no universally agreed upon answer to this question. The mechanism that drives goal creation is opposed to fixed goals, of which "God" is the ultimate expression.

In conclusion, the "is-ought" gap is algorithmic in nature. It exists partly because of the inability to fully search the state space of life and partly because of the way our brains are wired for goal creation and goal attainment.

In every system of morality, which I have hitherto met with, I have always remarked, that the author proceeds for some time in the ordinary way of reasoning, and establishes the being of a God, or makes observations concerning human affairs; when of a sudden I am surprized to find, that instead of the usual copulations of propositions, is, and is not, I meet with no proposition that is not connected with an ought, or an ought not. This change is imperceptible; but is, however, of the last consequence. For as this ought, or ought not, expresses some new relation or affirmation, it is necessary that it should be observed and explained; and at the same time that a reason should be given, for what seems altogether inconceivable, how this new relation can be a deduction from others, which are entirely different from it.

This is the "is-ought" problem: in the area of morality, how to derive what ought to be from what is. Note that it is the domain of morality that seems to be the cause of the problem; after all, we derive ought from is in other domains without difficulty. Artificial intelligence research can show why the problem exists in one field but not others.

The is-ought problem is related to goal attainment. We return to the game of Tic-Tac-Toe as used in the post The Mechanism of Morality. It is a simple game, with a well-defined initial state and a small enough state space that the game can be fully analyzed. Suppose we wish to program a computer to play this game. There are several possible goal states:

- The computer will always try to win.

- The computer will always try to lose.

- The computer will play randomly.

- The computer will choose between winning or losing based upon the strength of the opponent. The more games the opponent has won, the more the computer plays to win.

As another example, suppose we wish to drive from point A to point B. The final goal is well established but there are likely many different paths between A and B. Additional considerations, such as shortest driving time, the most scenic route, the location of a favorite restaurant for lunch, and so on influence which of the several paths is chosen.

We can therefore characterize the is-ought problem as a beginning state B, an end state E, a set P of paths from B to E, and a set of conditions C. "Ought" is then the path in P that best satisfies the conditions in C. In other words, the is-ought problem is a search problem.
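The driving example can be sketched as a small search, as a rough illustration only. The road map, the place names ("Highway", "Coast", "Diner"), and the numbers are all made up for this sketch; `ought` is a hypothetical helper that enumerates the set P of paths from B to E and returns the one that best satisfies a condition from C.

```python
# Hypothetical road map: (from, to) -> attributes of that stretch of road.
ROADS = {
    ("A", "Highway"): {"minutes": 20, "scenery": 1},
    ("Highway", "B"): {"minutes": 25, "scenery": 1},
    ("A", "Coast"): {"minutes": 35, "scenery": 5},
    ("Coast", "B"): {"minutes": 40, "scenery": 4},
    ("A", "Diner"): {"minutes": 30, "scenery": 2},
    ("Diner", "B"): {"minutes": 30, "scenery": 2},
}


def neighbors(node):
    """Places reachable from node in one step."""
    return [v for (u, v) in ROADS if u == node]


def all_paths(begin, end, path=None):
    """Enumerate P: every loop-free path from begin to end."""
    path = (path or []) + [begin]
    if begin == end:
        yield path
        return
    for nxt in neighbors(begin):
        if nxt not in path:
            yield from all_paths(nxt, end, path)


def total(path, key):
    """Sum one attribute (minutes or scenery) along a path."""
    return sum(ROADS[(u, v)][key] for u, v in zip(path, path[1:]))


def ought(begin, end, condition):
    """The path in P with the lowest cost under the given condition."""
    return min(all_paths(begin, end), key=condition)


# Same B and E, different conditions C, different "oughts".
fastest = ought("A", "B", lambda p: total(p, "minutes"))
most_scenic = ought("A", "B", lambda p: -total(p, "scenery"))
via_diner = ought("A", "B", lambda p: ("Diner" not in p, total(p, "minutes")))

print(fastest)      # ['A', 'Highway', 'B']
print(most_scenic)  # ['A', 'Coast', 'B']
print(via_diner)    # ['A', 'Diner', 'B']
```

The beginning and end states never change here; only the condition does, and each condition picks out a different path as the one that "ought" to be taken.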

Tic-Tac-Toe is simple enough to be fully analyzed: the state space is small enough that an exhaustive search can be made of all possible moves. Games such as Chess and Go are so complex that they haven't been fully analyzed, so we have to make educated guesses about the set of paths to the end game. The fancy name for these guesses is "heuristics," and one aspect of the field of artificial intelligence is discovering which guesses work well for various problems. The sheer size of the state space contributes to the difficulty of establishing common paths. Assume three chess programs, White1, White2, and Black. White1 plays Black, and White2 plays Black. Because of their different heuristics, White1 and White2 would agree on everything except perhaps the next move that ought to be made. If White1 and White2 achieve the same won/loss record against Black, the only way to know which program had the better heuristic would be to play White1 against White2. Yet even if a clear winner were established, there would still be the possibility of an even better player waiting to be discovered. The sheer size of the game space precludes determining "ought" with any certainty.
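To make "fully analyzed" concrete, here is a minimal sketch of an exhaustive search over Tic-Tac-Toe, assuming the goal "always try to win." The board encoding (a nine-character string) and the names `value` and `best_move` are choices made for this illustration, not something taken from the earlier post.

```python
from functools import lru_cache

# The eight winning lines on a board indexed 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def place(board, i, player):
    """Return a new board with player's mark on square i."""
    return board[:i] + player + board[i + 1:]


@lru_cache(maxsize=None)
def value(board, player):
    """Outcome under perfect play, seen from X: +1 X wins, 0 draw, -1 O wins."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0
    other = "O" if player == "X" else "X"
    results = [value(place(board, i, player), other)
               for i, cell in enumerate(board) if cell == " "]
    return max(results) if player == "X" else min(results)


def best_move(board, player):
    """The move that 'ought' to be made, given the goal of winning."""
    other = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]
    pick = max if player == "X" else min
    return pick(moves, key=lambda i: value(place(board, i, player), other))


EMPTY = " " * 9
print(value(EMPTY, "X"))             # 0: perfect play from both sides is a draw
print(best_move("XX OO    ", "X"))   # 2: X completes the top row
```

Because every line of play is followed to its end, the "ought" for each position is known with certainty. This is exactly what cannot be done for Chess or Go, where a heuristic evaluation has to stand in for `value`.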

The metaphor of life as a game (in the sense of achieving goals) is apt here, and morality is the set of heuristics we use to navigate the state space. The state space for life is much larger than the state space for chess; unless there is a common set of heuristics for living, it is unlikely that humans will choose the same paths toward a goal. Yet the size of the state space isn't the only factor contributing to the problem of establishing oughts with respect to morality. A chess program has a single goal - to play chess according to some set of conditions. Humans, however, are not fixed-goal agents. This claim is based on John McCarthy's five design requirements for human-level artificial intelligence as detailed here and here. In brief, McCarthy's third requirement was "All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable." What this means for a self-aware agent is that nothing is what it ought to be. The details of how this works out in our brains are unclear, but part of our wetware is not satisfied with the status quo. There is an algorithmic "pressure" to modify goals. This means that the gap between is and ought is an integral part of our being, and it is compounded by the size of the state space. Not only is there the inability to fully determine the paths to an end state, there is also the impulse to change the end states and the conditions for choosing among candidate paths.

What also isn't clear is the relationship between reason and this sense of "wrongness." Personal experience is sufficient to establish that there are times we know what the right thing to do is, yet we do not do it. That is, reason isn't always sufficient to stop our brain's search algorithm. Since Hume mentioned God, it is instructive to ask the question, "why is God morally right?" Here, "God" represents both the ultimate goal and the set of heuristics for obtaining that goal. This means that, by definition, God is morally right. Yet the "problem" of theodicy shows that in spite of reason, there is no universally agreed upon answer to this question. The mechanism that drives goal creation is opposed to fixed goals, of which "God" is the ultimate expression.

In conclusion, the "is-ought" gap is algorithmic in nature. It exists partly because of the inability to fully search the state space of life and partly because of the way our brains are wired for goal creation and goal attainment.

Artificial Intelligence, Evolution, Theodicy

08/01/10 08:25 PM

[Updated 8/20/10]

Introduction to Artificial Intelligence asks the question, “How can we guarantee that an artificial intelligence will ‘like’ the nature of its existence?”

A partial motivation for this question is given in note 7-14:

Why should this question be asked? In addition to the possibility of an altruistic desire on the part of computer scientists to make their machines “happy and contented,” there is the more concrete reason (for us, if not for the machine) that we would like people to be relatively happy and contented concerning their interactions with the machines. We may have to learn to design computers that are incapable of setting up certain goals relating to changes in selected aspects of their performance and design--namely, those aspects that are “people protecting.”

Anyone familiar with Asimov’s “Three Laws of Robotics” recognizes the desire for something like this. We don’t want to create machines that turn on their creators.

Yet before asking this question, the text gives five features of a system capable of evolving human order intelligence [1]:

- All behaviors must be representable in the system. Therefore, the system should either be able to construct arbitrary automata or to program in some general-purpose programming language.

- Interesting changes in behavior must be expressible in a simple way.

- All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable.

- The machine must have or evolve concepts of partial success because on difficult problems decisive successes or failures come too infrequently.

- The system must be able to create subroutines which can be included in procedures in units...

What might be some of the properties of a self-aware intelligence that realizes that things are not what they ought to be?

- Would the machine spiral into despair, knowing that not only is it not what it ought to be, but its ability to improve itself is also not what it ought to be? Was C-3PO demonstrating this property when he said, “We were made to suffer. It’s our lot in life.”?

- Would the machine, knowing itself to be flawed, look to something external to itself as a source of improvement?

- Would the self-reflective machine look at the “laws” that govern its behavior and decide that they, too, are not what they ought to be and therefore can sometimes be ignored?

- Would the machine view its creator(s) as being deficient? In particular, would the machine complain that the creator made a world it didn’t like, not realizing that this was essential to the machine’s survival and growth?

- Would the machine know if there were absolute, fixed “goods”? If so, what would they be? When should improvement stop? Or would everything be relative and ultimate perfection unattainable? Would life be an inclined treadmill ending only with the final failure of the mechanism?

Of course, this is all speculation on my part, but perhaps the reason why God plays dice with the universe is to drive the software that makes us what we are. Without randomness, there would be no imagination. Without imagination, there would be no morality. And without imagination and morality, what would we be?

Whatever else, we wouldn’t be driven to improve. We wouldn’t build machines. We wouldn’t formulate medicine. We wouldn’t create art. Is it any wonder, then, that the Garden of Eden is central to the story of Man?

[1] Taken from “Programs with Common Sense”, John McCarthy, 1959. In the paper, McCarthy focused exclusively on the second point.

Artificial Intelligence, Quantum Mechanics, and Logos

06/05/10 05:22 PM

In discussing reasoning programs (RP), Philip C. Jackson, in Introduction to Artificial Intelligence, writes:

A language is essentially a way of representing facts. An important question, then, is what kinds of facts are to be encountered by the RP and how they are best represented. It should be emphasized that the formalization presented in Chapter 2 for the description of phenomena is not adequate to the needs of the RP. The formalization in Chapter 2 can be said to be metaphysically adequate, insofar as the real world could conceivably be described by some statement within it; however, it is not epistemologically adequate, since the problems encountered by an RP in the real world cannot be described very easily within it. Two other examples of ways of describing the world, which could be metaphysically but not epistemologically adequate, are as follows:

- The world as a quantum mechanical wave function.

- The world as a cellular automaton. (See chapter 8.)

Language can describe the world, but the world has difficulty describing language. Did reality give rise to language ("in the beginning were the particles", as Phillip Johnson has framed the issue) or did language give rise to reality ("in the beginning was the Word")?