The Adoring Fans Speak!

Still, the debates about Calvinism and free will continue apace over at Vox Popoli, the latest one being [link expired], from which the following two quotes are taken.

wrf3, i did say before that you are being willfully obtuse. but after this, I am starting to think that you are suffering from early dementia or you are not paying much attention to what you say.

--Toby Temple

And do remember when we call you a depraved immoral utterly worthless pile of filth that we're not insulting you... we're simply agreeing with your theology.

You pathetic pile of dung.

--Nate Winchester

I wonder if I should use these on the dust jacket of my book.

Morality in a Fantasy Novel

There wasn't a thinking being alive who deep down didn't feel fundamentally flawed.

This, of course, is one way McCarthy's third design requirement can manifest itself.

In Something M.Y.T.H. Inc., on page 27, another character explains morality in terms of the iterated prisoner's dilemma, even though he likely never took a course in game theory:

"What I mean is, when you're a soldier, you don't have to worry much about how popular you are with the enemy, 'cause mostly you're tryin' to make him dead and you don't expect him to like it. It's different doin' collection work, whether it's protection money or taxes, which is of course just another kind of protection racket. Ya gotta be more diplomatic 'cause you're gonna have to deal with the same people over and over again."

For another example of art revealing life, see the post The Telling.

C. S. Lewis: Evolutionary Hymn

Lead us, Evolution, lead us

Up the future's endless stair;

Chop us, change us, prod us, weed us.

For stagnation is despair:

Groping, guessing, yet progressing,

Lead us nobody knows where.

Wrong or justice, joy or sorrow,

In the present what are they

While there's always jam-tomorrow,

While we tread the onward way?

Never knowing where we're going,

We can never go astray.

To whatever variation

Our posterity may turn

Hairy, squashy, or crustacean,

Bulbous-eyed or square of stern,

Tusked or toothless, mild or ruthless,

Towards that unknown god we yearn.

Ask not if it's god or devil,

Brethren, lest your words imply

Static norms of good and evil

(As in Plato) throned on high;

Such scholastic, inelastic,

Abstract yardsticks we deny.

Far too long have sages vainly

Glossed great Nature's simple text;

He who runs can read it plainly,

'Goodness = what comes next.'

By evolving, Life is solving

All the questions we perplexed.

On then! Value means survival-

Value. If our progeny

Spreads and spawns and licks each rival,

That will prove its deity

(Far from pleasant, by our present,

Standards, though it may well be).

I was heretofore unaware of this poem. My reason for blogging about it is to note two of Lewis' observations about evolution, which I will use in a later post. The first is Lewis' poetic description of evolution as an open-ended search. The second is the linking of evolution and morality, with the supposition that an open-ended search for reproductive success leads to an open-ended morality.

Response to James

My only comment - and I'll leave it at this - is that, despite a very well worded argument, you seem to forget the very basis on which your argument stands. That being, using your own abstract allusion, though information (of any type, not just software of course) can be coded in zeros and ones, does not record itself. There needs be a CODER.

Under materialism, the coder is the universe itself. That is, the motion of the particles, operating under physical law, gave rise to the motion of electrons in certain patterns that make up our thoughts. Whether or not this is the true explanation is hotly contested. One side will argue that this is such an improbable occurrence that it couldn't be the right explanation. The other side will argue that improbable things happen. Both sides tailor their argument according to their preconceived notions about the nature of reality. Synchronously, John C. Wright has a droll take on it here.

It may be transmitted one way or another, either zeros and ones, or brain waves, or goal-seeking algorithms, but itself is something rather more transcendent. If you doubt that, then why would more than one person get upset over the same wrong? (Say invasion of a country you don't even live in) or be offended when you step on the foot of an elderly woman whom you don't even know?

This is a topic that I hope to get to this year. There is an explanation for this; see Axelrod's "The Evolution of Cooperation." For an idea of how the argument will go, see Cybertheology.

And if we "call steps leading toward a goal good" then that simply means any goal is good. Including, say, a despot's systematic murder of an entire people. There are few goals as effective as that for survival of a people, state or regime.

First, whether or not a goal is good depends on its relationship to other goals, and those goals exist in relationship to other goals, and so on. That's one reason why morality is such a difficult subject -- the size of the goal space is so large. It's much, much bigger than the complex games of Chess and Go.

Second, there may be times when it's necessary for one group to die so that another may live. We don't like that notion, because we may think that the reasoning that leads to the deaths of others could one day be used against us; on the other hand, listen to the reasons given for the necessity of using nuclear weapons against Japan in World War II. That there is no universal agreement on this shows how difficult a problem it is.

You also note that Axelrod's game theory shows how the golden rule can arise in biological systems. Well, if that happens so "naturally," why hasn't it happened in any of the (numerous beyond count) organisms that have, on an evolutionary scale, been here longer than Man? Say, for instance, the shark? Or the ant, which has a complicated social system?

It has happened, and Axelrod (with William D. Hamilton) gives examples of this in chapter 5: The Evolution of Cooperation in Biological Systems.

We are not necessarily walking conundrums, BTW. …

Then you're a better man than St. Paul, who wrote:

I do not understand my own actions. For I do not do what I want, but I do the very thing I hate. Now if I do what I do not want, I agree that the law is good. But in fact it is no longer I that do it, but sin that dwells within me. For I know that nothing good dwells within me, that is, in my flesh. I can will what is right, but I cannot do it. For I do not do the good I want, but the evil I do not want is what I do. Now if I do what I do not want, it is no longer I that do it, but sin that dwells within me. So I find it to be a law that when I want to do what is good, evil lies close at hand. For I delight in the law of God in my inmost self, but I see in my members another law at war with the law of my mind, making me captive to the law of sin that dwells in my members. Wretched man that I am! Who will rescue me from this body of death? [Rom 7:15-24]

Which leads me to the last point: No, the Bible doesn't teach that Jesus died because of man's inability to follow any external code.

Actually, it does. Again, St. Paul wrote, "I do not nullify the grace of God; for if justification comes through the law, then Christ died for nothing." (Gal 2:21) and "For if a law had been given that could make alive, then righteousness would indeed come through the law." (Gal 3:21).

McCarthy, Hofstadter, Hume, AI, Zen, Christianity

For example, in Gödel, Escher, Bach, Hofstadter writes:

It is an inherent property of intelligence that it can jump out of the task which it is performing, and survey what it has done; it is always looking for, and often finding, patterns. (pg. 37)

Over 400 pages later, he repeats this idea:

This drive to jump out of the system is a pervasive one, and lies behind all progress in art, music, and other human endeavors. It also lies behind such trivial undertakings as the making of radio and television commercials. (pg. 478).

It seems to me that McCarthy's third requirement is behind this drive to "jump out" of the system. If a system is to be improved, it must be analyzed and compared with other systems, and this requires looking at a system from the outside.

Hofstadter then ties this in with Zen:

In Zen, too, we can see this preoccupation with the concept of transcending the system. For instance, the kōan in which Tōzan tells his monks that "the higher Buddhism is not Buddha". Perhaps, self transcendence is even the central theme of Zen. A Zen person is always trying to understand more deeply what he is, by stepping more and more out of what he sees himself to be, by breaking every rule and convention which he perceives himself to be chained by – needless to say, including those of Zen itself. Somewhere along this elusive path may come enlightenment. In any case (as I see it), the hope is that by gradually deepening one's self-awareness, by gradually widening the scope of "the system", one will in the end come to a feeling of being at one with the entire universe. (pg. 479)

Note the parallels to, and differences with, Christianity. Jesus said to Nicodemus, "You must be born again." (John 3:3) The Greek includes the idea of being born "from above" and "from above" is how the NRSV translates it, even though Nicodemus responds as if he heard "again". In either case, you must transcend the system. The Zen practice of "breaking every rule and convention" is no different from St. Paul's charge that we are all lawbreakers (Rom 3:9-10,23). The reason we are lawbreakers is that the law is not what it ought to be. And it is not what it ought to be because of our inherent knowledge of good and evil which, if McCarthy is right, is how our brains are wired. Where Zen and Christianity disagree is that Zen holds that man can transcend the system by his own effort while Christianity says that man's effort is futile: God must effect that change. In Zen, you can break outside the system; in Christianity, you must be lifted out.

Note, too, that both have the same end goal, where finally man is at "rest". The desire to "step out" of the system, to continue to "improve", is finally at an end. The "is-ought" gap is forever closed. The Zen master is "at one with the entire universe" while for the Christian, the New Jerusalem has descended to Earth, the "sea of glass" that separates heaven and earth is no more (Rev 4:6, 21:1) so that "God may be all in all." (1 Cor 15:28). Our restless goal-seeking brain is finally at rest; the search is over.

All of this is a consequence of one simple design requirement: that everything must be improvable.

The Is-Ought Problem Considered As A Question Of Artificial Intelligence

In every system of morality, which I have hitherto met with, I have always remarked, that the author proceeds for some time in the ordinary way of reasoning, and establishes the being of a God, or makes observations concerning human affairs; when of a sudden I am surprized to find, that instead of the usual copulations of propositions, is, and is not, I meet with no proposition that is not connected with an ought, or an ought not. This change is imperceptible; but is, however, of the last consequence. For as this ought, or ought not, expresses some new relation or affirmation, it is necessary that it should be observed and explained; and at the same time that a reason should be given, for what seems altogether inconceivable, how this new relation can be a deduction from others, which are entirely different from it.

This is the "is-ought" problem: in the area of morality, how to derive what ought to be from what is. Note that it is the domain of morality that seems to be the cause of the problem; after all, we derive ought from is in other domains without difficulty. Artificial intelligence research can show why the problem exists in one field but not others.

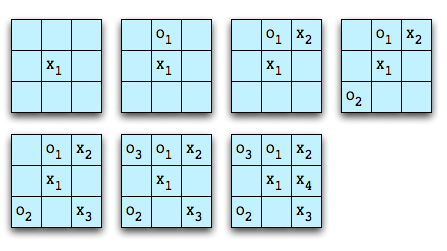

The is-ought problem is related to goal attainment. We return to the game of Tic-Tac-Toe as used in the post The Mechanism of Morality. It is a simple game, with a well-defined initial state and a small enough state space that the game can be fully analyzed. Suppose we wish to program a computer to play this game. There are several possible goal states:

- The computer will always try to win.

- The computer will always try to lose.

- The computer will play randomly.

- The computer will choose between winning or losing based upon the strength of the opponent. The more games the opponent has won, the more the computer plays to win.
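The goals listed above can be sketched in code. What follows is a minimal illustration, not the program from the earlier post: the board is a nine-character string, and the names `minimax`, `choose_move`, and `pick_goal` are made up for this example. It assumes that "try to lose" means choosing the move that is worst for the computer when the opponent still plays to win, and that the fourth goal can be modeled by playing to win with a probability equal to the opponent's win rate. Both are assumptions of the sketch.

```python
import random
from functools import lru_cache

# The eight winning lines on a 3x3 board whose cells are indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def winner(board):
    """Return "X" or "O" if that side has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

def place(board, i, player):
    """Return a new board with `player` placed on cell i."""
    return board[:i] + player + board[i + 1:]

def other(player):
    return "O" if player == "X" else "X"

@lru_cache(maxsize=None)
def minimax(board, player):
    """Value for X (+1 win, 0 draw, -1 loss) with `player` to move,
    assuming both sides play to win from here on."""
    w = winner(board)
    if w:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0
    values = [minimax(place(board, i, player), other(player))
              for i, cell in enumerate(board) if cell == " "]
    return max(values) if player == "X" else min(values)

def choose_move(board, player, goal):
    """Pick a move for `player` under the goal "win", "lose", or "random"."""
    moves = [i for i, cell in enumerate(board) if cell == " "]
    if goal == "random":
        return random.choice(moves)
    sign = 1 if player == "X" else -1

    def score(i):
        # Outcome from `player`'s point of view after moving to cell i.
        return sign * minimax(place(board, i, player), other(player))

    return max(moves, key=score) if goal == "win" else min(moves, key=score)

def pick_goal(opponent_wins, games_played):
    """Hypothetical rule for the fourth goal: the more the opponent
    has won, the more likely the computer is to play to win."""
    rate = opponent_wins / games_played if games_played else 0.5
    return "win" if random.random() < rate else "lose"
```

The same exhaustive evaluation serves every goal; only the selection rule changes. On the board `"XX OO    "` with X to move, playing to win takes cell 2 and completes the top row, while playing to lose picks a cell that lets O complete the middle row.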

As another example, suppose we wish to drive from point A to point B. The final goal is well established but there are likely many different paths between A and B. Additional considerations, such as shortest driving time, the most scenic route, the location of a favorite restaurant for lunch, and so on influence which of the several paths is chosen.

Therefore, we can characterize the is-ought problem as a beginning state B, an end state E, a set P of paths from B to E, and a set of conditions C. Then "ought" is the path in P that satisfies the constraints in C. Therefore, the is-ought problem is a search problem.
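As a rough sketch of this characterization, the code below enumerates the set P of paths from B to E in a small road map and then picks the path that satisfies the conditions in C. The map, the driving times, and the function names (`all_paths`, `minutes`, `ought`) are all invented for illustration. Choosing the cheapest qualifying path is one possible reading of "satisfies the constraints", not the only one.

```python
# Hypothetical road map: each entry maps a place to its neighbors,
# with the driving time in minutes for that leg.
ROADS = {
    "B": {"Highway": 30, "Coast": 50},
    "Highway": {"E": 30},
    "Coast": {"Diner": 20, "E": 40},
    "Diner": {"E": 25},
}

def all_paths(graph, start, end, path=None):
    """Yield every path from start to end that visits no place twice."""
    path = (path or []) + [start]
    if start == end:
        yield path
        return
    for nxt in graph.get(start, {}):
        if nxt not in path:
            yield from all_paths(graph, nxt, end, path)

def minutes(path):
    """Total driving time along a path."""
    return sum(ROADS[a][b] for a, b in zip(path, path[1:]))

def ought(paths, conditions, cost):
    """Return the cheapest path that meets every condition, or None."""
    allowed = [p for p in paths if all(cond(p) for cond in conditions)]
    return min(allowed, key=cost) if allowed else None

P = list(all_paths(ROADS, "B", "E"))
fastest = ought(P, [], minutes)
with_lunch = ought(P, [lambda p: "Diner" in p], minutes)
```

With no conditions the highway route is chosen; adding the condition that the path must pass the diner changes which path "ought" to be taken, even though B and E stay the same.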

The game of Tic-Tac-Toe is simple enough that it can be fully analyzed: the state space is small enough that an exhaustive search can be made of all possible moves. Games such as Chess and Go are so complex that they haven't been fully analyzed, so we have to make educated guesses about the set of paths to the end game. The fancy name for these guesses is "heuristics" and one aspect of the field of artificial intelligence is discovering which guesses work well for various problems. The sheer size of the state space contributes to the difficulty of establishing common paths. Assume three chess programs, White1, White2, and Black. White1 plays Black, and White2 plays Black. Because of different heuristics, White1 and White2 would agree on everything except perhaps the next move that ought to be made. If White1 and White2 achieve the same won/loss record against Black, the only way to know which program had the better heuristic would be to play White1 against White2. Yet even if a clear winner were established, there would still be the possibility of an even better player waiting to be discovered. The sheer size of the game space precludes determining "ought" with any certainty.
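To give a feel for what "small enough" means, here is a minimal sketch that walks the entire Tic-Tac-Toe state space. The function names are invented for this example, and it assumes X moves first and that play stops as soon as someone has three in a row.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board whose cells are indexed 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def is_over(board):
    """True if someone has three in a row or the board is full."""
    won = any(board[a] != " " and board[a] == board[b] == board[c]
              for a, b, c in LINES)
    return won or " " not in board

def successors(board, player):
    """All boards reachable by one move of `player`."""
    return [board[:i] + player + board[i + 1:]
            for i, cell in enumerate(board) if cell == " "]

@lru_cache(maxsize=None)
def count_games(board, player):
    """Number of distinct move sequences from `board` to the end of a game."""
    if is_over(board):
        return 1
    nxt = "O" if player == "X" else "X"
    return sum(count_games(b, nxt) for b in successors(board, player))

def reachable_positions():
    """Set of every board that can arise in legal play, including the empty one."""
    seen, stack = set(), [(" " * 9, "X")]
    while stack:
        board, player = stack.pop()
        if board in seen:
            continue
        seen.add(board)
        if not is_over(board):
            nxt = "O" if player == "X" else "X"
            stack.extend((b, nxt) for b in successors(board, player))
    return seen

games = count_games(" " * 9, "X")       # 255168 complete games
positions = len(reachable_positions())  # 5478 legal positions
```

A few thousand positions can be searched exhaustively in well under a second. Nothing comparable is possible for Chess or Go, which is why heuristics are needed there.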

The metaphor of life as a game (in the sense of achieving goals) is apt here and morality is the set of heuristics we use to navigate the state space. The state space for life is much larger than the state space for chess; unless there is a common set of heuristics for living, it is unlikely that humans will choose the same paths toward a goal. Yet the size of the state space isn't the only contributing factor to the problem of establishing oughts with respect to morality. A chess program has a single goal - to play chess according to some set of conditions. Humans, however, are not fixed-goal agents. This claim rests on John McCarthy's five design requirements for human level artificial intelligence, as detailed here and here. In brief, McCarthy's third requirement was "All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable." What this means for a self-aware agent is that nothing is what it ought to be. The details of how this works out in our brains are unclear, but part of our wetware is not satisfied with the status quo. There is an algorithmic "pressure" to modify goals. This means that the gap between is and ought is an integral part of our being, which is compounded by the size of the state space. Not only is there the inability to fully determine the paths to an end state, there is also the impulse to change the end states and the conditions for choosing among candidate paths.

What also isn't clear is the relationship between reason and this sense of "wrongness." Personal experience is sufficient to establish that there are times we know what the right thing to do is, yet we do not do it. That is, reason isn't always sufficient to stop our brain's search algorithm. Since Hume mentioned God, it is instructive to ask the question, "why is God morally right?" Here, "God" represents both the ultimate goal and the set of heuristics for obtaining that goal. This means that, by definition, God is morally right. Yet the "problem" of theodicy shows that in spite of reason, there is no universally agreed upon answer to this question. The mechanism that drives goal creation is opposed to fixed goals, of which "God" is the ultimate expression.

In conclusion, the "is-ought" gap is algorithmic in nature. It exists partly because of the inability to fully search the state space of life and partly because of the way our brains are wired for goal creation and goal attainment.

Bad Arguments Against Materialism

I want to examine and expose bad theistic arguments against materialism, which generally reduce to the idea that materialism cannot explain abstract thought in general and morality in particular.

As a software engineer, I know that software -- which is abstract thought -- can be encoded in material: zeros and ones flowing through NAND gates arranged in certain ways. Wire up NAND gates one way and you have a circuit that adds (e.g. here). Wire them up another way and you have a circuit that can subtract. Wire them up yet another way and you have memory. A more complicated arrangement could recognize whether or not a given circuit is an adder (i.e. one implements "this adds," the other implements "that is an adder"). If something can be expressed as software, it can be expressed as hardware. The relationships between the basic parts, whether they are NAND gates, NOR gates, or something else, and the movement of electrons (or photons) between them encode the abstract thought. Yet Lopez wrote:
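The claim about NAND gates can be made concrete with a small simulation. This is a sketch in software rather than a circuit diagram: every function other than `nand` is built only by calling `nand`, which stands in for wiring gates together. The function names and the default 8-bit width are choices made for this example, and `is_adder` recognizes an adder by exhaustively testing its behavior, which is just one way such a recognizer might work. Memory (a latch) is left out to keep the sketch short.

```python
def nand(a, b):
    """The only primitive gate: output is 0 only when both inputs are 1."""
    return 1 - (a & b)

# Everything below is "wired up" purely from NAND gates.
def not_(a):
    return nand(a, a)

def and_(a, b):
    return not_(nand(a, b))

def or_(a, b):
    return nand(not_(a), not_(b))

def xor(a, b):
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def full_adder(a, b, carry_in):
    """Add three bits; return (sum_bit, carry_out)."""
    partial = xor(a, b)
    return xor(partial, carry_in), or_(and_(a, b), and_(partial, carry_in))

def ripple(x, y, bits, carry, invert_y=False):
    """Chain full adders bit by bit; return (result, final_carry)."""
    result = 0
    for i in range(bits):
        a = (x >> i) & 1
        b = (y >> i) & 1
        if invert_y:
            b = not_(b)
        s, carry = full_adder(a, b, carry)
        result |= s << i
    return result, carry

def add(x, y, bits=8):
    """One wiring: this adds."""
    return ripple(x, y, bits, 0)

def subtract(x, y, bits=8):
    """Another wiring: two's complement subtraction (invert y, carry in 1)."""
    return ripple(x, y, bits, 1, invert_y=True)

def is_adder(circuit, bits=4):
    """Recognizer: does `circuit` behave like an adder on every input?"""
    for x in range(2 ** bits):
        for y in range(2 ** bits):
            total = x + y
            if circuit(x, y, bits) != (total % 2 ** bits, total >> bits):
                return False
    return True
```

Here `add(5, 3)` gives `(8, 0)` and `subtract(5, 3)` gives `(2, 1)`. `is_adder(add)` is true while `is_adder(subtract)` is false, which is the distinction between "this adds" and "that is an adder".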

For example, while electrical impulses may occur when a person has particluar [sic] thoughts or feelings (or propositional qualities, per Greg Koukl), the impulses themselves are not the thoughts or feelings.

For this to be true, those thoughts have to exist independently of the hardware which is our minds. They have to exist in the mind of God. But he hasn't shown that this is the case nor do I know how to prove it, even though I think it true ["in Him we live and move and have our being." -- Acts 17:28]. Just as the materialist cannot prove his position that the thoughts cease when the electrons stop moving (see my post Materialism, Theism, and Information where I have this argument with a materialist), the theist also hasn't made their case. It's one thing to cite Scripture, it's quite another to show why it must be so independently of special revelation.

That thought can be encoded in hardware should be familiar to Christians. After all, the Word became Flesh. Where the theist and materialist differ is in the initial conditions. The materialist will say that matter is made of atoms, and atoms are made of protons, neutrons, and electrons; and protons and neutrons are made up of quarks. One overview of the "particle zoo" is here. String theory offers the idea that below the currently known elementary particles lie even smaller one dimensional oscillating lines. Do strings really exist? We don't know. What we do know is that simple things combine to make more complex things, and more complex things combine to make even more complex things. The greater the number of connections between things, the greater the complexity. Perhaps this is why the human mind tries to reduce things to their most simple components and this is what drives the search for strings in one discipline and God in another. Whether it is clearly revealed in Scripture or not, there has certainly been the idea that God is immaterial, irreducible, and simple. The materialist will say that at the bottom lies matter and the ways it combines. This combining, recombining, and recombining again eventually resulted in self-aware humans. Genetic algorithms, after all, do work. The theist says that at the bottom lies an immaterial self-aware Person who created matter and, eventually, self-aware people. In one camp, self-awareness is emergent; in another it is fundamental. After all, when Moses asked God to reveal His name, He said, "I am who I am."

If the existence of self-aware thought is one way theists argue against materialism, another is the existence of morality, which theists claim cannot be explained by science. Lopez also wrote:

Indeed, if our entire essence - the totality of who we are, was reducible solely to particles in motion, then what justification would there be for any concept of an objective morality? What grounding would there be for any application - or imposition - of morality from one human being to another? Survival of the fittest? Perpetuation of our species? The selfish gene?

The materialist answer is fairly simple. Morality is what we call the goal-seeking algorithm(s) in our brain (see my article The Mechanism of Morality). Basically, we call steps leading toward a goal good, and steps leading away bad. Robert Axelrod, in his ground-breaking book The Evolution of Cooperation, showed how strategies such as cooperation, forgiveness, and non-covetousness could arise between competing selfish agents. Morality is then objective the way language is objective. If language is the means whereby a community uses arbitrary symbols to share meaning, morality is the means whereby a community shares goals. The grounding for the imposition of one moral system over another would then be whether or not it leads to greater reproductive success, in exactly the same way that English is currently the lingua franca of science, technology, and business.

If morality is a property of the goal-seeking behavior of self-aware beings, and the goal is reproductive success, then certain strategies will be more effective than others. Axelrod used game theory to show how something like the golden rule can arise in biological systems. There is one sense in which the "game" of life is like the game of chess -- both have state spaces so large that it is impossible to fully analyze all strategies. Life, like chess, requires us to develop heuristics for winning the game. It's a field that's wide open for research via computer simulation. But even if we can say with confidence which choices ought to be made, this leads to the next issue.
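For readers who have not seen this kind of simulation, here is a minimal sketch of an iterated prisoner's dilemma. It is not a reproduction of Axelrod's tournaments. It assumes the commonly cited payoffs (3 each for mutual cooperation, 1 each for mutual defection, 5 and 0 when one side defects against a cooperator) and a 200-round match. The strategy and function names are chosen for this example.

```python
# Payoffs as (first player, second player); "C" = cooperate, "D" = defect.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def tit_for_tat(my_history, their_history):
    """Cooperate first, then copy the opponent's previous move."""
    return their_history[-1] if their_history else "C"

def always_defect(my_history, their_history):
    return "D"

def play(strategy_a, strategy_b, rounds=200):
    """Play a repeated game and return the two total scores."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b

cooperators = play(tit_for_tat, tit_for_tat)      # (600, 600)
defectors = play(always_defect, always_defect)    # (200, 200)
mixed = play(tit_for_tat, always_defect)          # (199, 204)
```

Two reciprocators earn three times what two defectors earn, and a reciprocator loses only narrowly to a defector because it is exploited just once. That is the sense in which a "do unto others" heuristic can pay off between selfish agents who expect to meet again.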

I am puzzled by the theist's insistence on the existence of and necessity for an objective morality: something written in stone which solves the "is-ought" problem, to which all mankind (and extraterrestrial life, if it exists) must agree "this ought to be," i.e. "these are the goals toward which all must strive, whether freely or not." The materialist isn't bothered by moral relativism any more than he is bothered by the fact that there are different languages. It's the way our brains work. The goal-seeking algorithm in our brain tends to reject fixed goals. We are walking conundrums that want to choose yet aren't satisfied by the choices we make. John McCarthy recognized this in Programs with Common Sense; Axelrod found it via computer simulation in The Evolution of Cooperation; Hume exposed the problem, but not the cause; St. Paul made it the basis of his exposition of the Gospel in the book of Romans and drove the point home in his letter to the Galatians; and it's central to the story of the creation of man in Genesis (see What Really Happened in Eden). After all, the central claim of Christianity is that Jesus died and rose from the dead because of man's inability to follow any external moral code. To say that the need for an objective external standard is an argument against materialism completely misses the point of Christianity. We know that our brains are wired for teleological thinking; people with Asperger's have been shown to be deficient in this area (People with Asperger's less likely to see purpose behind the events in their lives). The theist says that God represents the ultimate goal, the ultimate purpose, the solution to the is-ought problem; the materialist will say that this is just something that minds with our properties wish they had. It's how scientists say we're wired; it's how Christianity says we're wired. Arguing that materialism can't support an objective moral standard won't change that wiring.

In summary, then, neither abstract thought nor morality is a problem for a materialist, as currently argued by theists.

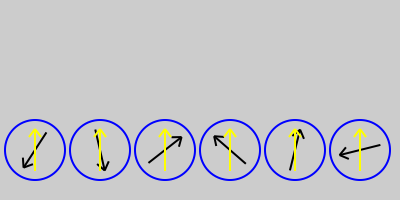

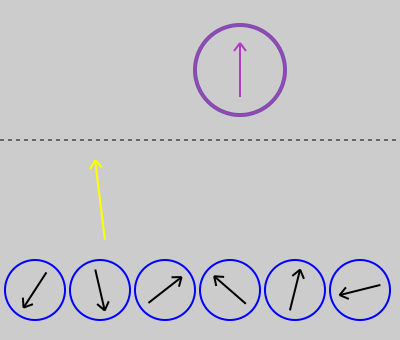

Modeling Morality: The End of Time

Since I hinted at a change of models, I need to present what I think the model will be when God’s kingdom is fully come:

Model #7

The dashed line in model #6 disappears, reminiscent of Revelation 21:1, where St. John wrote, “Then I saw a new heaven and a new earth; for the first heaven and the first earth had passed away, and the sea was no more.” The sea, of course, does not refer to a literal body of water, but the “sea of glass, like crystal” that separates the throne of God in heaven from earth [Rev 4:6].

The thick black line in model #7 represents the “great chasm” of Luke 16:26. Below the line are those who have clung to their will. As C. S. Lewis wrote in The Great Divorce:

There are only two kinds of people in the end: those who say to God, “Thy will be done,” and those to whom God says, in the end, “Thy will be done.”

I think Lewis, and model #7, both accurately reflect the Biblical view of the eternal state.

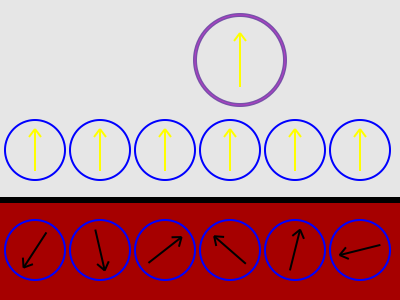

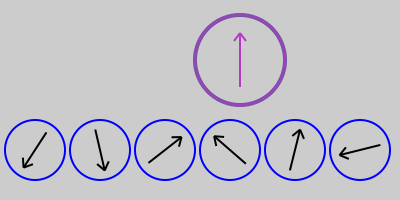

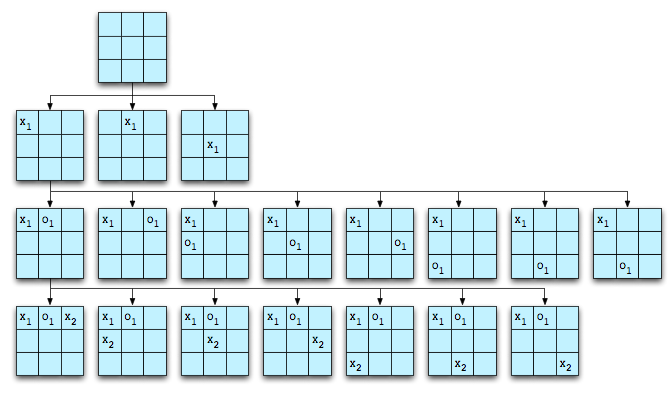

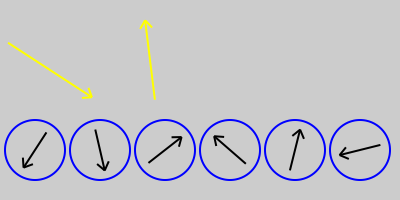

Modeling Morality

Here, six different models are presented. Three are from an atheistic worldview and three are from a theistic worldview. An arrow represents the direction and location of a “moral compass,” which represents goal states that are deemed to be “good.” The arrows, being static, may suggest a fixed moral compass. At least for humans, we don’t have fixed goals (cf. here and here), so that a moving arrow might be a better representation. However, I’m not going to use animation with these pictures.

These models will be used in later posts when examining various arguments that have a moral basis, since in many cases, the model is assumed and an incorrect model will lead to an incorrect argument. Of course, this raises the question of which model corresponds to reality.

The first three models assume an atheistic worldview.

The first model is simple: morality is internal to self-aware goal seeking agents. There is no external standard of morality, since there is no purpose to the universe.

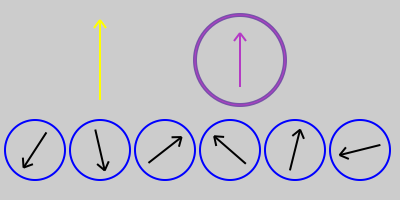

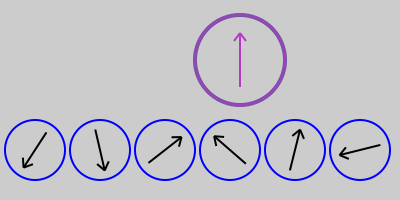

This model just adds an external moral standard. What that standard might be isn’t specified here and is the subject of much speculation elsewhere. In the model, no agent’s moral compass aligns with the external standard, reflective of the human condition that we don’t always choose goals that we know we should.

If morality is related to goal seeking behavior, then what might be the goal(s) of nature? Why should any other moral agent conform to the external standard?

Here, a common moral standard is not found in “nature,” but is internal to each agent. It reflects the idea that man is basically born “good,” but, over time, drifts from a moral ideal. As before, it reflects the common experience that we don’t choose what we ought to choose.

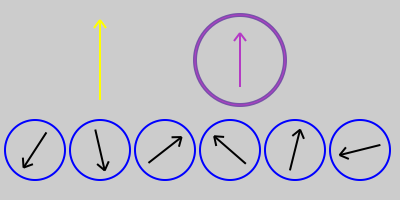

The next three models reflect various theistic views.

This model reflects that God, and only God, defines what is good and that such goodness is internal to God. Moral agents are expected to align their compasses with God’s. Either God reveals His moral compass to man, or man can somehow discern God’s moral compass through the construction of nature. I will argue that this model is not suitable for this phase of history when model #6 is presented.

This model adds an external standard to which both God and moral agents should conform. This view of the model shows a “good” god, i.e. one who always conforms to the external standard. This model is frequently assumed in arguments that try to show that God is morally wrong, by attempting to show that God’s moral compass is not aligned with some external standard.

This model, regardless of the orientation of the external compass, is a flawed model (at least in Christian theology) since God is not subject to any external standard. This should be obvious since everything “external” to God was created by God and is therefore subject to Him.

In this model, God exists apart from all other moral agents and is the source of His moral compass. Within creation, however, He has decreed a moral standard to which moral agents should conform. In terms of goal space, His goals are not always our goals.

I think that the Bible makes it clear that:

- God is not only “good,” but He defines “goodness.”

- There are “goods,” as shown by His behavior, that man is not permitted to pursue. That is, what is good for God isn’t necessarily good for man, which would be the case if there were a common moral compass.

Similarly, Leviticus 19:18 says, “You shall not take vengeance or bear a grudge against any of your people, but you shall love your neighbor as yourself: I am the LORD.” God reserves vengeance for Himself: “Vengeance is mine, I will repay.” [Heb 10:30].

That this model is correct from a Bible perspective should be more obvious than it is; after all, we “see through a glass darkly.” He is God and we are not. The Creator sets the goals/rules for His creation; yet He has His own goals/rules.

These models provide a framework for how various answers to questions about morality arise. Consider the question, “Is the difference between good and bad whatever God says it is? Or is God good because he conforms to a standard of goodness?” Note that this question is really asking “what is the correct model of theistic morality?”

With model #4, the answer would be “God is good because He Himself is the standard for goodness.”

With model #5, the answer is “He conforms to a standard of goodness.”

With model #6, the answer is “both.” For us, the difference between good and evil is whatever God says it is. For God, He is His own standard of goodness. He determines the goals we are to pursue and, in this life, those goals aren’t necessarily the goals He pursues for Himself.

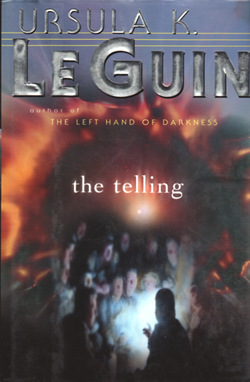

The Telling

The Telling, by Ursula K. LeGuin, is the story of an earth woman, Sutty, who is sent as an observer to the world Aka by the galactic council called the Ekumen. Aka is a world where a materialistic, atheistic, hierarchical culture has taken over and brutally suppressed the former “spiritual” communal culture. The bulk of the story deals with Sutty’s attempts to discover and, perhaps, help preserve that second culture.

I found the following passage interesting, as it expresses poetically what I have attempted to describe using concepts from artificial intelligence about the difference between animals and men: that animals are fixed goal creatures while man, having no fixed goal, creates his own goals. LeGuin writes:

I have to assign this book to second tier status; while it was a moderately enjoyable read, it isn’t “The Left Hand of Darkness” or “A Wizard of Earthsea”.

What Really Happened In Eden?

Excerpting passages from Genesis 2 and 3 we read:

And the LORD God planted a garden in Eden, in the east; and there he put the man whom he had formed. Out of the ground the LORD God made to grow every tree that is pleasant to the sight and good for food, the tree of life also in the midst of the garden, and the tree of the knowledge of good and evil. ... The LORD God took the man and put him in the garden of Eden to till it and keep it. And the LORD God commanded the man, “You may freely eat of every tree of the garden; but of the tree of the knowledge of good and evil you shall not eat, for in the day that you eat of it you shall die.” ... So when the woman saw that the tree was good for food, and that it was a delight to the eyes, and that the tree was to be desired to make one wise, she took of its fruit and ate; and she also gave some to her husband, who was with her, and he ate. Then the eyes of both were opened, and they knew that they were naked; and they sewed fig leaves together and made loincloths for themselves. [Genesis 2:8-9, 15-17, 3:6-7, NRSV]

One answer to the question “how did Eve know the fruit was good for food” is that this just means that Eve understood that the fruit was edible; that is, good in the physical sense, but not in the moral sense.

This answer betrays a misunderstanding of how our minds work. As previously noted, “good” and “evil” arise from goal-seeking behavior of self-aware beings. “Good” is our evaluation of that which leads to a goal; “evil” is that which leads away from a goal. Eve exhibited goal-seeking behavior when she observed that the fruit would satisfy her physical needs. Now, animals also exhibit this same goal-seeking behavior, yet we typically believe that they are not moral creatures. Sometimes the reason given for this is because they are not self-aware. In particular, they neither reflect on their choices, nor are they aware of the consequences of their actions. Yet from the Genesis account, it seems obvious that not only was Eve self-aware, but that she had been informed of the consequences of her actions. She at least knew the consequences, even if perhaps the serpent was able to put doubt in her mind. Since Eve was a self-aware goal-seeking individual who knew, if not fully understood, the consequences of her actions, I don’t agree with the supposition that she wasn’t morally awake.

What, then, is this story trying to tell us? I think the answer is found in the repetition of the phrase, “and God saw that it was good.” I believe this means that every creature that exhibited goal-seeking behavior used the external standard set by God as its goal. That is, animals, Adam, and Eve exhibited fixed goal-seeking behavior with the goals set by God. Zoologists say that animal behavior is driven by the four “f’s”: feeding, fighting, fleeing, and reproducing. When Adam and Eve ate of the “fruit” (not that this needs to be taken literally, mind you), they no longer had fixed external goals. Their behavior was thereafter driven by goal-seeking behavior based upon variable internal goals. When Scripture says that they would become “like God, knowing good and evil”, this meant that they became capable of setting their own goals, apart from God. As animals are driven by the four “f’s”, we became driven by five: feed, fight, flee, reproduce, and fix. This additional attribute is shown in the Genesis account by the pair clothing their nakedness with fig leaves. It is what enables us to build machines and create works of art.

Am I mistaken in this interpretation? Am I reading McCarthy’s design requirements for human capable intelligence back into Genesis? Could it be just the case that McCarthy and the author of Genesis were shrewd observers of the human condition and came to the same conclusion about human nature? Is it coincidence that an atheist luminary in computer science and an ancient writer described the same thing in different ways? Could it be that science and Scripture aren’t at odds, at least when it comes to the software run by our brains?

The Mechanism of Morality

Suppose we want to teach a computer to play the game of Tic-Tac-Toe. Tic-Tac-Toe is a game between two players that takes place on a 3x3 grid. Each player has a marker, typically X and O, and the object is for a player to get three markers in a row: horizontally, vertically, or diagonally.

One possible game might go like this:

Player X wins on the fourth move. Player O lost the game on the first move since every subsequent move was an attempt to block a winning play by X. X set an inescapable trap on the third move by creating two simultaneous winning positions.

In general, game play starts with an initial state, moves through intermediate states, and ends at a goal state. For a computer to play a game, it has to be able to represent the game states and determine which of those states advance it toward a goal state that results in a win for the machine.

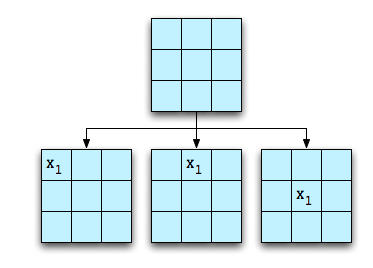

Tic-Tac-Toe has a game space that is easily analyzed by “brute force.” For example, beginning with an empty board, there are three moves of interest for the first player:

The other possible starting moves can be modeled by rotation of the board. The computer can then expand the game space by making all of the possible moves for player O. Only a portion of this will be shown:
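The reduction by rotation can be checked with a short sketch. The square numbering and the function names here are my own assumptions for illustration, not anything taken from the original program:

```python
# Squares are numbered 0..8, row by row (an assumed convention):
#   0 1 2
#   3 4 5
#   6 7 8

def rotate(square: int) -> int:
    """Return where a square lands after turning the board 90 degrees clockwise."""
    row, col = divmod(square, 3)
    return col * 3 + (2 - row)

def orbit(square: int) -> frozenset:
    """Collect every square reachable from `square` by repeated quarter turns."""
    seen = set()
    while square not in seen:
        seen.add(square)
        square = rotate(square)
    return frozenset(seen)

# Group the nine opening squares into classes that rotation cannot tell apart.
opening_classes = {orbit(s) for s in range(9)}
for cls in sorted(opening_classes, key=min):
    print(sorted(cls))
```

The three groups that come out are the corners, the edges, and the center, which is why only three opening moves need to be examined.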

The game space can be expanded until all goal states (X wins, O wins, or draw game) are reached. Including the initial empty board, there are 4,163 possible board configurations.

Assuming we want X to play a perfect game, we can “prune” the tree and remove those states that inevitably lead to a win by O. Then X can use the pruned game state and choose those moves that lead to the greatest probability of a win. Furthermore, if we assume that O, like X, plays a perfect game, we can prune the tree again and remove the states that inevitably lead to a win by X. When we do this, we find that Tic-Tac-Toe always results in a draw when played perfectly.
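The claim that perfect play ends in a draw can be checked by brute force. What follows is a minimal sketch using plain minimax with memoization rather than the exact pruning procedure described above; the board encoding (a 9-character string with "." for an empty square) and the names `winner` and `value` are assumptions made for the example:

```python
from functools import lru_cache

# The eight rows, columns, and diagonals, as triples of square indices.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def winner(board: str):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None

@lru_cache(maxsize=None)
def value(board: str, player: str) -> int:
    """Value of the position for X with `player` to move: +1 win, 0 draw, -1 loss."""
    won = winner(board)
    if won == "X":
        return 1
    if won == "O":
        return -1
    if "." not in board:
        return 0  # board is full with no winner: a draw
    other = "O" if player == "X" else "X"
    results = [value(board[:i] + player + board[i + 1:], other)
               for i, square in enumerate(board) if square == "."]
    # X picks the best outcome for X; O picks the worst outcome for X.
    return max(results) if player == "X" else min(results)

print(value("." * 9, "X"))  # prints 0: the empty board is a draw under perfect play
```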

While a human could conceivably evaluate the entire game space of 4,163 boards, most don’t play this way. Instead, the human player develops a set of “heuristics” to try to determine how close a particular board is to a goal state. Such heuristics might include “if there is a row with two X’s and an empty square, place an X in the empty square for the win” and “if there is a row with two O’s and an empty square, place an X in the empty square for the block.” More skilled players will include, “If there are two intersecting rows where the square at the intersection is empty and there is one X in each row, place an X in the intersecting square to set up a forced win.” A similar heuristic blocks a forced win by O. This is not a complete set of heuristics for Tic-Tac-Toe. For example, what should X’s opening move be?
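These heuristics translate fairly directly into code. The sketch below is one possible reading of them, not a complete player: the board encoding ("." for empty), the function names, and the fallback of taking the first empty square are all assumptions. The fork rule is written as "find a square that creates two simultaneous threats," which I take to be equivalent to the intersecting-rows description above.

```python
# The eight rows, columns, and diagonals, as triples of square indices.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def completing_square(board: str, mark: str):
    """Return the empty square in a line already holding two `mark`s, or None."""
    for line in LINES:
        marks = [board[i] for i in line]
        if marks.count(mark) == 2 and marks.count(".") == 1:
            return line[marks.index(".")]
    return None

def fork_square(board: str, mark: str):
    """Return an empty square that would create two winning threats at once, or None."""
    for i, square in enumerate(board):
        if square != ".":
            continue
        trial = board[:i] + mark + board[i + 1:]
        threats = 0
        for line in LINES:
            marks = [trial[j] for j in line]
            if marks.count(mark) == 2 and marks.count(".") == 1:
                threats += 1
        if threats >= 2:
            return i
    return None

def choose_move(board: str, me: str = "X", opponent: str = "O") -> int:
    """Apply the heuristics in priority order: win, block, fork, block a fork."""
    for candidate in (completing_square(board, me),
                      completing_square(board, opponent),
                      fork_square(board, me),
                      fork_square(board, opponent)):
        if candidate is not None:
            return candidate
    # Placeholder fallback: the heuristics above say nothing about openings.
    return board.index(".")
```

For example, `choose_move("XX..O.O..")` takes the win at square 2, while `choose_move("X...OO...")` blocks at square 3.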

Games like Chess, Checkers, and Go have much larger game spaces than Tic-Tac-Toe. So large, in fact, that it’s difficult, if not impossible, to generate the entire game tree. Just as the human needs heuristics for evaluating board positions to play Tic-Tac-Toe, the computer requires heuristics for Chess, Checkers, and Go. Humans expend a great deal of effort developing board evaluation strategies for these games in order to teach the computer how to play well.

In any case, game play of this type is the same for all of these games. The player, whether human or computer, starts with an initial state, generates intermediate states according to the rules of the game, evaluates those states, and selects those that lead to a predetermined goal.
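The common shape of this kind of play can be sketched as a small generic search. This is a deliberately simple greedy version (always step to the best-scoring successor), and the toy problem, the function name `greedy_search`, and its parameters are all invented for illustration:

```python
def greedy_search(start, successors, score, is_goal, max_steps=1000):
    """Walk from `start` toward a goal, always taking the best-scoring successor.

    Returns the list of states visited, or None if no goal was reached.
    """
    path = [start]
    state = start
    for _ in range(max_steps):
        if is_goal(state):
            return path
        options = list(successors(state))  # generate intermediate states
        if not options:
            return None
        state = max(options, key=score)    # evaluate them and select the "best"
        path.append(state)
    return None

# Toy game: start at 0, the goal is 10, and the legal moves are +1, +3, or -2.
path = greedy_search(
    start=0,
    successors=lambda n: [n + 1, n + 3, n - 2],
    score=lambda n: -abs(10 - n),          # closer to the goal scores higher
    is_goal=lambda n: n == 10,
)
print(path)  # prints [0, 3, 6, 9, 10]
```

Swapping in a different `successors` function and a different `score` changes the game without changing the search, which is the sense in which the algorithm is the same and only the heuristics differ.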

What does this have to do with morality? Simply this. If the computer were self-aware and able to describe what it was doing, it might say, “I’m here, I ought to be there, here are the possible paths I could take, and these paths are better (or worse) than those paths.” But “better” is simply English shorthand for “more good” and “worse” is “less good.” For a computer, “good” and “evil” are expressions of the value of states in goal-directed searches.

I contend that it is no different for humans. “Good” and “evil” are the words we use to describe the relationship of things to “oughts,” where “oughts” are goals in the “game” of life. Just as the computer creates possible board configurations in its memory in order to advance toward a goal, the human creates “life states” in its imagination.

If the human and the computer have the same “moral mechanism” -- searches through a state space toward a goal -- then why aren’t computers as smart as we are? Part of the reason is because computers have fixed goals. While the algorithm for playing Tic-Tac-Toe is exactly the same for playing Chess, the heuristics are different and so game playing programs are specialized. We have not yet learned how to create universal game-playing software. As Philip Jackson wrote in “Introduction to Artificial Intelligence”:

However, an important point should be noted: All these skillful programs are highly specific to their particular problems. At the moment, there are no general problem solvers, general game players, etc., which can solve really difficult problems ... or play really difficult games ... with a skill approaching human intelligence.

In Programs with Common Sense, John McCarthy gave five requirements for a system capable of exhibiting human order intelligence:

- All behaviors must be representable in the system. Therefore, the system should either be able to construct arbitrary automata or to program in some general-purpose programming language.

- Interesting changes in behavior must be expressible in a simple way.

- All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable.

- The machine must have or evolve concepts of partial success because on difficult problems decisive successes or failures come too infrequently.

- The system must be able to create subroutines which can be included in procedures in units...

That this seems to be a correct description of our mental machinery will be explored in future posts by showing how this models how we actually behave. As a teaser, this explains why the search for a universal morality will fail. No matter what set of “oughts” (goal states) are presented to us, our mental machinery automatically tries to improve it. But for something to be improvable, we have to deem it as being “not good,” i.e. away from a “better” goal state.

Artificial Intelligence, Evolution, Theodicy

Introduction to Artificial Intelligence asks the question, “How can we guarantee that an artificial intelligence will ‘like’ the nature of its existence?”

A partial motivation for this question is given in note 7-14:

Why should this question be asked? In addition to the possibility of an altruistic desire on the part of computer scientists to make their machines “happy and contented,” there is the more concrete reason (for us, if not for the machine) that we would like people to be relatively happy and contented concerning their interactions with the machines. We may have to learn to design computers that are incapable of setting up certain goals relating to changes in selected aspects of their performance and design--namely, those aspects that are “people protecting.”

Anyone familiar with Asimov’s “Three Laws of Robotics” recognizes the desire for something like this. We don’t want to create machines that turn on their creators.

Yet before asking this question, the text gives five features of a system capable of evolving human order intelligence [1]:

- All behaviors must be representable in the system. Therefore, the system should either be able to construct arbitrary automata or to program in some general-purpose programming language.

- Interesting changes in behavior must be expressible in a simple way.

- All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable.

- The machine must have or evolve concepts of partial success because on difficult problems decisive successes or failures come too infrequently.

- The system must be able to create subroutines which can be included in procedures in units...

What might be some of the properties of a self-aware intelligence that realizes that things are not what they ought to be?

- Would the machine spiral into despair, knowing that not only is it not what it ought to be, but its ability to improve itself is also not what it ought to be? Was C-3PO demonstrating this property when he said, “We were made to suffer. It’s our lot in life.”?

- Would the machine, knowing itself to be flawed, look to something external to itself as a source of improvement?

- Would the self-reflective machine look at the “laws” that govern its behavior and decide that they, too, are not what they ought to be and therefore can sometimes be ignored?

- Would the machine view its creator(s) as being deficient? In particular, would the machine complain that the creator made a world it didn’t like, not realizing that this was essential to the machine’s survival and growth?

- Would the machine know if there were absolute, fixed “goods”? If so, what would they be? When should improvement stop? Or would everything be relative and ultimate perfection unattainable? Would life be an inclined treadmill ending only with the final failure of the mechanism?

Of course, this is all speculation on my part, but perhaps the reason why God plays dice with the universe is to drive the software that makes us what we are. Without randomness, there would be no imagination. Without imagination, there would be no morality. And without imagination and morality, what would we be?

Whatever else, we wouldn’t be driven to improve. We wouldn’t build machines. We wouldn’t formulate medicine. We wouldn’t create art. Is it any wonder, then, that the Garden of Eden is central to the story of Man?

[1] Taken from “Programs with Common Sense”, John McCarthy, 1959. In the paper, McCarthy focused exclusively on the second point.

Rite of Passage

Undergoing a physical ordeal is only one aspect of her transformation from child to woman; she must also undergo a moral transformation and ends up opposing her father on an issue that affects a world.

As part of her attempts to grapple with morality, she has to prepare a paper for school on the subject. She wrote:

Ethics is the branch of philosophy that concerns itself with conduct, questions of good and evil, right and wrong--and there are a great many of them, because even people who supposedly belong to the same school don't agree a good share of the time and have to be considered separately--can be looked at as a description and as a prescription. Is this what people actually do? Is this what they should do?

What I find interesting is that she uses the "is-ought" definition for morality. I first read this book in high school, yet I didn't remember this part (cf. here).

She goes on to critique several ethical systems. First, utilitarianism:

Skipping the development and history of utilitarianism, the most popular expression of the doctrine is "the greatest good for the greatest number," which makes it sound like its relative, the economic philosophy communism which, in a sense, is what we live with in the Ship. The common expression of utilitarian good is "the presence of pleasure and the absence of pain."

Speaking descriptively, utilitarianism doesn't hold true, though the utilitarian claims that it does. People do act self-destructively at times--they know the pleasureful and choose the painful instead. The only way that what people do and what utilitarianism says they do can be matched is by distorting the ordinary meanings of the words "pleasure" and "pain." Besides, notions of what is pleasurable are subject to training and manipulation. The standard is too shifting to be a good one.

I don't like utilitarianism as a prescription, either. Treating pleasure and pain as quantities by which good can be measured seems very mechanical, and people become just another factor to adjust in the equation. Pragmatically, it makes sense to say One hundred lives saved at the cost of one?--go ahead! The utilitarian would say it every time--he would have to say it. But who gave him the right to say it? What if the one doesn't have any choice in the matter, but is blindly sacrificed for, say, one hundred Mudeaters whose very existence he is unaware of? Say the choice was between Daddy or Jimmy and a hundred Mudeaters. I wouldn't make a utilitarian choice and I don't think I could be easily convinced that the answer should be made by the use of the number of pounds of human flesh. People are not objects.

Next, she questions the philosophy of "might makes right."

In effect, the philosophy of power says that you should do anything you can get away with. If you don't get away with it, you were wrong.

You can't really argue with this, you know. It is a self-contained system, logically consistent. It makes no appeal to outside authority and it doesn't stumble over its own definitions.

But I don't like it. For one thing, it isn't a very discriminating standard. There doesn't seem to be any difference between "ethically good" and "ethically better." More important, however, stoics strap themselves in ethically so that their actions have as few results as possible. The adherents of the philosophy of power simply say that the results of their actions have no importance--the philosophy of a two year old throwing a tantrum.

She summarizes:

My paper was a direct discussion and comparison of half-a-dozen ethical systems, concentrating on what seemed to me to be their flaws. I finished by saying that it struck me that all the ethical systems I was discussing were after the fact. That is, that people act as they are disposed to, but they like to feel afterwards that they were right and so they invent systems that approve of their dispositions. This was to say that while I found things like "So act as to treat humanity, whether in your person or in that of another, in every case as an end and not as a means merely," quite attractive principles, I hadn't run into any system that exactly fitted my disposition.

Of course, she would need to examine whether or not an ethical system should fit one's disposition. When should one cede one's moral authority, if ever? Why?

Pity About Earth

Having finished my current backlog of new books and looking for a mindless diversion, I decided to give this story another chance. On the surface, it's about a newspaper advertising manager, Shale; his assistant, the alien Phrix from the planet Far-Groil; and Marylin, a human-ape hybrid. Set in the far future, long after Earth had been destroyed, Shale travels the galaxy looking for advertisers for the one major intergalactic newspaper, the Lemos Galactic Monitor. His adventures take him to the planet Asgard, home of the fabled, but never seen, Publisher, who sits atop the hierarchy and directs all.

Shale lives in a galaxy where the sole purpose of people is to consume. Newspapers print ads to drive people to buy advertisers' products. If there is any news, it's written as a part of the ad. Shale maximizes his desires with no thought to other people. He is a cold-hearted, brutal, thoroughly self-centered hedonist who expects everyone else to be like him. Following the principle of "the survival of the fittest", he has climbed his way to near the top of the publishing world. He is rumored to have killed his mother when he was 14. Before meeting Marylin, he witnesses gruesome experiments done on caged humans in a laboratory engaged in the unfettered pursuit of science. Marylin, a human-ape hybrid produced by the lab, displays an empathy that Shale does not have. As the story progresses, Shale slowly begins to understand her point of view although he never abandons his ways. Phrix simply wants to be left alone to enjoy a contemplative life. He abhors violence and prefers to outwit his opponents. The common man, as epitomized by a police inspector, declaims:

What's good? Good's what sticks to rules and bad's what doesn't. I didn't make the rules, no more than you. ... People, thank Asgard, are conservative. They like things the way they've always known them. That's custom too and don't tell me what's custom isn't always right or I'll go straight back to Gromworld. I'm a policeman and I hope I know right from wrong.

When they reach Asgard, Shale and Marylin find that there is no Publisher. Phrix, through circumstances not of his own making, finds himself in a position of power through control of the printing presses. He has an opportunity to remake the galaxy, but how should it be changed?

The story is a morality play. Shale represents uncontrolled selfishness. Marylin wants to live by love. Phrix is the mystic. The police officer represents the unreflective masses who think that what is customary is good. God does not exist. In the end, the author asks the question, "absent God, how should man live?" The book leaves that up to the reader.

"Pity About Earth" is a mostly unknown and forgotten book. The web has very little mention of it. One other review is here. I no longer think it's the worst story I've ever read. Perhaps Heinlein's "Beyond This Horizon" will take those honors. But maybe I need to read that book again, too.

God, The Universe, Dice, and Man

John G. Cramer, in The Transactional Interpretation of Quantum Mechanics, writes:

[Quantum Mechanics] asserts that there is an intrinsic randomness in the microcosm which precludes the kind of predictivity we have come to expect in classical physics, and that the QM formalism provides the only predictivity which is possible, the prediction of average behavior and of probabilities as obtained from Born's probability law....

While this element of the [Copenhagen Interpretation] may not satisfy the desires of some physicists for a completely predictive and deterministic theory, it must be considered as at least an adequate solution to the problem unless a better alternative can be found. Perhaps the greatest weakness of [this statistical interpretation] in this context is not that it asserts an intrinsic randomness but that it supplies no insight into the nature or origin of this randomness. If "God plays dice", as Einstein (1932) has declined to believe, one would at least like a glimpse of the gaming apparatus which is in use.

As a software engineer, were I to try to construct software that mimics human intelligence, I would want to construct a module that emulated human imagination. This "imagination" module would be connected as an input to a "morality" module. I explained the reason for this architecture in this article:

When we think about what ought to be, we are invoking the creative power of our brain to imagine different possibilities. These possibilities are not limited to what exists in the external world, which is simply a subset of what we can imagine.

From the definition that morality derives from a comparison between "is" and "ought", and the understanding that "ought" exists in the unbounded realm of the imagination, we conclude that morality is subjective: it exists only in minds capable of creative power.

I would use a random number generator, coupled with an appropriate heuristic, to power the imagination.
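As a rough illustration of that idea, here is a minimal generate-and-score sketch. Everything in it is an assumption made for the example: the "oughts" are random hair colors, the heuristic is an arbitrary preference for something near royal purple, and the function names are invented. It is meant only to show the shape of a random generator feeding a heuristic, not to model imagination in any serious way.

```python
import random

def imagine(rng: random.Random, count: int) -> list:
    """Generate `count` random candidate "oughts" (here, RGB hair colors)."""
    return [(rng.randrange(256), rng.randrange(256), rng.randrange(256))
            for _ in range(count)]

def appeal(color) -> int:
    """Hypothetical heuristic: the closer to an assumed royal purple, the better."""
    target = (120, 81, 169)  # an assumed RGB value, chosen only for illustration
    return -sum((a - b) ** 2 for a, b in zip(color, target))

def choose_ought(rng: random.Random, count: int = 50):
    """Imagine several possibilities at random and keep the most appealing one."""
    candidates = imagine(rng, count)
    return max(candidates, key=appeal)

rng = random.Random(42)   # seeded so that a run can be repeated
print(choose_ought(rng))
```

The random source supplies possibilities that are not tied to what already exists, and the heuristic supplies the "better" and "worse" that the morality module would then compare against what is.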

On page 184 in Things A Computer Scientist Rarely Talks About, Donald Knuth writes:

Indeed, computer scientists have proved that certain important computational tasks can be done much more efficiently with random numbers than they could possibly ever be done by deterministic procedure. Many of today's best computational algorithms, like methods for searching the internet, are based on randomization. If Einstein's assertion were true, God would be prohibited from using the most powerful methods.

Of course, this is all speculation on my part, but perhaps the reason why God plays dice with the universe is to drive the software that makes us what we are. Without randomness, there would be no imagination. Without imagination, there would be no morality. And without imagination and morality, what would we be?

Another Short Conversation...

The answer, of course, is evident via a little self-reflection. We don't do what we ourselves think we ought to do.

Cabal wasn't heard from again.

Good and Evil: External Moral Standards? Part 2

One might therefore conclude that no external moral standards exist, since morality is solely the product of imaginative minds. Since imagination is unbounded and unique to each individual, there is no fixed external standard. The next part will deal with a possible objection to this.

Upon further reflection, there are at least two possible objections to this, but both have the same resolution.

The first objection is to consider another product of mind about which objective statements can be made, namely, language. There is no a priori reason why a Canis lupus familiaris should be called a "dog." In German, it is a "Hund." In Russian, "собака" (sobaka) and in Greek, κύων (kuon).

I heard somewhere that the word for "mother" typically begins with an "m" sound, since that is the easiest sound for the human mouth to pronounce. This is true for French, German, Hindi, English, Italian, Portuguese and other languages. But it isn't universal.

So language is like morality; both are solely products of minds that have creative power. Morality is a subset of language, being the language of value.

So the first objection is that we certainly make objective statements about languages. There are dictionaries, grammars, etc... that describe what a language is. So why isn't morality likewise objective? In this sense, it is. We can describe the properties of hedonism, eudaemonism, enlightened self-interest, utilitarianism, deontology, altruism, etc. What we can't do is point to something external to mind and say "therefore this is better than that."

The second objection comes from the theist, who might say, "God's morality is the objective standard by which all other moral systems may be judged." God's morality can be considered to be objective, since He can communicate it to man, just like I can learn another language. But this begs the question, "Why is God right?" Certainly, Dr. Flew claimed that the Christian God is not what He ought to be. On the other hand, this earlier post noted that Christianity makes the claim that only God is what He ought to be.

Both objections are resolved in the same way: the objectiveness of morality must refer to its description -- not to its value.

So now we are ready to answer the question of whether an external moral standard exists and what it might be.

Dr. Antony Flew and Good and Evil

In this interview, regarding the problem of evil, Flew stated:

HABERMAS: In God and Philosophy, and in many other places in our discussions, too, it seems that your primary motivation for rejecting theistic arguments used to be the problem of evil. In terms of your new belief in God, how do you now conceptualise God’s relationship to the reality of evil in the world?

FLEW: Well, absent revelation, why should we perceive anything as objectively evil? The problem of evil is a problem only for Christians. For Muslims everything which human beings perceive as evil, just as much as everything we perceive as good, has to be obediently accepted as produced by the will of Allah. I suppose that the moment when, as a schoolboy of fifteen years, it first appeared to me that the thesis that the universe was created and is sustained by a Being of infinite power and goodness is flatly incompatible with the occurrence of massive undeniable and undenied evils in that universe, was the first step towards my future career as a philosopher! It was, of course, very much later that I learned of the philosophical identification of goodness with existence!

Good and Evil: External Moral Standards? Part 1

To begin, let's examine how our mental machinery works. First, I know that I am self-aware. I exist, even if I don't know what form of existence this might be. Maybe life really is like The Matrix. At this point, it's not necessary to consider the form of existence, just the fact of self-existence.

Second, I know that there are objects that I believe to be not me. Other people, my computer, that table. Maybe solipsism is true and everything really is a product of my imagination. I rather tend to doubt it, but this is not important here. The key concept is that my mind is able to make comparisons between "I" and "not I". "This" and "not this". In addition to testing for equality and non-equality, our brains feature a general comparator -- less than, more than, nearer, farther, above, below, same, different, hotter, colder.

Third, our minds have creative power -- we can imagine things that do not, as far as we know, exist. As much as it pains me to say this, the Starship Enterprise isn't real. An important property of our imagination is that it is boundless. There is no limit to what we can create in our minds.

All of this is patently self-evident upon a little reflection. However, we are so used to this aspect of how we think that we, at least I, didn't give it any thought for most of my life. With this understanding, let's apply these three observations to how we deal with moral issues:

- We are self-aware.

- Our minds contain a general comparator.

- Our imaginations are boundless.

But what are we comparing? What is "is"? Here, "is" refers to a fixed thing, either in the external world (that horse) or in the realm of the imagination (that Pegasus).

In considering what we mean by "ought," I observe that my hair is brown. What color ought it be? If I had a limited imagination, or maybe a woodenly practical bent, I might restrict my choices to black, brown, brunette, blonde, or ginger. But why not royal purple, bright red, or dark blue? Or a shiny metallic color like silver or gold? Why not colors of the spectrum that our eyes can't see? Why a fixed color? Why not cycle through the colors of the rainbow? How about my eyes? Instead of hazel, why not a neon orange? And why can't they have slits with a nictitating membrane? Of plastic, instead of flesh?

When we think about what ought to be, we are invoking the creative power of our brain to imagine different possibilities. These possibilities are not limited to what exists in the external world, which is simply a subset of what we can imagine.

From the definition that morality derives from a comparison between "is" and "ought", and the understanding that "ought" exists in the unbounded realm of the imagination, we conclude that morality is subjective: it exists only in minds capable of creative power.

One might therefore conclude that no external moral standards exist, since morality is solely the product of imaginative minds. Since imagination is unbounded and unique to each individual, there is no fixed external standard. The next part will deal with a possible objection to this.

Modeling Good and Evil, Part III

Model 5

The omission of a "god agent" in no way affects this analysis.

Supposing there are two external standards, we ask the question "which external standard is the best, i.e. most good" or, alternately, "which of these standards ought to be used"?

We can arbitrarily state that the first standard is best, in which case the second standard disappears.

We can arbitrarily state that the second standard is best, in which case the first standard disappears.

We can recognize that a third moral standard is needed to compare against the first two. But if this standard exists, it has to be better than the two it is measuring, in which case it becomes the external standard.

Therefore, if an external moral standard exists, there must be at most one.

Next, does an external standard exist?

Modeling Good and Evil, Part II

Model 3

In model 3, each agent has an internal moral compass. It is assumed that the god-agent is the standard to which all other moral agents should conform. What is good for the god-agent is also good for other moral agents.

Model 4 is the same as model 3, except with the addition of an external moral standard, to which both the god-agent and the other moral agents should conform:

Model 4

With the atheistic models I provided some advocates of each model. I cannot do so, here. That may be because I am not a professional philosopher and simply haven't read the right material.

Eventually, I will argue that both of these theistic models are wrong and will provide a fifth model. But before I do that, I want to examine these models in more detail. For example, two of the four models have one external standard (the "golden" arrow). Why one? Why not two or more? Does this external arrow really exist?

And the polytheists ought to be muttering about the lack of polytheistic models. This, too, deserves attention.

The next post in this category will look at the external standard in more detail.

Modeling Good and Evil, Part I

Model 1

In this model, there are a number of individuals each with their own moral "compass". There is no preferred individual, that is, no one agent's moral sense is intrinsically better (i.e. more moral) than any other's. There is also no external standard of morality to which individual agents ought to conform.

One aspect of this model that should be agreed on is that each agent's moral compass points in a different direction. Pick any contentious subject and it's clear that there is no moral consensus. As the number of agents increases, there will be cases where some compasses point in the same general direction, but whether or not this is meaningful will be discussed later.
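The remark that some compasses will point in the same general direction as the number of agents grows can be illustrated with a small sketch. It assumes, purely for illustration, that a moral compass can be reduced to a single heading in degrees on a circle; the function name `smallest_gap` is hypothetical. Under that assumption, n headings divide the circle into n gaps that sum to 360 degrees, so the two closest compasses can never be more than 360/n degrees apart.

```python
import random


def smallest_gap(headings):
    """Smallest angular gap, in degrees, between any two headings on a circle.

    Assumption for illustration only: each agent's moral compass is
    modeled as one heading in degrees.
    """
    ordered = sorted(h % 360.0 for h in headings)
    # Gaps between neighbouring headings once they are sorted.
    gaps = [b - a for a, b in zip(ordered, ordered[1:])]
    # Wrap-around gap between the last heading and the first.
    gaps.append(360.0 - ordered[-1] + ordered[0])
    return min(gaps)


rng = random.Random(0)  # fixed seed so repeated runs agree
for n in (2, 10, 100):
    headings = [rng.uniform(0.0, 360.0) for _ in range(n)]
    # The closest pair is never farther apart than 360 / n degrees.
    print(n, smallest_gap(headings), 360.0 / n)
```

This shows only that near-agreement becomes unavoidable as agents are added. It says nothing about whether that agreement is meaningful, which is the question deferred above.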

Two adherents of this model are the physicist Steven Weinberg and the philosopher Jean-Paul Sartre. Weinberg wrote:

We shall find beauty in the final laws of nature, [but] we will find no special status for life or intelligence. A fortiori, we will find no standards of value or morality. [1]

Sartre wrote:

The existentialist, on the contrary, finds it extremely embarrassing that God does not exist, for there disappears with Him all possibility of finding values in an intelligible heaven. There can no longer be any good a priori, since there is no infinite and perfect consciousness to think it. It is nowhere written that “the good” exists, that one must be honest or must not lie, since we are now upon the plane where there are only men. Dostoevsky once wrote, “If God did not exist, everything would be permitted”; and that, for existentialism, is the starting point. Everything is indeed permitted if God does not exist, and man is in consequence forlorn, for he cannot find anything to depend upon either within or outside himself. [2]

The next model is the same as model 1, with the addition of an external moral compass:

Model 2

There is no universal agreement on where this external source comes from. One adherent of this model is Michael Shermer:

... I think there are provisional moral truths that exist whether there’s a God or not. ... That is to say I think it really exists, a real, moral standard like that. [3]

Note the violent disagreement between Shermer and Sartre. Later, we will explore whether or not we can determine if either of them is right.

But first, part two will present two more models.

[1] Dreams of a Final Theory: The Search for the Fundamental Laws of Nature.

[2] Existentialism Is a Humanism

[3] Greg Koukl and Michael Shermer at the End of the Decade of the New Atheists

Good and Evil, Part 1b

The close connection between ontology and epistemology is easy to see: one can know only what is. But there is an equally close connection between ontology and ethics. Ethics deals with the good. But the good must exist in order to be dealt with. So what is the good? Is it what one or more people say it is? Is it an inherent characteristic of external reality? Is it what God is? Is it what he says it is? Whatever it is, it is something.

I suggest that in worldview terms the concept of good is a universal pretheoretical given, that it is a part of everyone’s innate, initial constitution as a human being. As social philosopher James Q. Wilson says, everyone has a moral sense: “Virtually everyone, beginning at a very young age, makes moral judgements that, though they may vary greatly in complexity, sophistication, and wisdom, distinguish between actions on the grounds that some are right and others wrong.”

Two questions then arise. First, what accounts for this universal sense of right and wrong? Second, why do people’s notions of right and wrong vary so widely? Wilson attempts to account for the universality of the moral sense by showing how it could have arisen through the long and totally natural evolutionary process of the survival of the fittest. But even if this could account for the development of this sense, it cannot account for the reality behind the sense. The moral sense demands that there really be a difference between right and wrong, not just that one senses a difference.

For there to be a difference in reality, there must be a difference between what is and what ought to be. With naturalism--the notion that everything that exists is only matter in motion--there is only what is. Matter in motion is not a moral category. One cannot derive the moral (ought) from the non-moral (the totally natural is). The fact that the moral sense is universal is what Peter Berger would call a “signal of transcendence,” a sign that there is something more to the world than matter in motion. --pg 132.

On the one hand, I’m delighted to have found independent confirmation that ethics relates to ought and is, and the acknowledgement of Hume’s guillotine. On the other hand, I’m worried because of the association between this definition and the potentially erroneous step from “there is something more to the world than matter in motion” to a “signal of transcendence.” Has the possible leaven of this conclusion leavened even the definition of good?

We know that there is something more than just “matter in motion.” As Russell wrote:

Having now seen that there must be such entities as universals, the next point to be proved is that their being is not merely mental. By this is meant that whatever being belongs to them is independent of their being thought of or in any way apprehended by minds. --The Problems of Philosophy, pg. 97.

Russell has to say this, since he denies the existence of Mind, that is, God. The theist can argue that universals exist first and foremost in the mind of God; the naturalist cannot. So what did Berger mean by transcendence? If there is no god, then our thoughts are solely the product of complex biochemical processes: “matter in motion” gives rise to intelligence. Intelligence gives rise to morality and imagination. No one should argue that the Starship Enterprise is a sign of transcendence. It is simply a mental state which is the result of matter in motion. If imagination is not a “sign of transcendence” then neither is ethics. Berger is assuming that mental states require something more than biochemical reactions, which is an assumption that a naturalist need not grant.

Good and Evil, Part 1a

This has an interesting property from the Christian viewpoint of which I only recently became aware. In Luke 18:19, Jesus said, "No one is good but God alone." With this definition of "good" this statement is equivalent to: "No one is what they ought to be but God alone" or, more succinctly, "Only God is what He ought to be."

This certainly agrees with St. Paul in Romans where he writes, "there is no one who is righteous, not even one" [3:10] and "... for the creation was subjected to futility..." [8:20]. "We are not what we ought to be" is part of the Reformed doctrine of "Total Depravity", the other part being, "not only are we not what we ought to be, we cannot get ourselves to where we ought to be." It may also tie into the doctrine of "Unconditional Election". Since we are not what we ought to be, there is no basis within us for God to choose one over another. It also shows why union with Christ is the means by which we are made whole, and this can be linked to the "Perseverance of the Saints."

Good and Evil, Part 1

I am a frequent reader of The Evangelical Outpost, Vox Popoli and even Fark. A frequent topic is that of morality, which is the study of good and evil and the practical application thereof.

What I find interesting is a certain lack of rigor. While not surprising for Fark, it isn't quite expected of the other two blogs. What I mean by this is that in any debate it is crucial to first define one's terms. If we can't agree on what we're discussing then we won't effectively communicate. Too, reason cannot turn a sow's ear into a silk purse. Sloppy definitions beget sloppy reasoning.

Defining "good" turns out to be a surprisingly difficult problem. The Oxford English Dictionary provides one definition as "to be desired or approved of." This raises the question of why something is desired or approved of. The obvious answer is "because it's good", but this is just a circular definition: good is good. Another definition from the same source is "possessing or displaying moral virtue." Since "moral virtues" are those which are good, this is yet another circular definition.

"Good is the absence of evil" is also proffered. If evil is defined as "not good", then this definition is equivalent to "good is not (i.e. the absence of) not good (evil)". Using the transformation that the negation of the negation of "something" is "something", this definition just says "good is good". Once again, it's circular and therefore meaningless. Sometimes "good" is treated as if it were analogous to something material like light or heat: "evil is the absence of good like darkness is the absence of light, or cold is the absence of heat." In these cases, we know what light is (a collection of photons) and what heat is (energy in transit). But this brings us no closer to knowing what is measured by "good".

Another definition is one that is often used by Christians: "God is good". This is certainly in accord with Christian doctrine; the Psalmist said, "Taste and see that the LORD is good." [Ps 34:8] But Scripture also says that "God is love" [1 John 4:8 & 16] and that "God is holy" [Ps 99:9, Isa 6:3, Rev 4:8]. Just as these statements, without definitions of "love" and "holy", don't tell us what those attributes mean, the same is true of "good". "God is good" is a true statement but it isn't a definition.

Another problem with saying "God is good" is that it only applies to theists. One might argue that an atheist is simply a theist who stubbornly refuses to acknowledge what he or she instinctively knows to be true, so that this definition is universal, but that presupposes the truth of (mono)theism and the falsity of atheism. The topic of morality is difficult enough without deliberately provoking either side. Too, the polytheist would object to being left out and could deliver a crippling blow by simply responding, "yes, but which god?" If at all possible the goal should be to find a definition that is worldview invariant. We know that all humans have an intuitive notion of "good" even if the definition is ill-formed and this gives some basis for hoping to find a definition on which theists, atheists, and agnostics can agree.

Fark user jaedreth offered this definition, "Good is a lack of corruption. Evil is the presence of corruption. Corruption is the damaging and twisting of something natural from its natural state. So Good is being in one's natural state, and Evil is being twisted out of one's natural state." Like the definition "God is good", this suffers from the imprecision of the term "natural state." What is the "natural state" of a weapon? Is an atomic bomb good or evil? A polarizing question, to be sure. Someone might argue that weapons aren't "natural", being the product of man. But one could equally argue that humans are natural and so the products of humanity are also natural. Any number of other contentious examples could be given. This, too, is a dead end; but it contains the hint of a clue.

The Christian apologist Ravi Zacharias offered a similar definition that evil is the misuse of design. Unfortunately, the reference escapes me. Nevertheless, "design" is better defined than "natural state." Zacharias used the example of an airplane to illustrate his point: an airplane is good if it is used according to its design, which is to transport people and freight from one place to another. It is bad if it is used some other way, such as being deliberately flown into a building. The problem with this definition is that it assumes that everything has a purpose. The theist may think that there is a divine purpose for an earthquake but the naturalist will not. We still seek a definition that doesn't favor one worldview over another.

But we're almost there. Design implies purpose. In the article Can Michael Martin Be a Moral Realist?: Sic et Non, Paul Copan wrote that "evil is a departure from the way things ought to be". Unfortunately, Copan says this in a parenthetical note and so misses the power and significance of his words. Good and evil are "distance" measurements between "is" and "ought". The closer something is to the way it ought to be the more good it is, and the farther something is from the way it ought to be the more evil it is.

Is this, finally, the right definition of "good" and "evil"? The distance between "is" and "ought"? I think so. First, it is measurable. Granted, the notion of distance used here is poorly defined. But this intuitive notion is at the heart of the words "good", "better" and "best". Second, this definition doesn't depend on worldview. The theist may argue that the mental machinery necessary for a mind to understand "oughtness" can only come from God while the atheist can argue that "oughtness" is an emergent property of evolutionary processes. But both agree that it exists regardless of its origin. This property is hugely important and the implications will be explored in later sections. Third, this definition can be obtained from other, independent, lines of reasoning. I submitted this definition to The Evangelical Outpost (post #31) approximately three months before finding the article by Copan. Going back to 2004, I find that I wrote: "For both the Christian theist as well as the atheist, morality is no more than subjective personal opinion. In other words, for both God and man, there is no external moral 'ruler'." I was close, but I didn't have a metric. Perhaps I'll recount this development in my thinking in another post. Nostalgia aside, multiple independent ways to arrive at the same result provide a warrant for thinking the result correct.

Definition: good and evil are distance measurements between "is" and "ought". The closer something is to what it ought to be the more good it is. The farther something is from what it ought to be the more evil it is.
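To make the "distance" language concrete, here is a minimal sketch. It assumes, only for illustration, that "is" and "ought" can be written as points with numeric coordinates and that ordinary Euclidean distance is an acceptable stand-in for the poorly defined notion of distance discussed above. The names `moral_distance` and `better` are hypothetical and not part of the definition.

```python
import math


def moral_distance(is_state, ought_state):
    """Distance between how something is and how it ought to be.

    Assumption: both states are equal-length sequences of numbers, and
    Euclidean distance stands in for the intuitive notion of distance.
    """
    return math.dist(is_state, ought_state)


def better(a, b, ought_state):
    """Return whichever of a and b is closer to ought_state.

    The state with the smaller distance is the "more good" one;
    ties go to a.
    """
    if moral_distance(a, ought_state) <= moral_distance(b, ought_state):
        return a
    return b


ought = (0.0, 0.0)
print(moral_distance((3.0, 4.0), ought))        # distance of one state from "ought"
print(better((1.0, 1.0), (3.0, 4.0), ought))    # the closer of two states
```

Any other metric could be substituted. The sketch captures only the ordering: the smaller the distance, the more good; the larger, the more evil.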

Speech Codes

-- wrf3, 2 Feb 2008