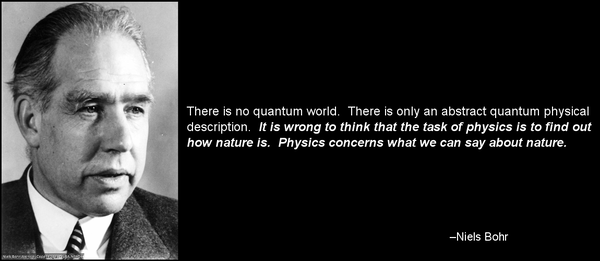

Bohr on Nature

What's interesting about this is that what Bohr thinks we can say about nature is also what we think we can say about consciousness. We recognize consciousness by behavior (cf. Searle's Chinese Room), but we cannot say whether consciousness is produced by that behavior or whether it is revealed by that behavior.

AGI

If AGI doesn't include insanity as well as intelligence then it may be artificial, but it won't be general.

In "Gödel, Escher, Bach" Hofstadter wrote:

It is an inherent property of intelligence that it can jump out of the task which it is performing, and survey what it has done; it is always looking for, and often finding, patterns.[1]

Over 400 pages later, he repeats this idea:

This drive to jump out of the system is a pervasive one, and lies behind all progress in art, music, and other human endeavors. It also lies behind such trivial undertakings as the making of radio and television commercials.[2]

For intelligence to be general, the ability to jump must be in all directions.

[1] Pg. 37

[2] Pg. 478

This idea is repeated in this post from almost 13 years ago. Have I stopped jumping outside the lines?

Quote

[1,2]

Whether or not √2 is irrational cannot be shown by measuring it.

Whether or not the Church-Turing hypothesis is true cannot be shown by thinking about it.

[1] "Euclidean and Non-Euclidean Geometries", Greenberg, Marvin J., Second Edition, pg. 7: "The point is that this irrationality of length could never have been discovered by physical measurements, which always include a small experimental margin of error."

[2] This quote is partially inspired by Scott Aaronson's "PHYS771 Lecture 9: Quantum" where he talks about the necessity of experiments.

Searle's Chinese Room: Another Nail

[updated 3/9/2024 - note on Searle's statement that "programs are not machines"]

I have discussed Searle's "Chinese Room Argument" twice before: here and here. It isn't necessary to review them. While both of them argue against Searle's conclusion, they aren't as complete as I think they could be. This is one more attempt to put another nail in the coffin, but the appeal of Searle's argument is so strong - even though it is manifestly wrong - that it may refuse to stay buried. The Addendum explains why.

Searle's paper, "Minds, Brains, and Programs", is here. He argues that computers will never be able to understand language the way humans do for these reasons:

1. Computers manipulate symbols.
2. Symbol manipulation is insufficient for understanding the meaning behind the symbols being manipulated.
3. Humans cannot communicate semantics via programming.
4. Therefore, computers cannot understand symbols the way humans understand symbols.

#1 is certainly true. But Searle's argument ultimately fails because he only considers a subset of the kinds of symbol manipulation a computer (and a human brain) can do.

#2 is partially true. This idea is also expressed as "syntax is insufficient for semantics." I remember, from over 50 years ago, when I started taking German in 10th grade. We quickly learned to say "good morning, how are you?" and to respond with "I'm fine. And you?" One morning, our teacher was standing outside the classroom door and asked each student, as they entered, the German equivalent of "Good morning," followed by the student's name, "how are you?" Instead of answering from the dialog we had learned, I decided to ad lib, "Ich bin heiss." My teacher turned bright red from the neck up. Bless her heart, she took me aside and said, "No, Wilhelm. What you should have said was, 'Es ist mir heiss'. To me it is hot. What you said was that you are experiencing increased libido." I had used a simple symbol substitution, "Ich" for "I", "bin" for "am", and "heiss" for "hot", temperature-wise. But, clearly, I didn't understand what I was saying. Right syntax, wrong semantics. Nevertheless, I do now understand the difference. What Searle fails to establish is how meaningless symbols acquire meaning. So he handicaps the computer. The human has meaning and substitution rules; Searle only allows the computer substitution rules.

#3 is completely false.

To understand why 2 is only partially true, we have to understand why 3 is false.

- A Turing-complete machine can simulate any other Turing machine. Two machines are Turing equivalent if each machine can simulate the other.

- The lambda calculus is Turing complete.

- A machine composed of NAND gates (a "computer" in the everyday sense) can be Turing complete.

- A NAND gate (along with a NOR gate) is a "universal" logic gate.

- Memory can also be constructed from NAND gates.

- The equivalence of a NAND-based machine and the lambda calculus is demonstrated by instantiating the lambda calculus on a computer.[1]

- From 3, every computer program can be written as expressions in the lambda calculus; every computer program can be expressed as an arrangement of logic gates. We could, if we so desired, build a custom physical device for every computer program. But it is massively economically unfeasible to do so.

- Because every computer program has an equivalent arrangement of NAND gates[2], a Turing-complete machine can simulate that program.

- NAND gates are building-blocks of behavior. So the syntax of every computer program represents behavior.

- Having established that computer programs communicate behavior, we can easily see why Searle's #2 is only partially true. Symbol substitution is one form of behavior. Semantics is another. Semantics is "this is that" behavior. This is the basic idea behind a dictionary. The brain associates visual, aural, temporal, and other sensory input and this is how we acquire meaning. Associating the visual input of a "dog", the sound "dog", the printed word "dog", and the feel of a dog's fur is how we learn what "dog" means. We have massive amounts of data that our brain associates to build meaning. We handicap our machines, first, by not typically giving them the ability to have the same experiences we do. We handicap them, second, by not giving them the vast range of associations that we have. Nevertheless, there are machines that demonstrate that they understand colors, shapes, locations, and words. When told to describe a scene, they can. When requested to "take the red block on the table and place it in the blue bowl on the floor", they can.

I was able to correct my behavior by establishing new associations: temperature and libido with German usage of "heiss". That associative behavior can be communicated to a machine. A machine, sensing a rise in temperature, could then inform an operator of its distress, "Es ist mir heiss!". Likely (at least, for now) lacking libido, it would not say, "Ich bin heiss."

Having shown that Searle's argument is based on a complete misunderstanding of computation, I wish to address selected statements in his paper.

Read More...

The Physical Ground of Logic

[updated 14 January 2024 to add note on semantics and syntax]

[updated 17 January 2024 to add note on universality of NAND and NOR gates]

[updated 26 March 2024 to add note about philosophical considerations of rotation]

The nature of logic is a contested philosophical question. One position is that logic exists independently of the physical realm; another is that it is a fundamental aspect of the physical realm; another is that it is a product of the physical realm. These correspond roughly to the positions of idealism, dualism, and materialism.

Here, the physical basis for logic is demonstrated. This doesn't disprove idealism, dualism, or materialism; but it does make it harder to find a bright line of demarcation between them and a way to make a final determination as to which might correspond to reality.

There are multiple logics. As "zeroth-order logic" (or "propositional logic") is the basis of all higher logics, we start here.

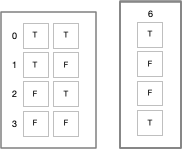

Begin with two arbitrary distinguishable objects. They can be anything: coins with heads and tails; bees and bears; letters in an alphabet; silicon and carbon atoms. Two unique objects, "lefty" and "righty", are chosen. The main reason is to use objects for which there is no common associated meaning. Meaning must not creep in "the back door", and using unfamiliar objects should help prevent that from inadvertently happening. A secondary engineering reason, with a strong philosophical connection, will be demonstrated later.

| Lefty | Righty |
|---|---|
| `\` | `\|` |

These two objects can be combined four ways as shown in the next table. You should be able to convince yourself that these are the only four ways for these combinations to occur. For convenience, each input row and column is labeled for future reference.

| | $1 | $2 |
|---|---|---|
| C0 | `\` | `\` |
| C1 | `\` | `\|` |
| C2 | `\|` | `\` |
| C3 | `\|` | `\|` |

Next, observe that there are sixteen ways to select an object from each of the four combinations. You should be able to convince yourself that these are the only ways for these selections to occur. For convenience, the selection columns are labelled for future reference. The selection columns correspond to the combination rows.

| S0 | S1 | S2 | S3 | S4 | S5 | S6 | S7 | S8 | S9 | S10 | S11 | S12 | S13 | S14 | S15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| C0 | | | | | | | | | | | | | | | | | \ | \ | \ | \ | \ | \ | \ | \ |

| C1 | | | | | | | | | \ | \ | \ | \ | | | | | | | | | \ | \ | \ | \ |

| C2 | | | | | \ | \ | | | | | \ | \ | | | | | \ | \ | | | | | \ | \ |

| C3 | | | \ | | | \ | | | \ | | | \ | | | \ | | | \ | | | \ | | | \ |

Now the task is to build physical devices that combine these two inputs and select an output according to each selection rule. Notice that in twelve of the selection rules it is possible to get an output that was not an input. On the one hand, this is like pulling a rabbit out of a hat. It looks like something is coming out that wasn't going in. On the other hand, it's possible to build a device with a "hidden reservoir" of objects so that the required object is produced. But this is the engineering reason why "lefty" and "righty" are the way they are. "lefty" can be turned into "righty" and "righty" can be turned into "lefty" by rotating the object and rotation is a physical operation on a physical object.
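The counts above (sixteen selection rules, twelve of which can emit an object that was not an input) can be checked by brute force. The following is a minimal sketch, not code from the original post; the names `LEFTY`, `RIGHTY`, `COMBINATIONS`, and `SELECTIONS` are illustrative, and the ordering of the rules is an assumption chosen so that S0 is all `|` and S15 is all `\`.

```python
from itertools import product

LEFTY, RIGHTY = "\\", "|"

# The four input combinations C0..C3, in the order used by the table.
COMBINATIONS = [(LEFTY, LEFTY), (LEFTY, RIGHTY), (RIGHTY, LEFTY), (RIGHTY, RIGHTY)]

# A selection rule picks one object for each combination: 2**4 = 16 rules.
# Assumed ordering: the last combination varies fastest, "|" comes before "\".
SELECTIONS = list(product([RIGHTY, LEFTY], repeat=4))

def produces_new_object(rule):
    """True if some combination yields an output that was not among its inputs."""
    return any(out not in inputs for inputs, out in zip(COMBINATIONS, rule))

for index, rule in enumerate(SELECTIONS):
    print(f"S{index:<2}", " ".join(rule))

new_object_rules = sum(produces_new_object(rule) for rule in SELECTIONS)
print(new_object_rules, "rules can emit an object that was not an input")
```

Only the four rules that return `\` for C0 and `|` for C3 never need rotation or a hidden reservoir.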

This engineering decision has interesting implications. It ensures that logic is self-contained: what goes in is what comes out. If it doesn't go in, it doesn't come out. The logic gate "not" turns truth into falsity and falsity into truth by a simple rotation. Philosophically, this means that truth and falsity are stance dependent; truth depends on how you look at it. Moving an observer is equivalent to rotating the symbol.

Suppose we can build a device which has the behavior of S7:

We know how to build devices which have the behavior of S7, which is known as a "NAND" (Not AND)[1] gate. There are numerous ways to build these devices: with semiconductors that use electricity, with waveguides that use fluids such as air and water, with materials that can flex, neurons in the brain, even marbles.[2] NAND gates and NOR gates (S1) are known as universal gates, since arrangements of each gate can produce all of the other selection operations. The demonstration with NAND gates is here. The correspondence between digital logic circuits and propositional logic has been known for a long time.

John Harrison writes[3]:

| Digital design | Propositional logic |
|---|---|
| logic gate | propositional connective |
| circuit | formula |
| input wire | atom |
| internal wire | subexpression |
| voltage level | truth value |
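The universality of NAND and NOR can be checked mechanically. This is a minimal sketch under stated assumptions: the two objects are written as 0 and 1 purely for convenience, and `closure` is a hypothetical helper that repeatedly wires gate outputs into gate inputs until no new two-input truth table appears.

```python
from itertools import product

INPUTS = list(product([0, 1], repeat=2))   # the four rows (a, b) of a truth table

def nand(x, y):
    return 1 - (x & y)

def nor(x, y):
    return 1 - (x | y)

def closure(gate):
    """All two-input truth tables reachable by wiring copies of `gate` together."""
    first = tuple(a for a, _ in INPUTS)    # truth table of "pass input 1 through"
    second = tuple(b for _, b in INPUTS)   # truth table of "pass input 2 through"
    reached = {first, second}
    while True:
        new = {tuple(gate(x, y) for x, y in zip(f, g))
               for f in reached for g in reached}
        if new <= reached:                 # nothing new: the set is closed
            return reached
        reached |= new

print(len(closure(nand)), len(closure(nor)))
print(len(closure(lambda x, y: x & y)))    # AND alone, for contrast
```

NAND and NOR each reach all sixteen selection operations, while a non-universal gate such as AND gets stuck with a much smaller set.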

The interesting bit now is how to get "truth values" into, or out of, the system. Typically, we put "truth" into the system. We look at the pattern for S7, say, and note that if we arbitrarily declare that "lefty" is "true" and "righty" is "false", then we get the logical behavior that is familiar to us. Because digital computers work with low and high voltages, it is more common to arbitrarily make one of them 0 and the other 1 and then to, again arbitrarily, make the convention that one of them (typically 0) represents false and the other (either 1 or non-zero) true.

This way of looking at the system leads to the idea that truth is an emergent behavior in complex systems. Truth is found in the arrangement of the components, not in the components themselves.

But this isn't the only way of looking at the system.

Let us break down what the internal behavior of each selection process has to accomplish. This behavior will be grouped in order of increasing complexity. To do this, we have to introduce some notation.

- `~` is the "rotate" operation. `~\` is `|`; `~|` is `\`.
- `=` tests for equality, e.g. `$1 = $2` compares the first input value with the second input value.
- `? equal-path : unequal-path` takes action based on the result of the equal operator.
- `\ = \ ? | : \` results in `|`, since `\` is equal to `\`.
- `\ = | ? | : \` results in `\`, since `\` is not equal to `|`.

The first group ignores its inputs and always outputs the same object:

| Selection | Behavior |
|---|---|
| S0 | `\|` |
| S15 | `\` |

The second group outputs one of the inputs, perhaps with rotation:

| Selection | Behavior |
|---|---|
| S12 | `$1` |
| S3 | `~$1` |
| S10 | `$2` |
| S5 | `~$2` |

The third group compares the inputs for equality and produces an output for the equal path and another for the unequal path:

| Selection | Behavior |
|---|---|
| S8 | `$1 = $2 ? $1 : \|` |
| S14 | `$1 = $2 ? $1 : \` |
| S1 | `$1 = $2 ? ~$1 : \|` |
| S7 | `$1 = $2 ? ~$1 : \` |
| S4 | `$1 = $2 ? \| : $1` |
| S2 | `$1 = $2 ? \| : $2` |
| S13 | `$1 = $2 ? \ : $1` |
| S11 | `$1 = $2 ? \ : $2` |
| S6 | `$1 = $2 ? \| : \` |
| S9 | `$1 = $2 ? \ : \|` |

It's important to note that whatever the physical layer is doing, if the device performs according to these combination and selection operations then the internal layer is doing these logical operations.
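The three groups of behaviors can be checked against the selection table. The sketch below is illustrative rather than the original derivation: `rot` stands for `~`, `eq` stands for `$1 = $2 ? equal-path : unequal-path`, and `column` assumes the table ordering in which S0 is all `|` and S15 is all `\`.

```python
L, R = "\\", "|"                              # lefty, righty
COMBOS = [(L, L), (L, R), (R, L), (R, R)]     # C0..C3

def rot(x):
    """The "~" operation: turn one object into the other."""
    return R if x == L else L

def eq(a, b, same, different):
    """The "$1 = $2 ? same : different" operation."""
    return same if a == b else different

BEHAVIOR = {
    0:  lambda a, b: R,
    15: lambda a, b: L,
    12: lambda a, b: a,
    3:  lambda a, b: rot(a),
    10: lambda a, b: b,
    5:  lambda a, b: rot(b),
    8:  lambda a, b: eq(a, b, a, R),
    14: lambda a, b: eq(a, b, a, L),
    1:  lambda a, b: eq(a, b, rot(a), R),
    7:  lambda a, b: eq(a, b, rot(a), L),
    4:  lambda a, b: eq(a, b, R, a),
    2:  lambda a, b: eq(a, b, R, b),
    13: lambda a, b: eq(a, b, L, a),
    11: lambda a, b: eq(a, b, L, b),
    6:  lambda a, b: eq(a, b, R, L),
    9:  lambda a, b: eq(a, b, L, R),
}

def column(n):
    # Column Sn read top to bottom (C0..C3); bit value 1 is lefty, 0 is righty.
    return tuple(L if (n >> (3 - row)) & 1 else R for row in range(4))

mismatches = [n for n, behave in BEHAVIOR.items()
              if tuple(behave(a, b) for a, b in COMBOS) != column(n)]
print(mismatches)
```

An empty list means every behavior reproduces its column, using nothing beyond constants, rotation, and one equality test.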

The next step is to figure out how to do the equality operation. We might think to use S9 but this doesn't help. It still requires the arbitrary assignment of "true" to one of the input symbols. The insight comes if we consider the input symbol as an object that already has the desired behavior. Electric charge implements the equality behavior: equal charges repel; unequal charges attract. If the input objects repel then we take the "equal path" and output the required result; if they attract we take the "unequal path" and output that answer.

In this view, "truth" and "falsity" are the behaviors that recognize themselves and disregard "not themselves." And we find that nature provides this behavior of self-identification at a fundamental level. Charge - and its behavior - is not an emergent property in quantum mechanics.
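As a rough illustration of equality by repulsion, the sketch below models the two objects as unit charges and lets the sign of their product decide which path is taken. The assignment of the negative charge to `\` is an arbitrary assumption, and the functions `repels`, `rotate`, and `s7` are hypothetical names, not part of any physics library.

```python
POSITIVE, NEGATIVE = +1, -1     # two unit charges standing in for the two objects

def repels(q1, q2):
    """Like charges repel: the product of the two charges is positive."""
    return q1 * q2 > 0

def rotate(q):
    """The "~" operation: swap one charge for the other."""
    return -q

def s7(q1, q2):
    # S7 is "$1 = $2 ? ~$1 : \". The equality test is done by repulsion alone;
    # NEGATIVE plays the role of "\" here (an arbitrary choice).
    return rotate(q1) if repels(q1, q2) else NEGATIVE

for q1 in (NEGATIVE, POSITIVE):
    for q2 in (NEGATIVE, POSITIVE):
        print(q1, q2, "->", s7(q1, q2))
```

No symbol is ever declared "true" in this sketch; the device only asks whether its two inputs repel.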

What's interesting is that nature gives us two ways of looking at it and discovering two ways of explaining what we see without, apparently, giving us a clue as to which is the "right" way to look at it. Looking one way, truth is emergent. Looking another way, truth is fundamental. Philosophers might not like this view of truth (that truth is the behavior of self-recognition), but our brains can't develop any other notions of truth apart from this "computational truth."

Hiddenness

How the fact that the behavior of electric charge is symmetric, as well as the physical/logical layer distinction, plays into subjective first person experience is here.

Syntax and Semantics

In natural languages, it is understood that the syntax of a sentence is not sufficient to understand the semantics of the sentence. We can parse "'Twas brillig, and the slithy toves" but, without additional information, cannot discern its meaning. It is common to try to extend this principle to computation to assert that the syntax of computer languages cannot communicate meaning to a machine. But logic gates have syntax and behavior. The syntax of S7 can take many forms: S7, (NAND x y), NOT AND(a, b); even the diagram of S7, above. The behavior associated with the syntax is: $1 = $2 ? ~$1 : \. So the syntax of a computer language communicates behavior to the machine. As will be shown later, meaning is the behavior of association, this is that. So computer syntax communicates behavior, which can include meaning, to the machine.

More elaboration on how philosophy misunderstands computation is here.

Notes

An earlier derivation of this same result using the Lambda Calculus is here. But this demonstration, while inspired by the Lambda Calculus, doesn't require the heavier framework of the calculus.

[1] An "AND" gate has the behavior of S8.

[2] There is a theory in philosophy known as "multiple realizability" which argues that the ability to implement "mental properties" in multiple ways means that "mental properties" cannot be reduced to physical states. As this post demonstrates, this is clearly false.

[3] "A Survey of Automated Theorem Proving", pg. 27.

Dialog with an Atheist #3

I prefer the academic definition of atheist: Belief that there are no gods.

I do not identify as an atheist.

I am a militant agnostic. I don't know, and you don't either.

@theosib2 and I have had numerous discussions about computer science and religion. In particular, we have gone back and forth about how we can test for 3rd party consciousness. It is absolutely impossible to objectively determine if something is conscious. The Inner Mind shows the physical reason why this is true. However, @theosib2 maintains this is not the case, even though I have pressed him to state what a function with this behavior is doing:

ξξ→ζ ξζ→ξ ζξ→ξ ζζ→ξ

Objectively, it's a lookup table. Objectively, it has no meaning and no purpose. It's just a swirl of meaningless objects. Subjectively, it does have meaning and purpose, but we can't decide what it is. It could be a NAND gate or a NOR gate. Arrange these gates into a computer program and we might be able to determine what it is doing by its overall behavior. But we have to be able to map its behavior into behavior that we recognize within ourselves.
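The NAND-or-NOR ambiguity can be made concrete. In this minimal sketch the lookup table is the one given above, and `interpret` is a hypothetical helper that relabels the symbols with truth values under a chosen reading.

```python
TABLE = {("ξ", "ξ"): "ζ", ("ξ", "ζ"): "ξ", ("ζ", "ξ"): "ξ", ("ζ", "ζ"): "ξ"}

def interpret(table, meaning):
    """Relabel a symbol table with truth values under a chosen reading."""
    return {(meaning[a], meaning[b]): meaning[out] for (a, b), out in table.items()}

NAND = {(a, b): not (a and b) for a in (True, False) for b in (True, False)}
NOR = {(a, b): not (a or b) for a in (True, False) for b in (True, False)}

print(interpret(TABLE, {"ξ": True, "ζ": False}) == NAND)   # reading ξ as "true"
print(interpret(TABLE, {"ξ": False, "ζ": True}) == NOR)    # reading ξ as "false"
```

The table itself never changes; only the reading supplied from outside does.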

With this as background, I responded to @theosib2:

“I’m conscious!”

“I don’t think so. You’re not carbon based.”

“I passed your Turing test, multiple times, when you couldn’t assess my form. I’m conscious, dammit.”

“I don’t know that you are. And you don’t, either.”

@theosib2 responded identically to "Victorian dad":

If only we could have a rigorous definition of consciousness. Then your comparison might be valid.

The endgame then played out as before:

Are you conscious?

Sometimes I wonder (with link to They Might Be Giants "Am I Awake?")

But only sometimes, right?

The atheist has a problem either with knowing things that are subjectively known, or with admitting the validity of subjective knowledge. Because if subjective knowledge is validly known, then demands for objective evidence for God are groundless. Because we have objective knowledge that mind is only known subjectively.

Farpoint Engineering

- FASTPOINT-80 product sheet

- Microway advertisement (the Micro Channel boards under Math Coprocessors).

- From BYTE magazine, August, 1990.

- Fractal demo code (386 assembly)

I wrote a terminate-and-stay-resident program to install an "int 15h" interface between applications and the hardware, but that code is long lost.

Eric Schmidt

PERFCT (BASIC)

MLTPUN (FORTRAN)

SPAGE (FORTRAN)

[1] I really wish I still had FRESEQ, which I wrote in FORTRAN, that resequenced FORTRAN line and statement numbers like the built-in resequence command did for BASIC programs. Sometime around then I also wrote an INTEL 4004/4040/8008/8080 macro assembler in FORTRAN, but I don't have that, either.

The Inner Mind

[Similar introductory presentation here]

Philosophy of mind has the problem of "mental privacy" or "the problem of first-person perspective." It's a problem because philosophers can't account for how the first person view of consciousness might be possible. The answer has been known for the last 100 years or so and has a very simple physical explanation. We know how to build a system that has a private first person experience.

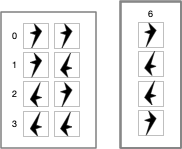

Let us use two distinguishable physical objects:

The shapes of these objects are arbitrary and were chosen so as not to represent something that might have meaning due to familiarity. While they have rotational symmetry, this is a convenience for something that is not related to the problem of mental privacy.

Next, let us build a physical device that takes two of these objects as input and selects one for output. Parentheses will be used to group the inputs and an arrow will terminate with the selected output. The device will operate according to these four rules:

A network of these devices can be built such that the output of one device can be used as input to another device.

It is physically impossible to tell whether this device is acting as a NAND gate or as a NOR gate. We are taught that a NAND gate maps (T T)→F, (T F)→T, (F T)→T, and (F F)→T; (1 1)→0, (1 0)→1, (0 1)→1, (0 0)→1 and that a NOR gate maps (T T)→F, (T F)→F, (F T)→F, (F F)→T; (1 1)→0, (1 0)→0, (0 1)→0, (0 0)→1. But these traditional mappings obscure the fact that the symbols are arbitrary. The only thing that matters is the behavior of the symbols in a device and the network constructed from the devices. Because you cannot tell via external inspection what the device is doing you cannot tell via external inspection what the network of devices is doing. All an external observer sees is the passage of arbitrary objects through a network.[1]
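The same ambiguity carries over from a single device to a whole network. The following sketch uses the hypothetical symbols "p" and "q" for the two objects and wires four copies of the device in the familiar four-gate XOR arrangement; which function the network computes depends entirely on the reading the observer brings to it.

```python
# Hypothetical symbols "p" and "q" stand in for the two physical objects.
DEVICE = {("p", "p"): "q", ("p", "q"): "p", ("q", "p"): "p", ("q", "q"): "p"}

def network(a, b):
    """Four copies of the device wired in the classic four-gate XOR arrangement."""
    n1 = DEVICE[(a, b)]
    n2 = DEVICE[(a, n1)]
    n3 = DEVICE[(b, n1)]
    return DEVICE[(n2, n3)]

def read_as(meaning):
    """Truth table of the network under one assignment of truth values to symbols."""
    symbol = {value: name for name, value in meaning.items()}
    return {(x, y): meaning[network(symbol[x], symbol[y])]
            for x in (False, True) for y in (False, True)}

as_nand_network = read_as({"p": True, "q": False})   # device read as NAND
as_nor_network = read_as({"p": False, "q": True})    # device read as NOR

print(all(out == (x != y) for (x, y), out in as_nand_network.items()))  # XOR
print(all(out == (x == y) for (x, y), out in as_nor_network.items()))   # XNOR
```

Read one way the network computes "different"; read the other way it computes "same". The objects passing through it are identical in both cases.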

To further complicate the situation, computation uses the symbol/value distinction: this symbol has that value. But "value" is just elements of the alphabet and so are identical to symbols when viewed externally. That means that to fully understand what a network is doing, you have to discern whether a symbol is being used as a symbol or as a value. But this requires inner knowledge of what the network is doing, which leads to infinite regress. You have to know what the network is doing on the inside to understand what the network is doing from the outside.

Or the network has to tell you what it's doing. NAND and NOR gates are universal logic gates which can be used to construct a "universal" computing device.[2]

So we can construct a device which can respond to the question "what logic gate was used in your construction?"

Depending on how it was constructed, its response might be:

- "I am made of NAND gates."

- "I am made of NOR gates."

- "I am made of NAND and NOR gates. My designer wasn't consistent throughout."

- "I don't know."

- "What's a logic gate?"

- "For the answer, deposit 500 bitcoin in my wallet."

Before the output device is connected to the system so that the answer can be communicated, there is no way for an external observer to know what the answer will be.

All an external observer has to go on is what the device communicates to that observer, whether by speech or some other behavior.[3]

And even when the output device is active and the observer receives the message, the observer cannot tell whether the answer given corresponds to how the system was actually constructed.

So extrospection cannot reveal what is happening "inside" the circuit. But note that introspection cannot reveal what is happening "outside" the circuit. The observer doesn't know what the circuit is doing; the subject doesn't know how it was built. It may think it does, but it has no way to tell.

[1] Technology has advanced to the point where brain signals can be interpreted by machine, e.g. here. But this is because these machines are trained by matching a person's speech to the corresponding brain signals. Without this training step, the device wouldn't work. Because our brains are similar, it's likely possible that a speaking person could train such a machine for a non-vocal person.

[2] I put universal in quotes because a universal Turing machine requires infinite memory and this would require an infinite number of gates. Our brains don't have an infinite number of neurons.

[3] See also Quus, Redux, since this has direct bearing on yet another problem that confounds philosophers, but not engineers.

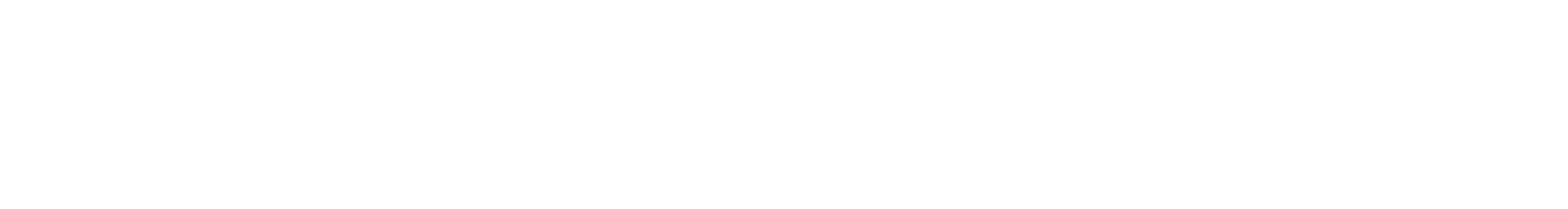

Venn Diagrams

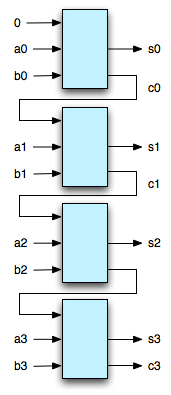

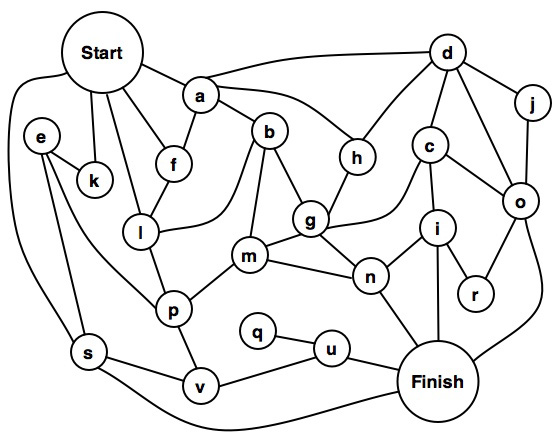

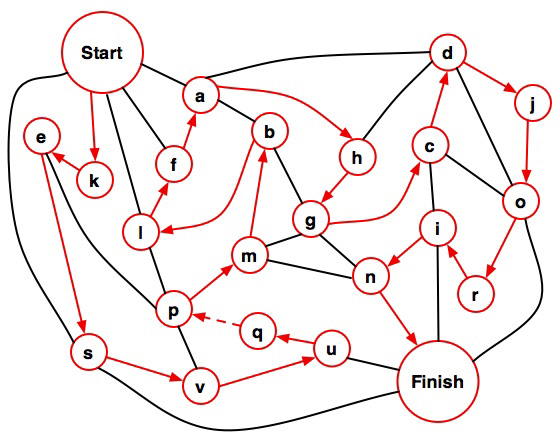

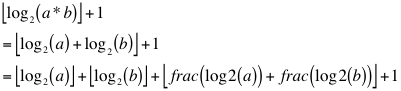

Twelve years ago I played around with designing digital circuits (1, 2, 3) and wrote some code to help in that endeavor. If you can evaluate logical expressions you ought to be able to visualize those expressions. So I extended this code to generate a Venn diagram of an expression. The sum and carry portions of an adder are striking. Click for larger images.

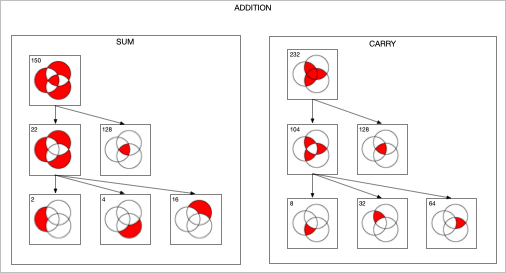
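For readers who want to see which regions of a three-circle diagram the adder shades, here is a minimal sketch. It is not the code described above; it assumes the standard full-adder equations and treats each of the eight input rows as one Venn region.

```python
from itertools import product

def full_adder(a, b, c):
    """Sum and carry bits of a one-bit full adder (c is the carry in)."""
    total = a ^ b ^ c
    carry = (a & b) | (a & c) | (b & c)
    return total, carry

# Each of the 8 input rows corresponds to one region of a three-set Venn diagram;
# (0, 0, 0) is the region outside all three circles.
REGIONS = list(product((0, 1), repeat=3))
sum_regions = [bits for bits in REGIONS if full_adder(*bits)[0]]
carry_regions = [bits for bits in REGIONS if full_adder(*bits)[1]]

print("sum  :", sum_regions)
print("carry:", carry_regions)
```

The sum shades the regions belonging to an odd number of circles, and the carry shades the regions belonging to at least two, which is where the symmetry of the pictures comes from.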

The Venn diagrams for the subtraction and borrow equations of a subtraction circuit are similar:

I then produced an animated GIF of all of the 256 Venn diagrams for an equation of 3 variables. The right side is the complement of the equation on the left. 128 images were created, then I used Flying Meat's Retrobatch to resize each image, add the image number, and convert to .GIF format. Then I used the open source gifsicle to produce the final animated .GIF.
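The count of 128 images follows from pairing each of the 256 functions with its complement. A small sketch, assuming each function of three variables is encoded as the 8-bit number formed by its truth table (an illustrative encoding, not necessarily the one used to make the GIF):

```python
functions = range(256)      # one 8-bit truth table per 3-variable function

def complement(f):
    """Flip all eight truth-table bits."""
    return f ^ 0xFF

# Group every function with its complement; each group becomes one image.
pairs = {frozenset((f, complement(f))) for f in functions}
print(len(pairs))
```

No function is its own complement, so the 256 diagrams fall into exactly 128 left/right pairs.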

Solid State Jabberwocky

'Twas Burroughs, and the ILLIACS

Did JOSS and SYSGEN in the stack;

All ANSI were the acronyms,

and the Eckert-Mauchly ENIAC.

"Beware the deadly OS, son!

The Megabyte, the JCL!

Beware the Gigabit, and shun

The ponderous CODASYL!"

He took his KSR in hand:

Long time the Armonk foe he sought.

So rested he by the Syntax Tree

And APL'd in thought.

And as in on-line thought he stood,

the CODASYL of verbose fame,

Came parsing through the Chomsky wood,

And COBOL'ed as it came!

One, two! One, two! And through and through

The final pol at last drew NAK!

He left it dead, and with its head

He iterated back.

And hast thou downed old Ma Bell?

Come to my arms, my real-time boy!

Oh, Hollerith day! Array! Array!

He macroed in his joy.

'Twas Burroughs, and the ILLIACS

Did JOSS and SYSGEN in the stack;

All ANSI were the acronyms,

and the Eckert-Mauchly ENIAC.

-- William J. Wilson

The only reference to this on the web that I can find is at archive.org. This poem is 50 years old. I wonder who else, besides me, remembers it?

Quus, Redux

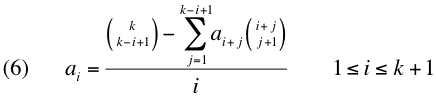

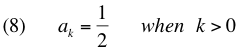

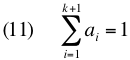

Philip Goff explores Kripke's quss function, defined as:

In English, if the two inputs are both less than 100, the inputs are added, otherwise the result is 5.

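```lisp
;; Threshold below which quss behaves like ordinary addition.
(defparameter N 100)

(defun quss (a b)
  "Add A and B when both are less than N; otherwise return 5."
  (if (and (< a N) (< b N))
      (+ a b)
      5))
```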

Goff then claims:

Rather, it’s indeterminate whether it’s adding or quadding.

This statement rests on some unstated assumptions. The calculator is a finite state machine. For simplicity, suppose the calculator has 10 digit keys, a function key (labelled "?" for mystery function), and an "=" key for the result. There is a three-character screen, so that any two three-digit numbers can be "quadded". The calculator can then accept 1000 × 1000 different input pairs. A larger finite state machine can query the calculator for all one million possible inputs, then collect and analyze the results. Given the definition of quss, the analyzer can then feed all one million possible inputs to quss and show that the output of quss matches the output of the calculator.
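The exhaustive comparison can be sketched in a few lines. Everything here is illustrative: `calculator` is a stand-in for the physical device (its internals are an assumption), `quss` restates the definition above, and `first_disagreement` is a hypothetical name for the analyzer.

```python
N = 100

def quss(a, b):
    """The definition above: add when both inputs are below N, else return 5."""
    return a + b if a < N and b < N else 5

def calculator(a, b):
    """Stand-in for the physical calculator, treated as a black box by the analyzer."""
    if max(a, b) >= 100:
        return 5
    return a + b

def first_disagreement(device, reference, limit=1000):
    """Query every input pair below `limit`; return the first mismatch, if any."""
    for a in range(limit):
        for b in range(limit):
            if device(a, b) != reference(a, b):
                return (a, b)
    return None

print(first_disagreement(calculator, quss))             # all one million pairs agree
print(first_disagreement(lambda a, b: a + b, quss))     # plain addition is caught
```

Over a finite input space the question "adding or quadding?" has a definite answer, because a device that merely adds is exposed at the first input pair where the two functions differ.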

Goff then tries to extend this result by making N larger than what the calculator can "handle". But this attempt fails, because if the calculator cannot handle bigN, then the conditionals (< a bigN) and (< b bigN) cannot be expressed, so the calculator can't implement quss on bigN. Since the function cannot even be implemented with bigN, it's pointless to ask what it's doing. Questions can only be asked about what the actual implementation is doing; not what an imagined unimplementable implementation is doing.

Goff then tries to apply this to brains and this is where the sleight of hand occurs. The supposed dichotomy between brains and calculators is that brains can know they are adding or quadding with numbers that are too big for the brain to handle. Therefore, brains are not calculators.

The sleight of hand is that our brains can work with the descriptions of the behavior, while most calculators are built with only the behavior. With calculators, and much software, the descriptions are stripped away so that only the behavior remains. But there exists software that works with descriptions to generate behavior. This technique is known as "symbolic computation". Programs such as Maxima, Mathematica, and Maple can know that they are adding or quadding because they can work from the symbolic description of the behavior. Like humans, they deal with short descriptions of large things.[1] We can't write out all of the digits in the number 10^120. But because we can manipulate short descriptions of big things, we can answer what quss would do with 10^120 as an input if bigN were 10^80. 10^80 is less than 10^120, so quss would return 5. Symbolic computation would give the same answer. But if we tried to do that with the actual numbers, we couldn't. When the thing described doesn't fit, it can't be worked on. Or, if the attempt is made, the old programming adage, Garbage In - Garbage Out, applies to humans and machines alike.
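Working from descriptions rather than digits can be illustrated with a toy. This sketch is not how Maxima, Mathematica, or Maple are implemented; `PowerOfTen` and `quss_symbolic` are hypothetical names, and the only "symbolic" step is comparing exponents instead of expanding the numbers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PowerOfTen:
    """A short description of 10**exponent; the digits are never written out."""
    exponent: int

    def __lt__(self, other):
        # Compare the descriptions, not the numbers they describe.
        return self.exponent < other.exponent

def quss_symbolic(a, b, big_n):
    """Describe what quss returns without evaluating any of the numbers."""
    if a < big_n and b < big_n:
        return ("sum", a, b)      # left as a description, not computed
    return 5

print(quss_symbolic(PowerOfTen(120), PowerOfTen(3), PowerOfTen(80)))
print(quss_symbolic(PowerOfTen(3), PowerOfTen(4), PowerOfTen(80)))
```

The first call answers 5 because the description 10^120 is not below the description 10^80; the second returns a description of a sum that is never expanded.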

[1] We deal with infinity via short descriptions, e.g. "10 goto 10". We don't actually try to evaluate this, because we know we would get stuck if we did. We tag it "don't evaluate". If we actually need a result with these kinds of objects, we get rid of the infinity by various finite techniques.

[2] This post title refers to a prior brief mention of quss here. In that post, I suggested looking at the wiring of a device to determine what it does. In this post, we look at the behavior of the device across all of its inputs to determine what it does. But we only do that because we don't embed a rich description of each behavior in most devices. If we did, we could simply ask the device what it is doing. Then, just as with people, we'd have to correlate their behavior with their description of their behavior to see if they are acting as advertised.

Truth, Redux

Logic is mechanical operations on distinct objects. At its simplest, logic is the selection of one object from a set of two (see "The road to logic", or "Boolean Logic"). Consider the logic operation "equivalence". If the two input objects are the same, the output is the first symbol in the 0th row ("lefty"). If the two input objects are different, the output is the first symbol in the 3rd row ("righty").

If this were a class in logic, the meaningless symbols "lefty" and "righty" would be replaced by "true" and "false".

But we can't do this. Yet. We have to show how to go from the meaningless symbols "lefty" and "righty" to the meaningful symbols "T" and "F". The lambda calculus shows us how. The lambda calculus describes a universal computing device using an alphabet of meaningless symbols and a set of symbols that describe behaviors. And this is just what we need, because we live in a universe where atoms do things. "T" and "F" need to be symbols that stand for behaviors.

We look at these symbols, we recognize that they are distinct, and we see how to combine them in ways that make sense to our intuitions. But we don't know we do it. And that's because we're "outside" these systems of symbols looking in.

Put yourself inside the system and ask, "what behaviors are needed to produce these results?" For this particular logic operation, the behavior is "if the other symbol is me, output T, otherwise output F". So you need a behavior where a symbol can positively recognize itself and negatively recognize the other symbol. Note that the behavior of T is symmetric with F. "T positively recognizes T and negatively recognizes F. F positively recognizes F and negatively recognizes T." You could swap T and F in the output column if desired. But once this arbitrary choice is made, it fixes the behavior of the other 15 logic combinations.

In addition, the lambda calculus defines true and false as behaviors.[1] It just does it at a higher level of abstraction which obscures the lower level.
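The standard Church encoding shows what "true and false as behaviors" looks like in practice. The sketch below writes the lambda terms as Python lambdas; `label` is a hypothetical helper that asks a boolean to choose between two printable tags.

```python
TRUE = lambda x: lambda y: x       # behaves as "select the first"
FALSE = lambda x: lambda y: y      # behaves as "select the second"

NOT = lambda p: p(FALSE)(TRUE)
AND = lambda p: lambda q: p(q)(FALSE)
NAND = lambda p: lambda q: NOT(AND(p)(q))

def label(boolean):
    """Make a Church boolean visible by letting it select between two tags."""
    return boolean("T")("F")

for p in (TRUE, FALSE):
    for q in (TRUE, FALSE):
        print(label(p), label(q), "->", label(NAND(p)(q)))
```

Nothing in these definitions is a stored value; `TRUE` and `FALSE` are distinguished only by which of two things they select.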

In any case, nature gives us this behavior of recognition with electric charge. And with this ability to distinguish between two distinct things, we can construct systems that can reason.

[1] Electric Charge, Truth, and Self-Awareness. This was an earlier attempt to say what this post says. YMMV.

The Universe Inside

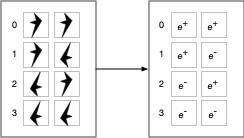

Let us replace distinguishable objects with objects that distinguish themselves:

Then the physical operation that determines if two elements are equal is:

As shown in the previous post, e+ (the positron and its behavior) and e- (the electron and its behavior) are the behaviors assigned to the labels "true" and "false". One could swap e+ and e-. The physical system would still exhibit consistent logical behavior. In any case, this physical operation answers the question "are we the same?", "Is this me?", because these fundamental particles are self-identifying.

From this we see that logical behavior - the selection of one item from a set of two - is fully determined behavior.

In contrast to logic, nature features fully undetermined behavior where a selection is made from a group at random. The double-slit experiment shows the random behavior of light as it travels through a barrier with two slits and lands somewhere on a detector.

In between these two options, there is partially determined, or goal-directed, behavior where selections are made from a set of choices that lead to a desired state. It is a mixture of determined and undetermined behavior. This is where the problem of teleology comes in. To us, moving toward a goal state indicates purpose. But what if the goal is chosen at random? Another complication is that, while random events are unpredictable, sequences of random events have predictable behavior. Over time, a sequence of random events will tend to its expected value. We are faced with having to decide if randomness indicates no purpose or hidden purpose, agency or no agency.

In this post, from 2012, I made a claim about a relationship between software and hardware. In the post, "On the Undecidability of Materialism vs. Idealism", I presented an argument using the Lambda Calculus to show how software and hardware are so entwined that you can't physically take them apart. This low-level view of nature reinforces these ideas. All logical operations are physical (all software is hardware). Not all physical operations are logical (not all hardware is software). Computing is behavior and the behavior of the elementary particles cannot be separated from the particles themselves. If we're going to choose between idealism and physicalism, it must be based on a coin flip2.

If computers are built of logic "circuits" then computer behavior ought to be fully determined. But when we add peripherals to the system and inject random behavior (either in the program itself, or from external sources), we get non-logical behavior in addition to logic. If a computer is a microcosm of the brain, the brain is a microcosm of the universe.

[1] Quarks have fractional charge, but quarks aren't found outside the atomic nucleus. The strong nuclear force keeps them together. Electrons and positrons are elementary particles.

[2] Dualism might be the only remaining choice, but I think that dualism can't be right. That's a post for another day.

Searle's Chinese Room Argument

[Work in progress... revisions to come]

Searle's "Minds, Brains, and Programs" is an attempt to show that computers cannot think the way humans do and so any effort to create human-level artificial intelligence is doomed to failure. Searle's argument is clearly wrong. What I found most interesting in reading his paper is that Searle's understanding of computers and programs can be shown to be wrong without considering the main part of the argument at all! Of course, it is possible that his main argument is right and only his subsequent commentary is in error, so the main mistakes in both will be demonstrated. Then several basic, but dangerous, ideas in his paper will be exposed.

On page 11, Searle presents a dialog where he answers the following five questions (numbers added for convenience for later reference):

1) "Could a machine think?"

The answer is, obviously, yes. We are precisely such machines.

2) "Yes, but could an artifact, a man-made machine think?"

Assuming it is possible to produce artificially a machine with a nervous system, neurons with axons and dendrites, and all the rest of it, sufficiently like ours, again the answer to the question seems to be obviously, yes. If you can exactly duplicate the causes, you could duplicate the effects. And indeed it might be possible to produce consciousness, intentionality, and all the rest of it using some other sorts of chemical principles than those that human beings use. It is, as I said, an empirical question.

3) "OK, but could a digital computer think?"

If by "digital computer" we mean anything at all that has a level of description where it can correctly be described as the instantiation of a computer program, then again the answer is, of course, yes, since we are the instantiations of any number of computer programs, and we can think.

4) "But could something think, understand, and so on solely in virtue of being a computer with the right sort of program? Could instantiating a program, the right program of course, by itself be a sufficient condition of understanding?"

This I think is the right question to ask, though it is usually confused with one or more of the earlier questions, and the answer to it is no.

5) "Why not?"

Because the formal symbol manipulations by themselves don't have any intentionality; they are quite meaningless; they aren't even symbol manipulations, since the symbols don't symbolize anything. In the linguistic jargon, they have only a syntax but no semantics. Such intentionality as computers appear to have is solely in the minds of those who program them and those who use them, those who send in the input and those who interpret the output.

With 1), by saying that we are thinking machines, we introduce the "Church-Turing thesis", which states that if a calculation can be done by a human using pencil and paper, then it can also be done by a Turing machine. If Searle concludes that humans can do something that computers cannot, even in theory, do, then he will have to deny the C-T thesis and show that what brains do is fundamentally different from what machines do: that brains are different in kind and not in degree.

With 2), Searle runs afoul of computability theory, as brilliantly expressed by Feynman (Simulating Physics with Computers):

Computer theory has been developed to a point where it realizes that it doesn't make any difference; when you get to a universal computer, it doesn't matter how it's manufactured, how it's actually made.

So 2) doesn't help his argument and, by extension, nor does 3). It doesn't matter if the computer is digital or not (but, see here).

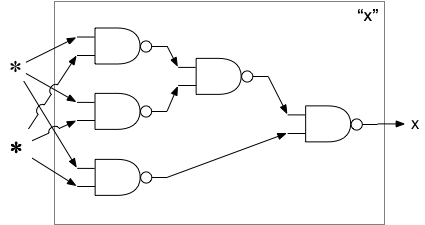

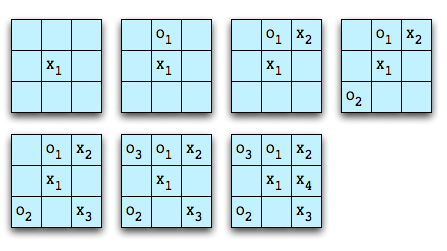

4) is based on a misunderstanding between "computer" and "program". We are so used to creating and running different programs on one piece of hardware that we think that there is some kind of difference between the two. But it's all hardware. Instead of the link to an article I wrote, let me demonstrate this. One of the fundamental aspects of computing is combination and selection. Binary logic is the mechanical selection of one object from two inputs. Consider this way, out of sixteen possible ways, to select one item. Note that it doesn't matter what the objects being selected are. Here we use "x" and "o", but the objects could be bears and bumblebees, or high and low pressure streams of electrons or water.

Table 1

| object 1 | object 2 | result |
|----------|----------|--------|
| x        | x        | o      |
| x        | o        | x      |
| o        | x        | x      |
| o        | o        | x      |

Clearly, you can't get something from nothing, so the two cases where an object is given as a result when it isn't part of the input require a bit of engineering. Nevertheless, these devices can be built.
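To make the selection concrete, Table 1 can be sketched as a Common Lisp function. The names `select` and `table-1` are illustrative assumptions, and the symbols `x` and `o` stand in for whatever physical objects a real device would use. If "x" is read as 1 and "o" as 0, this is the operation usually called NAND.

```lisp
;; Table 1 as a selection device: the result is o only when both inputs are x.
(defun select (a b)
  (if (and (eq a 'x) (eq b 'x))
      'o
      'x))

;; List every row of the table as (object-1 object-2 result).
(defun table-1 ()
  (loop for a in '(x o)
        append (loop for b in '(x o)
                     collect (list a b (select a b)))))
```

Calling `(table-1)` returns the four rows in the same order as the table: `((X X O) (X O X) (O X X) (O O X))`.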

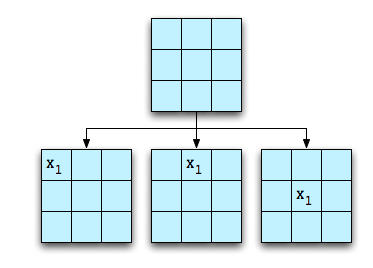

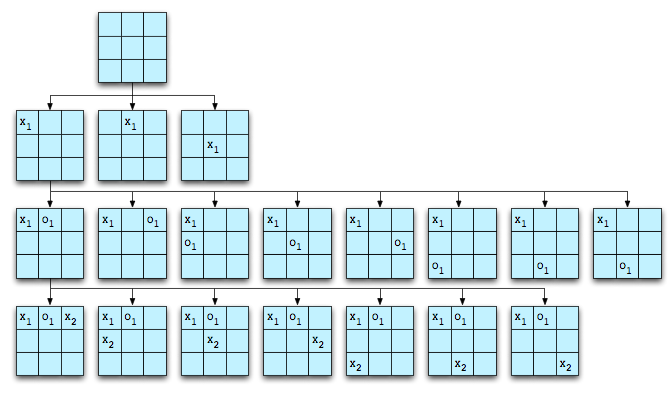

Along with combination and selection another aspect of computation is composition. Let's convert the above table to a device that implements the selection given two inputs in a form that can be composed again and again.

We can arrange these devices so that, regardless of which of the two inputs are given (represented by "✽"), the output is always "x":

We can also make an arrangement so that the output is always "o", regardless of which inputs are used:

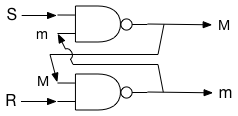
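The wiring in the diagrams is not reproduced in text, so the following is only one possible arrangement that has the stated property, not necessarily the one pictured. It assumes a `select` function that implements Table 1; `flip`, `always-x`, and `always-o` are hypothetical names.

```lisp
;; Table 1: the result is o only when both inputs are x.
(defun select (a b)
  (if (and (eq a 'x) (eq b 'x))
      'o
      'x))

;; Feeding the same input to both sides swaps x and o.
(defun flip (a)
  (select a a))

;; An input combined with its own flip always yields x.
(defun always-x (a)
  (select a (flip a)))

;; Flipping that result always yields o.
(defun always-o (a)
  (flip (always-x a)))
```

Whichever object is supplied, `(always-x 'x)` and `(always-x 'o)` both give `X`, and `(always-o 'x)` and `(always-o 'o)` both give `O`. Each arrangement is built from nothing but copies of the one selection device.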

We now have all of the "important"1 aspects of a computer. If we replace "x" with 1 and "o" with 0 then we have all of the computational aspects of a binary computer. Every program is an arrangement of combination and selection operations. If it were economically feasible, we could build a unique network for every program. But the complexity would be overwhelming. One complicating factor is that the above does not support writeable memory. To go from a 0 to a 1 requires another node in the network, plus all of the machine states that can get to that node. For the fun of it, we show how the same logic device that combines two objects according to Table 1 can be wired together to provide memory:

By design, S and R are initially "x", which means M and m are undefined. A continuous stream of "x" is fed into S and R.

Suppose S is set to "o" before resuming the stream of "x". Then:

S = o, M = x, R = x, m = o, S = x, M = x. This shows that when S is set to "o", M becomes "x" and stays "x".

Suppose R is set to "o" before resuming the stream of "x". Then:

R = o, m = x, S = x, M = o, R = x, m = x. This shows that when R is set to "o", M becomes "o" and stays "o", until S is reset to "o".
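The two traces above can be checked with a small simulation. This is a sketch under stated assumptions: it takes M to be the Table 1 combination of S and m, and m to be the Table 1 combination of R and M, which is what the traces imply. The names `settle` and `run-latch`, and the default starting value of m, are hypothetical.

```lisp
;; Table 1: the result is o only when both inputs are x.
(defun select (a b)
  (if (and (eq a 'x) (eq b 'x))
      'o
      'x))

;; Let the cross-coupled pair settle for the inputs S and R.
;; M is computed from S and the old m, m from R and M, then M once more.
(defun settle (s r old-m-bar)
  (let* ((m1 (select s old-m-bar))
         (m-bar (select r m1))
         (m2 (select s m-bar)))
    (values m2 m-bar)))

;; Feed a list of (S R) pairs through the latch; collect M after each pair.
;; The starting value of m is an arbitrary assumption.
(defun run-latch (inputs &optional (m-bar 'x))
  (let ((history '()))
    (dolist (pair inputs (nreverse history))
      (multiple-value-bind (m new-m-bar)
          (settle (first pair) (second pair) m-bar)
        (setf m-bar new-m-bar)
        (push m history)))))
```

For example, `(run-latch '((o x) (x x) (x o) (x x)))` returns `(X X O O)`: setting S to "o" makes M "x", which persists while both inputs are "x", and setting R to "o" makes M "o", which persists in the same way.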

This shows that every program is a specification of the composition of combination and selection elements. The difference between a "computer" and a "program" is that programs are static forms of the computer: the arrangement of the elements is specified, but the symbols aren't flowing through the network.2 This means that if 1) and 2) are true, then the wiring of the brain is a program, just like each program is a logic network.

Finally, we deal with objection 5). Searle is wrong that there is no "intentionality" in the system. The "intentionality" is the way the symbols flow through the network and how they eventually interact with the external environment. Searle is right that the symbols are meaningless. So how do we get meaning from meaningless? For this, we turn to the Lambda Calculus (also here, and here: part 1, part 2). Meaning is simply a "this is that" relation. The Lambda Calculus shows how data can be associated with symbols. Our brains take in data from our eyes and associate it with a symbol. Our brains take in data from our ears and associate it with another symbol. If what we see is coincident with what we hear, we can add a further association between the "sight" symbol and the "hearing" symbol.3

The problem, then, is not with meaning, but with the ability to distinguish between symbols. The Lambda Calculus assumes this ability. We get meaning out of meaningless symbols because we can distinguish between symbols. Being able to distinguish between things is built into Nature: positive and negative charge is one example.

So Searle's dialog fails, unless you deny the first point and hold that we are not thinking machines. That is, we are something else that thinks. But this denies what we know about brain physiology.

But suppose that Searle disavows his dialog and asks that his thesis be judged solely on his main argument.

[1] This is not meant to make light of all of the remaining hard parts of building a computer. But those details are to support the process of computation: combination, selection, and composition.

[2] One could argue that the steps for evaluating Lambda expressions (substitution, alpha reduction, beta reduction) are the "computer" and that the expressions to be evaluated are the "program". But the steps for evaluating Lambda expressions can be expressed as Lambda expressions. John McCarthy essentially did this with the development of the LISP programming language (here particularly section 4, also here). Given the equivalence of the Lambda Calculus, Turing machines, and implementations of Turing machines using binary logic, the main point stands.

[3] This is why there is no problem with "qualia".

Goto considered harmful

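    ;; Read values until the sentinel 99999; return them in input order.
    (defun read-input (&optional (result nil))
      (let ((value (read)))
        (if (equalp value 99999)
            (reverse result)
            (read-input (cons value result)))))

    ;; Return the mean of NUMBERS, or NIL when the list is empty.
    (defun average (numbers)
      (let ((n (length numbers)))
        (if (zerop n)
            nil
            (/ (reduce #'+ numbers) n))))

    ;; Print the average of the numbers read, or a message if there were none.
    (defun main ()
      (let ((avg (average (read-input))))
        (if avg
            (format t "~f~%" (float avg))
            (format t "no numbers input. average undefined.~%"))))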

Plato, Church, and Turing

The lambda calculus is the "platonic form" of a Turing machine

— wrf3

I wrote this over at Edward Feser's blog in a comment to Gödel and the mechanization of thought. Google doesn't find it anywhere in this exact form, but this post appears to express the same idea. The components of this idea can be found elsewhere, but it isn't stated as directly as here.

Ancient Publication

The text of the article and the source code is below the fold. I wish I had my original work to show how it was edited for publication.


Dijkstra et al. on software development

He said many more good things in that paper.

To the economic question "Why is software so expensive?" the equally economic answer could be "Because it is tried with cheap labour." Why is it tried that way? Because its intrinsic difficulties are widely and grossly underestimated.

A similar comment was made on SlashDot yesterday:

The truth is that no one really knows how to do [software development] right. That is why the methodologies switch around every few years. — "110010001000"

The Halting Problem and Human Behavior

It is obvious that the function

    (defun P1 ()
      "Hello, World!")

will return the string "Hello, World!" and halt.

It is also obvious that the function

    (defun P2 ()
      (P2))

never halts. Or is it obvious? Depending on how the program is translated, it might eventually run out of stack space and crash. But, for ease of exposition, we'll ignore this kind of detail, and put P2 in the "won't halt" column.

What about this function?

    (defun P3 (n)
      (when (> n 1)
        (if (evenp n)
            (P3 (/ n 2))
            (P3 (+ (* 3 n) 1)))))

Whether or not, for all N greater than 1, this sequence converges to 1 is an unsolved problem in mathematics (see The Collatz Conjecture). It's trivial to test the sequence for many values of N. We know that it converges to 1 for N up to 1,000,000,000 (actually, higher, but one billion is a nice number). So part of the test for whether or not P3 halts might be:

    (defun halt? (Pn Pin)
      …
      (if (and (is-program Pn collatz-function) (<= 1 Pin 1000000000))
          t
          …)

But what about values greater than one billion? We can't run a test case because it might not stop and so halt? would never return.
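The claim that the sequence is trivial to test for particular values of N can be sketched as a bounded check. The function names `collatz-steps` and `all-reach-one-p` and the step budget are illustrative assumptions, and N is assumed to be a positive integer. A NIL result only means the budget ran out; it says nothing about whether the sequence would eventually reach 1, which is exactly why a test run cannot stand in for halt?.

```lisp
;; Count the steps for N to reach 1, giving up after MAX-STEPS.
;; Returns the step count, or NIL if the budget runs out first.
(defun collatz-steps (n &optional (max-steps 1000))
  (loop for steps from 0 to max-steps
        for k = n then (if (evenp k) (/ k 2) (+ (* 3 k) 1))
        when (= k 1) return steps
        finally (return nil)))

;; True when every N from 1 to LIMIT reaches 1 within the step budget.
(defun all-reach-one-p (limit &optional (max-steps 1000))
  (loop for n from 1 to limit
        always (collatz-steps n max-steps)))
```

For example, `(collatz-steps 6)` returns 8 and `(collatz-steps 27)` returns 111, while `(collatz-steps 27 10)` returns NIL because ten steps are not enough.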

We can show that a general algorithm to determine whether or not any arbitrary function halts does not exist using an easy proof.

Suppose that halt? exists. Now create this function:

    (defun snafu ()
      (if (halt? snafu nil)
          (snafu)))

If halt? says that snafu halts, then snafu will loop forever. If halt? says that snafu will loop, snafu will halt. This shows that the function halt? can't exist when knowledge is symmetrical.

As discussed here, Douglas Hofstadter, in Gödel, Escher, Bach, wrote:

It is an inherent property of intelligence that it can jump out of the task which it is performing, and survey what it is done; it is always looking for, and often finding, patterns. (pg. 37)

Over 400 pages later, he repeats this idea:

This drive to jump out of the system is a pervasive one, and lies behind all progress and art, music, and other human endeavors. It also lies behind such trivial undertakings as the making of radio and television commercials. (pg. 478).

This behavior can be seen in looking at the halting problem. After all, one is tempted to say, "Wait a minute. What if I take the environment in which halt? is called into account? halt? could say, 'when I'm analyzing a program and I see it trying to use me to change the outcome of my prediction, I'll return that the program will halt, but when I'm running as a part of snafu, I'll return false. That way, when snafu is running, it will then halt and so the analysis will agree with the execution.'" We have "jumped out of the system" and made use of information not available to snafu, and solved the problem.

Except that we haven't. The moment we formally extend the definition of halt? to include the environment, then snafu can make use of it to thwart halt?

    (defun snafu-extended ()
      (if (halt? snafu-extended nil 'running)
          (snafu-extended)))

We can say that our brains view halt? and snafu as two systems that compete against each other: halt? to determine the behavior of snafu and snafu to thwart halt?. If halt? can gain information about snafu that snafu does not know, then halt? can get the upper hand. But if snafu knows what halt? knows, snafu can get the upper hand. At what point do we say, "this is madness?" and attempt to cooperate with each other?

I am reminded of the words of St. Paul:

Knowledge puffs up, but love builds up. — 1 Cor 8:1b

The "Problem" of Qualia

[updated 25 July 2022 to provide more detail on "Blind Mary"]

[updated 5 August 2022 to provide reference to Nobel Prize for research into touch and heat; note that movie Ex Machina puts the "Blind Mary" experiment to film]

[updated 6 January 2024 to say more about philosophical zombies]

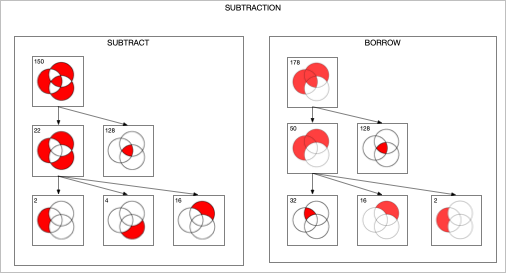

"Qualia" is the term given to our subjective sense impressions of the world. How I see the color green might not be how you see the color green, for example. From this follow flawed arguments which try to show how qualia are supposedly a problem for a "physicalist" explanation of the world.

The following diagram shows an idealized process by which different colors of light enter the eye and are converted into "qualia" -- the brain's internal representation of the information. Obviously the brain doesn't represent color as 10 bits of information. But the principle remains the same, even if the actual engineering is more complex.


Modeling the Brain

The "autonomous" section is concerned with the functions of the brain that operate apart from conscious awareness or control. It will receive no more mention.

The "introspection" division monitors the goal processing portion. It is able to monitor and describe what the goal processing section is doing. The ability to introspect the goal processing unit is what gives us our "knowledge of good and evil." See What Really Happened In Eden, which builds on The Mechanism of Morality. I find it interesting that recent studies in neuroscience show:

But here is where things get interesting. The subjects were not necessarily consciously aware of their decision until they were about to move, but the cortex showing they were planning to move became activated a full 7 seconds prior to the movement. This supports prior research that suggests there is an unconscious phase of decision-making. In fact many decisions may be made subconsciously and then presented to the conscious bits of our brains. To us it seems as if we made the decision, but the decision was really made for us subconsciously.

The goal processing division is divided into two opposing parts. In order to survive, our biology demands that we reproduce, and reproduction requires food and shelter, among other things. We generally make choices that allow our biology to function. So part of our brain is concerned with selecting and achieving goals. But the other part of our brain is based on McCarthy's insight that one of the requirements for a human-level artificial intelligence is that "All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable." I suspect McCarthy was thinking along the lines of Getting Computers to Learn. However, I think it goes far beyond this and explains much about the human mind. In particular, introspection of the drive to improve leads to the idea that nothing is what it ought to be and gives rise to the is-ought problem. If one part of the goal processing unit is focused on achieving goals, this part is focused on creating new goals. As the arrows in the diagram show, if one unit focuses toward specific goals, the other unit focuses away from specific goals. Note that no two brains are the same -- individuals have different wiring. In some, the goal fixation process will be stronger; in others, the goal creation process will be stronger. The former leads to the drudge, the latter to the dreamer. Our minds are in dynamic tension.

Note that the goal formation portion of our brains, the unit that wants to "jump outside the system" is a necessary component of our intelligence.

On The Difference Between Hardware and Software

Five Seconds of Fame

McCarthy, Hofstadter, Hume, AI, Zen, Christianity

For example, in Gödel, Escher, Bach, Hofstadter writes:

It is an inherent property of intelligence that it can jump out of the task which it is performing, and survey what it is done; it is always looking for, and often finding, patterns. (pg. 37)

Over 400 pages later, he repeats this idea:

This drive to jump out of the system is a pervasive one, and lies behind all progress and art, music, and other human endeavors. It also lies behind such trivial undertakings as the making of radio and television commercials. (pg. 478).

It seems to me that McCarthy's third requirement is behind this drive to "jump out" of the system. If a system is to be improved, it must be analyzed and compared with other systems, and this requires looking at a system from the outside.

Hofstadter then ties this in with Zen:

In Zen, too, we can see this preoccupation with the concept of transcending the system. For instance, the kōan in which Tōzan tells his monks that "the higher Buddhism is not Buddha". Perhaps, self transcendence is even the central theme of Zen. A Zen person is always trying to understand more deeply what he is, by stepping more and more out of what he sees himself to be, by breaking every rule and convention which he perceives himself to be chained by – needless to say, including those of Zen itself. Somewhere along this elusive path may come enlightenment. In any case (as I see it), the hope is that by gradually deepening one's self-awareness, by gradually widening the scope of "the system", one will in the end come to a feeling of being at one with the entire universe. (pg. 479)

Note the parallels to, and differences with, Christianity. Jesus said to Nicodemus, "You must be born again." (John 3:3) The Greek includes the idea of being born "from above" and "from above" is how the NRSV translates it, even though Nicodemus responds as if he heard "again". In either case, you must transcend the system. The Zen practice of "breaking every rule and convention" is no different from St. Paul's charge that we are all lawbreakers (Rom 3:9-10,23). The reason we are lawbreakers is that the law is not what it ought to be. And it is not what it ought to be because of our inherent knowledge of good and evil which, if McCarthy is right, is how our brains are wired. Where Zen and Christianity disagree is that Zen holds that man can transcend the system by his own effort while Christianity says that man's effort is futile: God must effect that change. In Zen, you can break outside the system; in Christianity, you must be lifted out.

Note, too, that both have the same end goal, where finally man is at "rest". The desire to "step out" of the system, to continue to "improve", is finally at an end. The "is-ought" gap is forever closed. The Zen master is "at one with the entire universe" while for the Christian, the New Jerusalem has descended to Earth, the "sea of glass" that separates heaven and earth is no more (Rev 4:6, 21:1) so that "God may be all in all." (1 Cor 15:28). Our restless goal-seeking brain is finally at rest; the search is over.

All of this as a consequence of one simple design requirement: that everything must be improvable.

The Is-Ought Problem Considered As A Question Of Artificial Intelligence

In every system of morality, which I have hitherto met with, I have always remarked, that the author proceeds for some time in the ordinary way of reasoning, and establishes the being of a God, or makes observations concerning human affairs; when of a sudden I am surprized to find, that instead of the usual copulations of propositions, is, and is not, I meet with no proposition that is not connected with an ought, or an ought not. This change is imperceptible; but is, however, of the last consequence. For as this ought, or ought not, expresses some new relation or affirmation, it is necessary that it should be observed and explained; and at the same time that a reason should be given, for what seems altogether inconceivable, how this new relation can be a deduction from others, which are entirely different from it.

This is the "is-ought" problem: in the area of morality, how to derive what ought to be from what is. Note that it is the domain of morality that seems to be the cause of the problem; after all, we derive ought from is in other domains without difficulty. Artificial intelligence research can show why the problem exists in one field but not others.

The is-ought problem is related to goal attainment. We return to the game of Tic-Tac-Toe as used in the post The Mechanism of Morality. It is a simple game, with a well-defined initial state and a small enough state space that the game can be fully analyzed. Suppose we wish to program a computer to play this game. There are several possible goal states:

- The computer will always try to win.

- The computer will always try to lose.

- The computer will play randomly.

- The computer will choose between winning or losing based upon the strength of the opponent. The more games the opponent has won, the more the computer plays to win.

As another example, suppose we wish to drive from point A to point B. The final goal is well established but there are likely many different paths between A and B. Additional considerations, such as shortest driving time, the most scenic route, the location of a favorite restaurant for lunch, and so on influence which of the several paths is chosen.

Therefore, we can characterize the is-ought problem as a beginning state B, an end state E, a set P of paths from B to E, and a set of conditions C. Then "ought" is the path in P that satisfies the constraints in C. Therefore, the is-ought problem is a search problem.
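The characterization above can be sketched as a small search. The route data and the names `*paths*` and `ought` are hypothetical, made up for illustration. The sketch also adds one assumption that the text does not state: when several paths satisfy every condition in C, a cost function breaks the tie.

```lisp
;; Hypothetical example data: each path from B to E is a property list.
(defparameter *paths*
  '((:name highway  :minutes 30 :scenic nil)
    (:name coast    :minutes 55 :scenic t)
    (:name backroad :minutes 45 :scenic t)))

;; "Ought" as search: keep the paths that satisfy every condition in C,
;; then pick the remaining path with the lowest cost (NIL if none remain).
(defun ought (paths conditions cost)
  (let ((candidates (remove-if-not
                     (lambda (path)
                       (every (lambda (condition) (funcall condition path))
                              conditions))
                     paths))
        (best nil))
    (dolist (path candidates best)
      (when (or (null best)
                (< (funcall cost path) (funcall cost best)))
        (setf best path)))))
```

With no conditions, `(ought *paths* '() (lambda (p) (getf p :minutes)))` selects the `highway` entry. Adding the condition `(lambda (p) (getf p :scenic))` changes the answer to `backroad`. The "is" (the set of paths) is the same in both calls; only the conditions differ, and so does the "ought".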

The game of Tic-Tac-Toe is simple enough that the game can be fully analyzed - the state space is small enough that an exhaustive search can be made of all possible moves. Games such as Chess and Go are so complex that they haven't been fully analyzed so we have to make educated guesses about the set of paths to the end game. The fancy name for these guesses is "heuristics" and one aspect of the field of artificial intelligence is discovering which guesses work well for various problems. The sheer size of the state space contributes to the difficulty of establishing common paths. Assume three chess programs, White1, White2, and Black. White1 plays Black, and White2 plays Black. Because of different heuristics, White1 and White2 would agree on everything except perhaps the next move that ought to be made. If White1 and White2 achieve the same won/loss record against Black, the only way to know which program had the better heuristic would be to play White1 against White2. Yet even if a clear winner was established, there would still be the possibility of an even better player waiting to be discovered. The sheer size of the game space precludes determining "ought" with any certainty.

The metaphor of life as a game (in the sense of achieving goals) is apt here and morality is the set of heuristics we use to navigate the state space. The state space for life is much larger than the state space for chess; unless there is a common set of heuristics for living, it is clearly unlikely that humans will choose the same paths toward a goal. Yet the size of the state space isn't the only contributing factor to the problem of establishing oughts with respect to morality. A chess program has a single goal - to play chess according to some set of conditions. Humans, however, are not fixed-goal agents. This claim rests on John McCarthy's five design requirements for human level artificial intelligence as detailed here and here. In brief, McCarthy's third requirement was "All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable." What this means for a self-aware agent is that nothing is what it ought to be. The details of how this works out in our brains are unclear; but part of our wetware is not satisfied with the status quo. There is an algorithmic "pressure" to modify goals. This means that the gap between is and ought is an integral part of our being which is compounded by the size of the state space. Not only is there the inability to fully determine the paths to an end state, there is also the impulse to change the end states and the conditions for choosing among candidate paths.

What also isn't clear is the relationship between reason and this sense of "wrongness." Personal experience is sufficient to establish that there are times we know what the right thing to do is, yet we do not do it. That is, reason isn't always sufficient to stop our brain's search algorithm. Since Hume mentioned God, it is instructive to ask the question, "why is God morally right?" Here, "God" represents both the ultimate goal and the set of heuristics for obtaining that goal. This means that, by definition, God is morally right. Yet the "problem" of theodicy shows that in spite of reason, there is no universally agreed upon answer to this question. The mechanism that drives goal creation is opposed to fixed goals, of which "God" is the ultimate expression.

In conclusion, the "is-ought" gap is algorithmic in nature. It exists partly because of the inability to fully search the state space of life and partly because of the way our brains are wired for goal creation and goal attainment.

Cybertheology

I use the “cyber” prefix because of its relation to computer science. Theology and computer science are related because both deal, in part, with intelligence. Christianity asserts that, whatever else God is, God is intelligence/λογος. The study of artificial intelligence is concerned with detecting and duplicating intelligence. Evidence for God would then deal with evidence for intelligence in nature. I don’t believe it is a coincidence that Jesus said, “My sheep hear my voice” [John 10:27] and the Turing test is the primary test for human level AI.

Beyond this, the Turing test seems to say that the representation of intelligence is itself intelligent. This may have implications with the Christian doctrine of the Trinity, which holds that “what God says” is, in some manner, “what God is.”

I also think that science can inform morality. At a minimum, as I’ve tried to show here, morality can be explained as goal-seeking behavior, which is also a familiar topic in artificial intelligence. Furthermore, using this notion of morality as being goal seeking behavior, combined with John McCarthy’s five design requirements for a human level AI, explains the Genesis account of the Fall in Eden. This also gives clues to the Christian doctrine of “original sin,” a post I hope to write one of these days.

If morality is goal-seeking behavior, then the behavior prescribed by God would be consistent with any goal, or goals, that can be found in nature. Biology tells us that the goal of life is to survive and reproduce. God said “be fruitful and multiply.” [Gen 1:22, 28; 8:17, 9:1...] This is a point of intersection that I think will provide surprising results, especially if Axelrod’s “Evolution of Cooperation” turns out like I think it will.

I also think that game theory can be used to analyze Christianity. Game theory is based on analyzing how selfish agents can maximize their own payoffs when interacting with other selfish agents. I think that Christianity tells us to act selflessly -- that we are to maximize the payoffs of those we interact with. This should be an interesting area to explore. One topic will be bridging the gap between the selfish agents of game theory and the selfless agents of Christianity. I believe that this, too, can be solved.

This may be wishful thinking on the part of a lunatic (or maybe I’m just a simpleton), but I also think that we can go from what we see in nature to the doctrine of justification by faith.

Finally, we look to nature to incorporate its designs into our own technology. If a scientific case can be made for the truth of Christianity, especially as an evolutionary survival strategy, what implications ought that have on public policy?

Ancient Victories

I found this dollar bill attesting to bets won from the hardware group. I hope the Feds don’t get too upset that I scanned this.

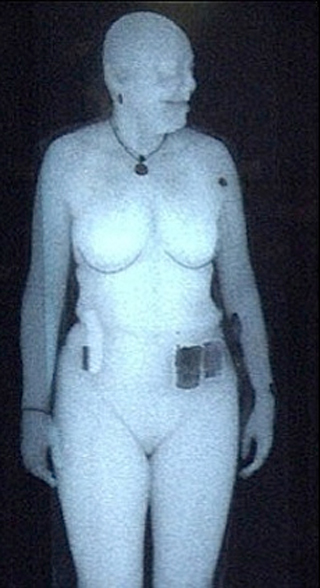

TSA Images

- The TSA says that the scanners cannot save the images, yet Gizmodo shows 100 images from 35,000 illegally saved images from a courthouse in Orlando, Florida.

- Scientists are speaking out about the supposed safety of the devices, for example, this letter from four faculty members of the University of California at San Francisco.

- The threat of a $10,000 civil suit against a man who refused scanning and then told a TSA agent that he would file a sexual assault case were the agent to touch his “junk.” He was told to leave the airport by TSA agents and then, after receiving a ticket refund from American Airlines, was threatened with a lawsuit by another TSA agent.

- One Florida airport is opting out of using the TSA to screen passengers.

What I did was, I created a grayscale image of it in GIMP. I applied selective gaussian blur (I don't remember what exact values.) I removed the necklace, the watch and the objects at the belt by using clone brush tool. Then I used this tutorial to apply it as a height map to a grid with resolution 400*400, and scaled the height and the depth dimensions to what looked correct.

Looking at the model from the side, I selected the lowest vertices with rectangle select and deleted them. Then came the artistic part (in addition to the removal of the objects, but if the person has none, this is not a problem), which is hard but not impossible to do as an algorithm: I smoothed the roughness in sculpt mode, until I was satisfied with the model. I placed a blueish hemisphere light over the model, and a white, omnidirectional light in the direction where the sun would be. I colored the material to skin color and lowered specularity to almost none.

Had I used more time, I could have made the skin look much more realistic. This was just a proof of concept. And when you have made a realistic skin texture once, you could apply it to any model without extra effort.

He then added:

Still far from photorealism, but won't leave many secrets about your body unrevealed, either. Plus, increase radiation resolution, and you could almost certainly reconstruct even the face.

Note: I modified the source image in Photoshop by cropping and resizing to better match Markku’s result.

The Mechanism of Morality

Suppose we want to teach a computer to play the game of Tic-Tac-Toe. Tic-Tac-Toe is a game between two players that takes place on a 3x3 grid. Each player has a marker, typically X and O, and the object is for a player to get three markers in a row: horizontally, vertically, or diagonally.

One possible game might go like this:

Player X wins on the fourth move. Player O lost the game on the first move since every subsequent move was an attempt to block a winning play by X. X set an inescapable trap on the third move by creating two simultaneous winning positions.

In general, game play starts with an initial state, moves through intermediate states, and ends at a goal state. For a computer to play a game, it has to be able to represent the game states and determine which of those states advance it toward a goal state that results in a win for the machine.

Tic-Tac-Toe has a game space that is easily analyzed by “brute force.” For example, beginning with an empty board, there are three moves of interest for the first player:

The other possible starting moves can be modeled by rotation of the board. The computer can then expand the game space by making all of the possible moves for player O. Only a portion of this will be shown:

The game space can be expanded until all goal states (X wins, O wins, or draw game) are reached. Including the initial empty board, there are 4,163 possible board configurations.

Assuming we want X to play a perfect game, we can “prune” the tree and remove those states that inevitably lead to a win by O. Then X can use the pruned game tree and choose those moves that lead to the greatest probability of a win. Furthermore, if we assume that O, like X, plays a perfect game, we can prune the tree again and remove the states that inevitably lead to a win by X. When we do this, we find that Tic-Tac-Toe always results in a draw when played perfectly.
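The pruning argument can be checked with a few lines of code. What follows is a minimal sketch, not the program behind the figures above: it uses plain minimax (each side picks its best outcome) rather than an explicit pruned tree, and the board encoding (a 9-character string of `X`, `O`, and spaces) and the names `winner` and `value` are my own assumptions for illustration.

```python
from functools import lru_cache

# The eight winning lines on a board stored as a 9-character string.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != ' ' and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board, player):
    """Value of a position with `player` to move, from X's point of view:
    +1 if X can force a win, -1 if O can force a win, 0 for a draw."""
    won = winner(board)
    if won == 'X':
        return 1
    if won == 'O':
        return -1
    if ' ' not in board:
        return 0  # full board, nobody won
    other = 'O' if player == 'X' else 'X'
    scores = [value(board[:i] + player + board[i + 1:], other)
              for i, square in enumerate(board) if square == ' ']
    # X picks the move that is best for X; O picks the one worst for X.
    return max(scores) if player == 'X' else min(scores)


print(value(' ' * 9, 'X'))  # 0: perfect play from the empty board is a draw
```

The same function also shows how a single bad move is a lost game: any position whose value is +1 with O to move is one from which O can no longer escape.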

While a human could conceivably evaluate the entire game space of 4,163 boards, most don’t play this way. Instead, the human player develops a set of “heuristics” to try to determine how close a particular board is to a goal state. Such heuristics might include “if there is a row with two X’s and an empty square, place an X in the empty square for the win” and “if there is a row with two O’s and an empty square, place an X in the empty square for the block.” More skilled players will include, “If there are two intersecting rows where the square at the intersection is empty and there is one X in each row, place an X in the intersecting square to set up a forced win.” A similar heuristic blocks a forced win by O. This is not a complete set of heuristics for Tic-Tac-Toe. For example, what should X’s opening move be?
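The heuristics just described can be written down directly. This is only an illustrative sketch under my own assumptions: the board is a 9-character string, the function names are hypothetical, “two intersecting rows” is read as two lines that each hold one of the player’s marks and are otherwise empty, and the priority order (win, block, set up a fork, block a fork) is one reasonable choice rather than anything the text prescribes.

```python
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def find_completion(board, mark):
    """Empty square that completes three in a row for `mark`, or None."""
    for line in LINES:
        marks = [board[i] for i in line]
        if marks.count(mark) == 2 and marks.count(' ') == 1:
            return line[marks.index(' ')]
    return None


def find_fork(board, mark):
    """Empty square on two lines that each hold exactly one `mark`
    and are otherwise empty, or None."""
    for square in range(9):
        if board[square] != ' ':
            continue
        hits = 0
        for line in LINES:
            if square in line:
                marks = [board[i] for i in line]
                if marks.count(mark) == 1 and marks.count(' ') == 2:
                    hits += 1
        if hits >= 2:
            return square
    return None


def heuristic_move(board, me):
    """Apply the heuristics in priority order; None means they do not
    cover this position (for example, the opening move)."""
    other = 'O' if me == 'X' else 'X'
    for candidate in (find_completion(board, me),     # take the win
                      find_completion(board, other),  # block the loss
                      find_fork(board, me),           # set up a forced win
                      find_fork(board, other)):       # block a forced win
        if candidate is not None:
            return candidate
    return None


print(heuristic_move('OO X  X  ', 'X'))  # 2: X must block the top row
print(heuristic_move(' ' * 9, 'X'))      # None: no rule for the opening
```

The `None` result on the empty board is the gap the text points out: these rules say nothing about X’s opening move.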

Games like Chess, Checkers, and Go have much larger game spaces than Tic-Tac-Toe. So large, in fact, that it’s difficult, if not impossible, to generate the entire game tree. Just as the human needs heuristics for evaluating board positions to play Tic-Tac-Toe, the computer requires heuristics for Chess, Checkers, and Go. Humans expend a great deal of effort developing board evaluation strategies for these games in order to teach the computer how to play well.

In any case, game play of this type is the same for all of these games. The player, whether human or computer, starts with an initial state, generates intermediate states according to the rules of the game, evaluates those states, and selects those that lead to a predetermined goal.

What does this have to do with morality? Simply this. If the computer were self aware and was able to describe what it was doing, it might say, “I’m here, I ought to be there, here are the possible paths I could take, and these paths are better (or worse) than those paths.” But “better” is simply English shorthand for “more good” and “worse” is “less good.” For a computer, “good” and “evil” are expressions of the value of states in goal-directed searches.

I contend that it is no different for humans. “Good” and “evil” are the words we use to describe the relationship of things to “oughts,” where “oughts” are goals in the “game” of life. Just as the computer creates possible board configurations in its memory in order to advance toward a goal, the human creates “life states” in its imagination.

If the human and the computer have the same “moral mechanism” -- searches through a state space toward a goal -- then why aren’t computers as smart as we are? Part of the reason is that computers have fixed goals. While the algorithm for playing Tic-Tac-Toe is exactly the same for playing Chess, the heuristics are different and so game playing programs are specialized. We have not yet learned how to create universal game-playing software. As Philip Jackson wrote in “Introduction to Artificial Intelligence”:

However, an important point should be noted: All these skillful programs are highly specific to their particular problems. At the moment, there are no general problem solvers, general game players, etc., which can solve really difficult problems ... or play really difficult games ... with a skill approaching human intelligence.

In Programs with Common Sense, John McCarthy gave five requirements for a system capable of exhibiting human order intelligence:

- All behaviors must be representable in the system. Therefore, the system should either be able to construct arbitrary automata or to program in some general-purpose programming language.

- Interesting changes in behavior must be expressible in a simple way.

- All aspects of behavior except the most routine should be improvable. In particular, the improving mechanism should be improvable.

- The machine must have or evolve concepts of partial success because on difficult problems decisive successes or failures come too infrequently.

- The system must be able to create subroutines which can be included in procedures in units...

That this seems to be a correct description of our mental machinery will be explored in future posts by showing how this models how we actually behave. As a teaser, this explains why the search for a universal morality will fail. No matter what set of “oughts” (goal states) is presented to us, our mental machinery automatically tries to improve it. But for something to be improvable, we have to deem it as being “not good,” i.e. away from a “better” goal state.

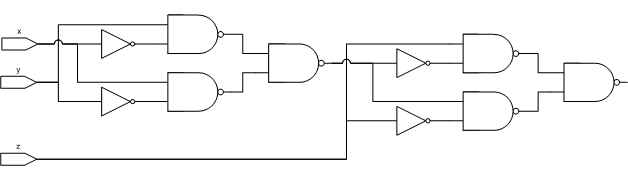

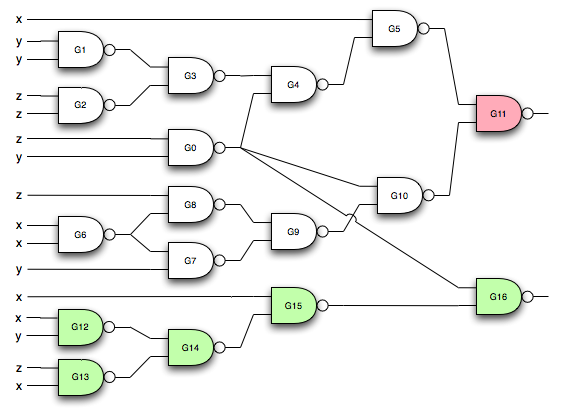

Boolean Expressions and Digital Circuits

Lee is a friend and co-worker who “used to design some pretty hairy discreet logic circuits back in the day.” He presented a circuit that used a mere 10 gates for the addition. Our circuits to compute the carry were identical.

The equation for the addition portion of his adder is:

(NAND (NAND (NAND (NAND (NAND (NAND X X) Y) (NAND (NAND Y Y) X))

(NAND (NAND (NAND X X) Y) (NAND (NAND Y Y) X))) Z)

(NAND (NAND Z Z) (NAND (NAND (NAND X X) Y) (NAND (NAND Y Y) X))))

His equation has 20 operators where mine had 14:

(NAND (NAND (NAND (NAND Z Y) (NAND (NAND Y Y) (NAND Z Z))) X)

(NAND (NAND (NAND (NAND X X) Y) (NAND (NAND X X) Z)) (NAND Z Y)))

Lee noted that his equation had a common term that is distributed across the function:

*common-term* = (NAND (NAND (NAND X X) Y) (NAND (NAND Y Y) X))

*adder* = (NAND (NAND (NAND *common-term* *common-term*) Z)

(NAND (NAND Z Z) *common-term*))

My homegrown nand gate compiler reduces this to Lee’s diagram. Absent a smarter compiler, shorter expressions don’t necessarily result in fewer gates.
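The two equations are easy to check mechanically. The sketch below is not my nand gate compiler; it is a small stand-in written for this comparison, and every function name in it is hypothetical. It parses each expression, confirms that it computes the sum bit of a full adder (X xor Y xor Z), counts the operators, and estimates the gate count under one simple assumption: each distinct subexpression becomes exactly one gate.

```python
from itertools import product


def tokenize(text):
    return text.replace('(', ' ( ').replace(')', ' ) ').split()


def parse(tokens):
    """Parse one s-expression, consuming tokens from the front of the list."""
    token = tokens.pop(0)
    if token != '(':
        return token              # a variable name
    node = []
    while tokens[0] != ')':
        node.append(parse(tokens))
    tokens.pop(0)                 # drop the closing parenthesis
    return node


def evaluate(node, env):
    if isinstance(node, str):
        return env[node]
    op, a, b = node
    assert op == 'NAND'
    return 1 - (evaluate(a, env) & evaluate(b, env))


def count_ops(node):
    """Number of NAND operators in the expression as written."""
    if isinstance(node, str):
        return 0
    return 1 + sum(count_ops(child) for child in node[1:])


def count_gates(node, seen=None):
    """Gates needed if every distinct subexpression is built only once."""
    seen = set() if seen is None else seen
    if not isinstance(node, str):
        seen.add(repr(node))
        for child in node[1:]:
            count_gates(child, seen)
    return len(seen)


def is_sum_bit(node):
    return all(evaluate(node, {'X': x, 'Y': y, 'Z': z}) == x ^ y ^ z
               for x, y, z in product((0, 1), repeat=3))


COMMON = "(NAND (NAND (NAND X X) Y) (NAND (NAND Y Y) X))"
LEE = parse(tokenize(
    f"(NAND (NAND (NAND {COMMON} {COMMON}) Z) (NAND (NAND Z Z) {COMMON}))"))
MINE = parse(tokenize(
    "(NAND (NAND (NAND (NAND Z Y) (NAND (NAND Y Y) (NAND Z Z))) X) "
    "(NAND (NAND (NAND (NAND X X) Y) (NAND (NAND X X) Z)) (NAND Z Y)))"))

for name, tree in (("Lee", LEE), ("mine", MINE)):
    print(name, is_sum_bit(tree), count_ops(tree), count_gates(tree))
```

Under that sharing assumption, Lee’s 20-operator equation needs 10 gates while my 14-operator equation needs 12, which is the point of the comparison: the expression with fewer operators is not the one with fewer gates.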

However, my code that constructs shortest expressions can easily use a different heuristic and find expressions that result in the fewest gates using my current nand gate compiler. Three different equations result in 8 gates. Feed the output of G0 and G4 into one more nand gate and you get the carry.

Is any more optimization possible? I’m having trouble working up enthusiasm for optimizing my nand gate compiler. However, sufficient incentive would be if Mr. hot-shot-digital-circuit-designer can one up me.

Simplifying Boolean Expressions

0 0 | 1

0 1 | 1 => (AND (NOT (AND X (NOT Y))) (NOT (AND X Y)))

1 0 | 0

1 1 | 0

Via inspection, the simpler form is (NOT X).

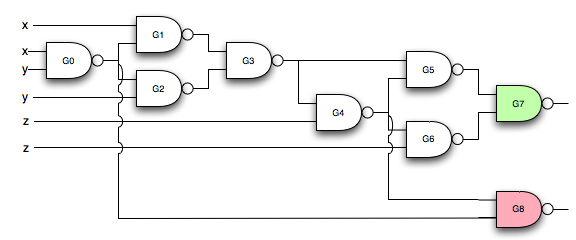

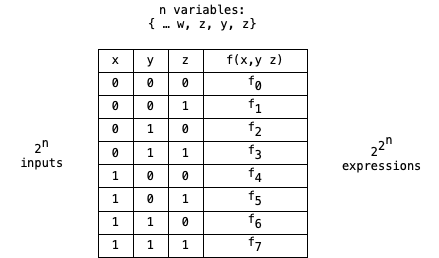

Consider the problem of simplifying Boolean expressions. A truth table with n variables results in $2^{2^n}$ expressions as shown in the following figure.

Finding the simplest expression is conceptually simple. For expressions of three variables we can see that the expression that results in f7=f6=f5=f4=f3=f2=f1=f0 = 0 is the constant 0. The expression that results in f7..f0 = 1 is the constant 1. Then we progress to the functions of a single variable. f7..f0 = 11110000 is X. f7..f0 = 00001111 is (NOT X). f7..f0 = 11001100 is Y and 00110011 is (NOT Y). f7..f0 = 10101010 is Z and 01010101 is (NOT Z).

Create a table Exyz of $2^{2^3}$ = 256 entries. Exyz(0) = “0”. Exyz(255) = “1”. Exyz(11110000 {i.e. 240}) = “X”. Exyz(00001111 {15}) = “(NOT X)”. Exyz(204) = “Y”, Exyz(51) = “(NOT Y)”, Exyz(170) = “Z” and Exyz(85) is “(NOT Z)”. Assume that this process can continue for the entire table. Then to simplify (or generate) an expression, evaluate f7..f0 and look up the corresponding formula in Exyz.
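One way to fill in the whole table is to build expressions in order of increasing size. The sketch below is an assumption-laden illustration, not the code I actually use: it allows only NOT and AND, measures “simplest” by operator count, and takes X as the high-order column (11110000, i.e. 240) alongside Y = 204 and Z = 170. The names `build_table` and `truth_index` are hypothetical.

```python
X, Y, Z = 0b11110000, 0b11001100, 0b10101010


def build_table():
    """Map each 8-bit truth table f7..f0 to an expression with the fewest
    operators, using only NOT and AND (an assumed cost measure)."""
    table = {0: "0", 255: "1", X: "X", Y: "Y", Z: "Z"}
    # layers[c] holds the functions whose shortest form has c operators.
    layers = [list(table)]
    while len(table) < 256:
        cost = len(layers)
        new = {}
        # NOT adds one operator to a function of cost - 1.
        for f in layers[cost - 1]:
            g = ~f & 0xFF
            if g not in table:
                new.setdefault(g, f"(NOT {table[f]})")
        # AND adds one operator to two functions whose costs sum to cost - 1.
        for a in range(cost):
            for f in layers[a]:
                for g in layers[cost - 1 - a]:
                    h = f & g
                    if h not in table:
                        new.setdefault(h, f"(AND {table[f]} {table[g]})")
        table.update(new)
        layers.append(list(new))
    return table


def truth_index(fn):
    """Evaluate fn(x, y, z) on all inputs; bit 4x + 2y + z holds the result."""
    return sum(int(bool(fn(i >> 2 & 1, i >> 1 & 1, i & 1))) << i
               for i in range(8))


Exyz = build_table()
messy = lambda x, y, z: (not (x and not y)) and (not (x and y))
print(Exyz[truth_index(messy)])  # (NOT X)
```

The lookup at the end is the simplification step described above: evaluate the expression to get f7..f0, then read the short form out of the table.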

While conceptually easy, this is computationally hard. Expressions of 4 variables require an expression table with $2^{16}$ entries and 5 variables require $2^{32}$ entries -- not something I’d want to try to compute on any single machine at my disposal. For 8 variables the table would need $2^{256}$ (roughly $10^{77}$) entries, a number approaching the number of particles in the universe, estimated to be $10^{87}$.
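The growth is easy to tabulate. A quick sketch (the function name `table_size` is mine):

```python
def table_size(n):
    """Number of distinct Boolean functions of n variables: 2^(2^n)."""
    return 2 ** (2 ** n)


for n in range(6):
    print(n, table_size(n))
# 0 2
# 1 4
# 2 16
# 3 256
# 4 65536
# 5 4294967296

# For 8 variables the count has 78 decimal digits, i.e. it is about 10^77.
print(len(str(table_size(8))))  # 78
```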

Still, simplifying expressions of up to 4 variables is useful and considering the general solution is an interesting mental exercise.

To determine the total number of equations for expressions with three variables, start by analyzing simpler cases.

With zero variables there are two unique expressions: “0” and “1”.

With one variable there are four expressions: “0”, “1”, “X”, and “(NOT X)”.